VMware has revealed the next version of Virtual SAN (VSAN), 6.1, at VMworld US 2015. This incremental upgrade from the current 6.0 version, which was announced back in February, may seem small compared to the last update, but there are a few big things in it that I wanted to highlight.

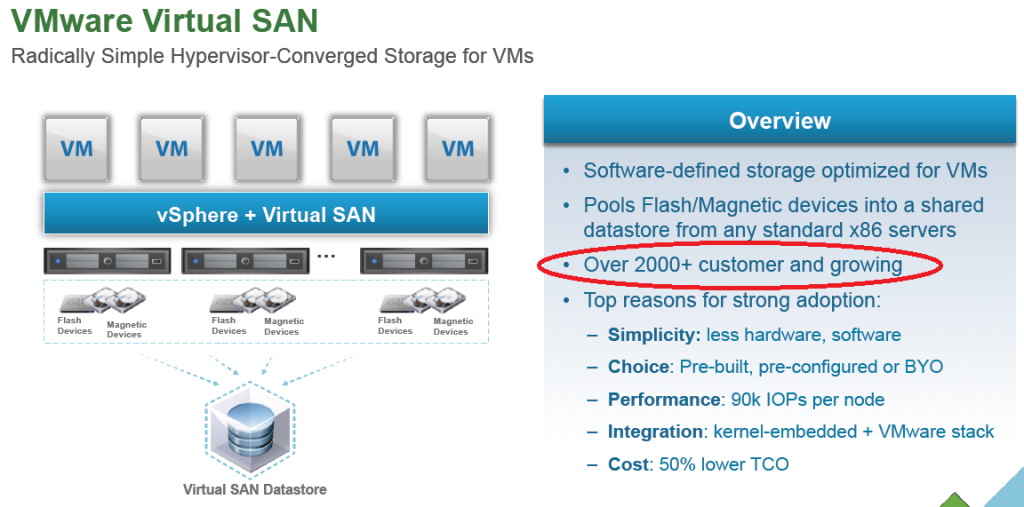

Who’s using VSAN today?

The first thing I wanted to point out from VMware’s marketing slide for VSAN is the fact that they claim 2,000+ customers. That seems like a pretty small number, around the typical range that a 1-2 year old startup might expect to have. But VMware is not a startup, they have been marketing the hell out of VSAN, it has been available now for over a year and a half, and it’s built into vSphere. Given all that, the number seems pretty low.

I can think of at least 2 reasons for the low number. The first is cost: VSAN is still pretty expensive to license, and when you start adding up the storage and license costs for each host you’re near the ballpark of a physical SAN. The second is what I call the comfort blanket: people get attached to what they currently use (a physical SAN) and are very reluctant to change it, despite all the promises of wonderful things that something else might bring. As a result they tend to stick to what they know and are comfortable with instead of venturing outside that comfort zone into unfamiliar territory.

What’s New in Virtual SAN 6.1?

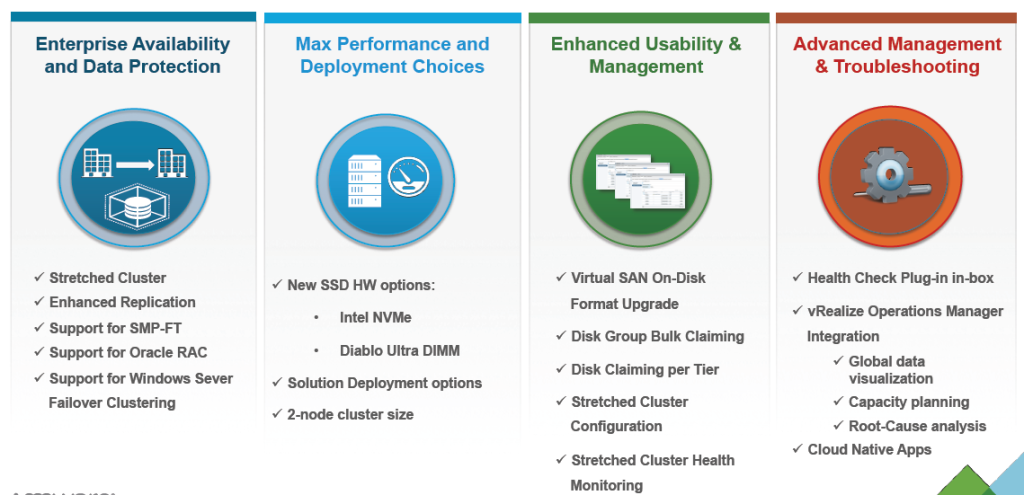

If you want the quick overview of everything new in 6.1, the slide above illustrates that. The big things that stand out are support for Stretched Storage Clusters (i.e. vMSC), support for 2-node VSAN clusters, support for multi-vCPU (SMP) Fault Tolerance, vRealize Ops integration and new enhanced replication with lower RPOs. Continue reading for more details on all these.

Stretched Clustering

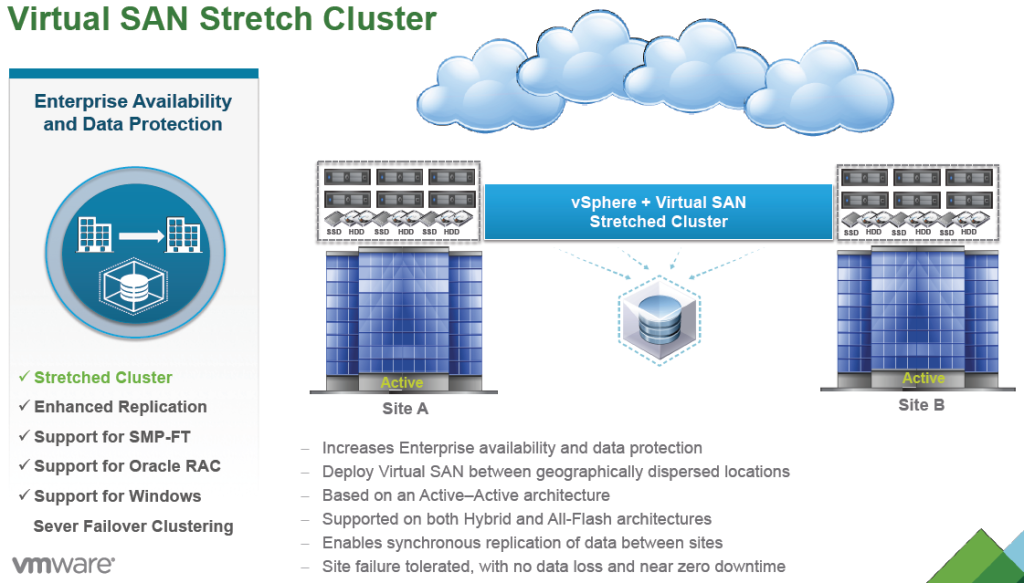

Prior to version 6.1 you were limited to deploying a VSAN cluster to a single site, as it did not support higher latency between VSAN nodes. Now with support for stretched clusters you can spread your VSAN nodes farther apart to provide a higher level of storage availability that can survive not just a single node failure but also a site failure. This is very similar to a vSphere Metro Storage Cluster (vMSC) solution; the difference is that vMSC is intended for external storage arrays while this solution is built into vSphere, hence there is no need for it to be vMSC certified. Just like vMSC, however, this is intended for metro distances only, as it is limited by latency (5ms).

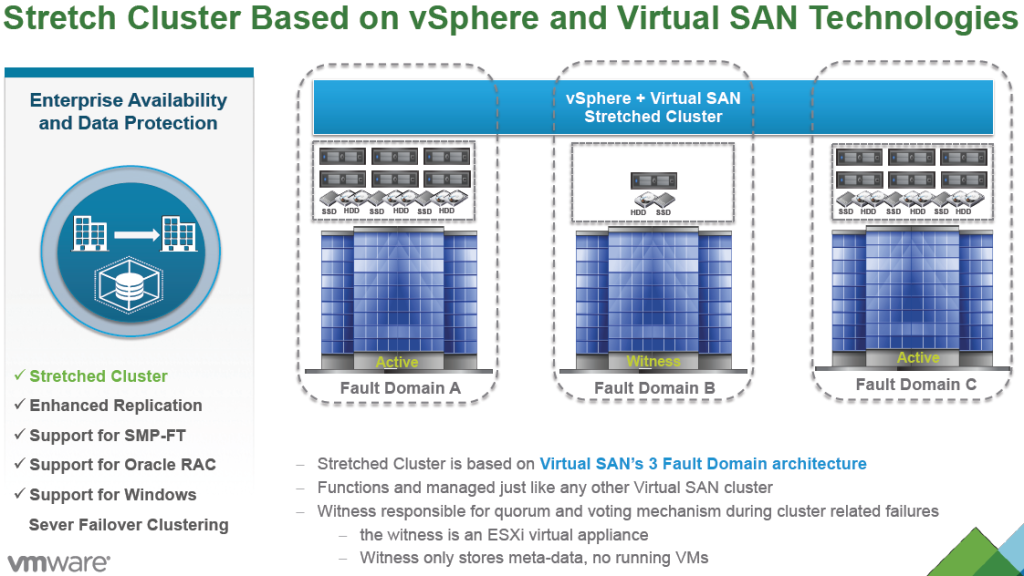

The VSAN Stretched Cluster solution is available in either VSAN configuration (hybrid or all-flash) and utilizes synchronous replication between sites to keep all nodes continually in sync. It is also based on an active-active architecture, so storage is usable at either site. As with just about any multi-site storage solution, a 3rd party witness is required for quorum and decision making to resolve split brain situations when a site failure occurs. The witness can be deployed as a virtual appliance at a 3rd site or hosted in the cloud via vCloud Air. As the 3rd site option is often not available for the many companies that only have 2 sites, the vCloud Air option is a nice alternative.
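
To make the role of the witness concrete, here is a minimal sketch of generic majority voting with three voters (two data sites plus a witness). It is not VSAN’s actual implementation; the voter names and function names are hypothetical and only illustrate why a third vote lets one side of a split keep running.

```python
from typing import FrozenSet, Iterable, Optional, Set

# Hypothetical voters: one vote per data site plus one for the witness.
VOTERS: FrozenSet[str] = frozenset({"site_a", "site_b", "witness"})


def has_quorum(reachable: Iterable[str]) -> bool:
    """Return True if the reachable voters form a strict majority."""
    votes = len(VOTERS & set(reachable))
    return votes * 2 > len(VOTERS)


def surviving_partition(
    partitions: Iterable[Iterable[str]],
) -> Optional[Set[str]]:
    """Given groups of voters that can still reach each other,
    return the group allowed to keep serving storage, or None."""
    for group in partitions:
        members = set(group)
        if has_quorum(members):
            return members
    return None


if __name__ == "__main__":
    # The inter-site link fails, but site_b can still reach the witness,
    # so site_b and the witness together hold 2 of the 3 votes.
    winner = surviving_partition([{"site_a"}, {"site_b", "witness"}])
    print(sorted(winner) if winner else "no quorum")
```

Without the witness, two isolated sites would each hold exactly half the votes and neither could safely decide that it is the survivor.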

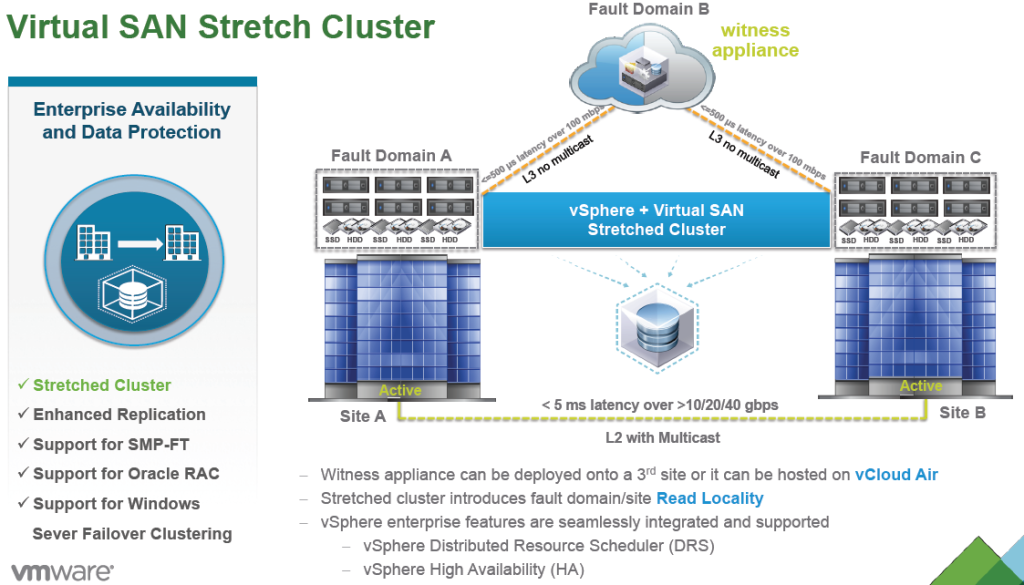

As mentioned, the requirement for this solution is that the two sites must have less than 5ms latency between them. This is pretty much standard for any type of synchronous replication solution, as the storage at the two sites must stay synchronized at all times and higher latency between sites can impact performance. The requirement for the connection to the 3rd site witness appliance is 100Mbps, but realistically, as there is no heavy data flow between the witness and storage sites, it can probably be much lower. The requirement for the connection between the 2 storage sites is 10Gbps, which is pretty high and is the primary reason why this solution is limited to metro distances. Note that because this is an active-active solution, both DRS & HA are supported between sites.
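
If you want to sanity check a pair of sites against these numbers, something like the following sketch could work. The thresholds come straight from the requirements above; the class, field and function names are hypothetical, and the measurements are assumed to come from your own network testing rather than from any VMware tool.

```python
from dataclasses import dataclass
from typing import List

# Thresholds as stated in the requirements above.
MAX_INTERSITE_LATENCY_MS = 5.0          # must be less than 5ms
MIN_INTERSITE_BANDWIDTH_MBPS = 10_000   # 10Gbps between the storage sites
MIN_WITNESS_BANDWIDTH_MBPS = 100        # 100Mbps to the witness site


@dataclass
class LinkMeasurements:
    """Hypothetical container for values you measured yourself."""
    intersite_latency_ms: float
    intersite_bandwidth_mbps: float
    witness_bandwidth_mbps: float


def check_stretched_cluster_links(m: LinkMeasurements) -> List[str]:
    """Return a list of unmet requirements (empty if all are met)."""
    problems = []
    if m.intersite_latency_ms >= MAX_INTERSITE_LATENCY_MS:
        problems.append("inter-site latency is not below 5ms")
    if m.intersite_bandwidth_mbps < MIN_INTERSITE_BANDWIDTH_MBPS:
        problems.append("inter-site bandwidth is below 10Gbps")
    if m.witness_bandwidth_mbps < MIN_WITNESS_BANDWIDTH_MBPS:
        problems.append("witness bandwidth is below 100Mbps")
    return problems


if __name__ == "__main__":
    measured = LinkMeasurements(3.2, 10_000, 50)
    for problem in check_stretched_cluster_links(measured):
        print(problem)
```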

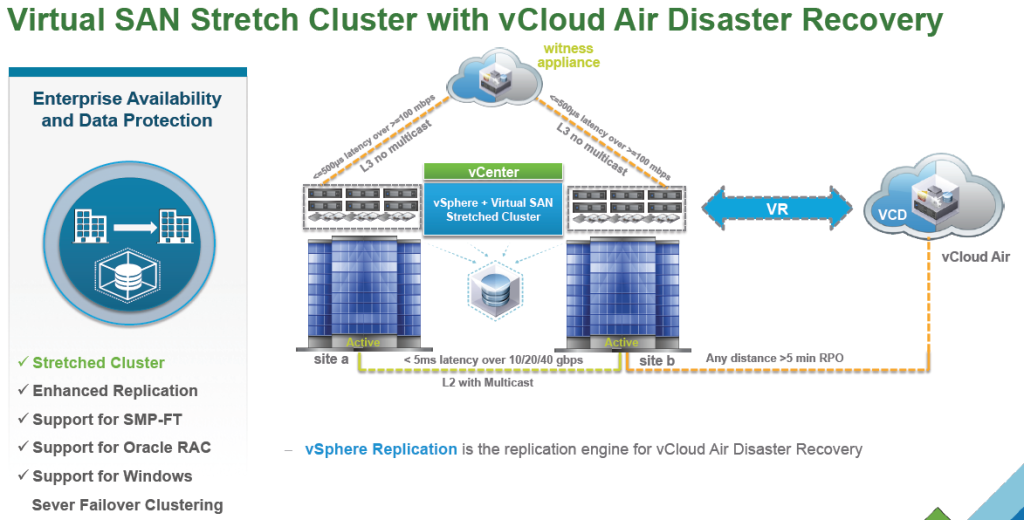

While having continuous availability is critical to some customers, there is often the need to extend that out further to protect against regional disasters. You can combine a VSAN stretched cluster with VMware’s vCloud Air Disaster Recovery Solution, which utilizes vSphere Replication (asynchronous) to replicate your VSAN stretched cluster to a cloud based failover environment. This provides you with double protection against either a single site failure or a regional event that might impact both your stretched cluster sites.

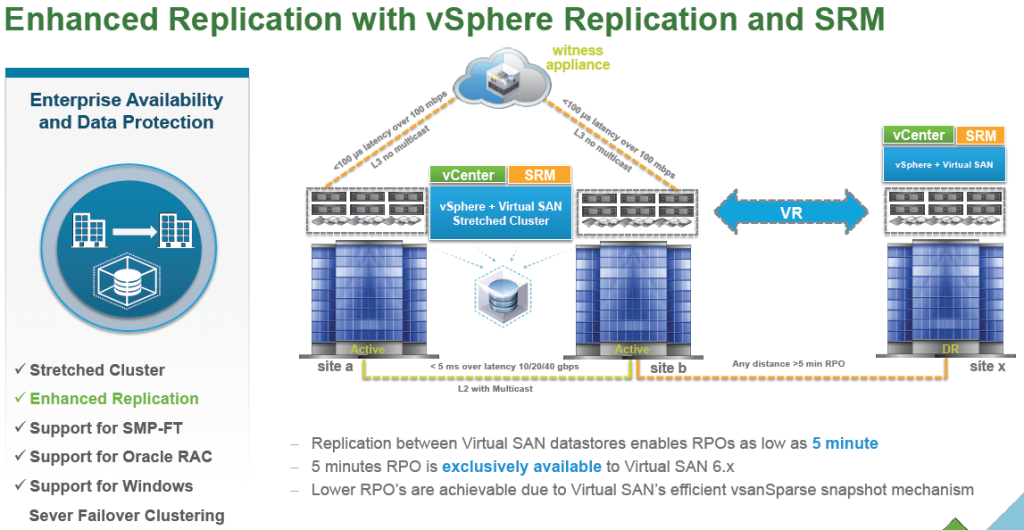

In addition to replication to a vCloud Air based DR cloud, there is also enhanced replication to an offsite location using vSphere Replication with Site Recovery Manager. This improved replication is more efficient due to the new VSAN vsanSparse snapshot mechanism that was introduced in vSphere 6.0, and VMware is claiming that RPOs as low as 5 minutes can be achieved as a result.

SMP-Fault Tolerance

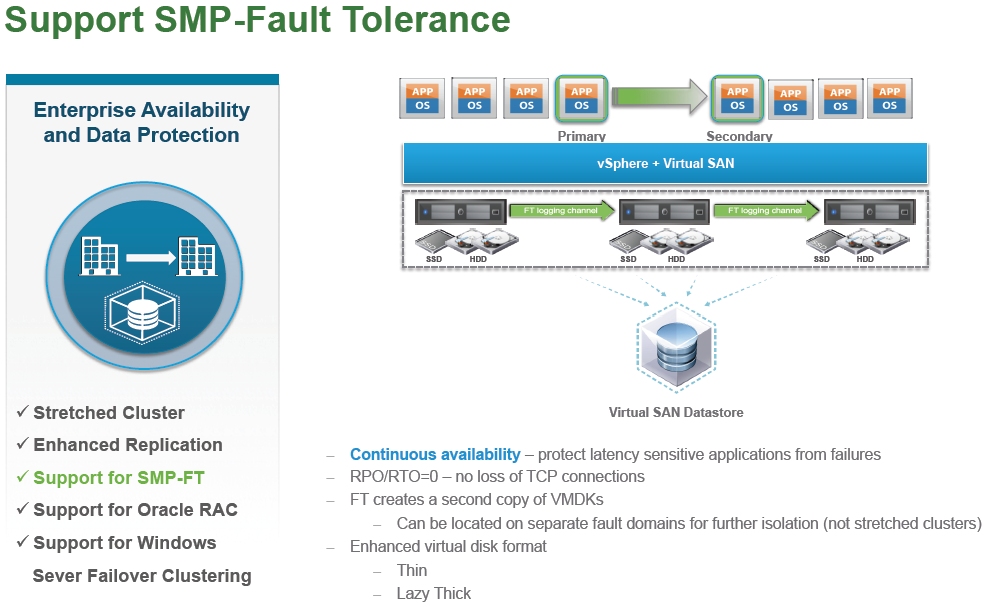

I don’t think many people use the vSphere Fault Tolerance (FT) feature much, due to the many limitations and restrictions that it puts on VMs. Support for multi-vCPU (SMP) VMs was finally introduced in vSphere 6.0, but VSAN was not supported with Fault Tolerance (even for single-vCPU VMs). Now with 6.1 you can use the FT feature with VSAN if you so desire, including with SMP VMs.

Support for Application Clustering Technologies

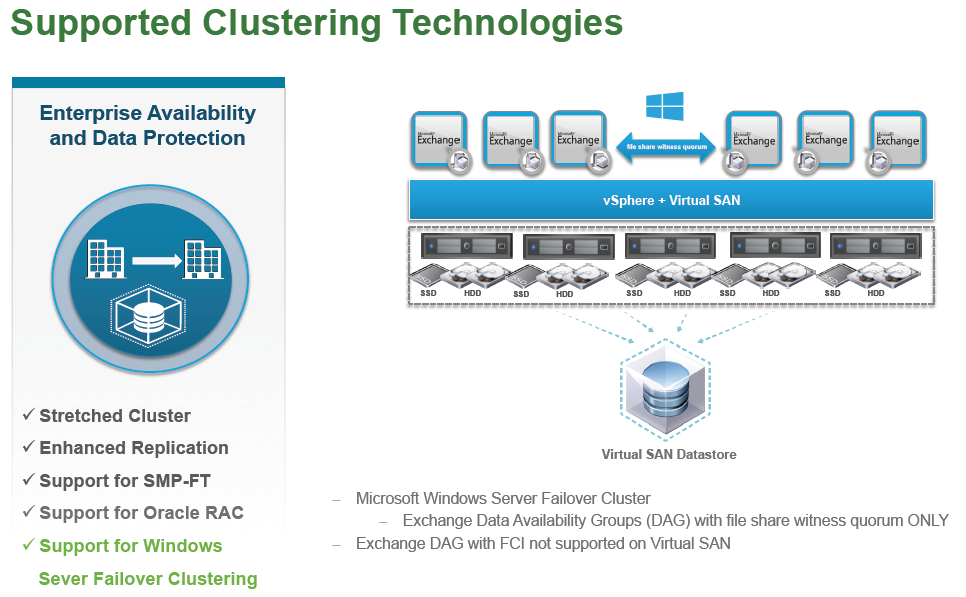

VSAN now supports certain application specific clustering technologies including Oracle Real Application Clusters (RAC), Microsoft Exchange and Microsoft SQL Server. Note that for Exchange and SQL the only supported configurations are those that use the file share quorum witness; Failover Cluster Instances (FCI) are not supported.

2-Node ROBO Solution

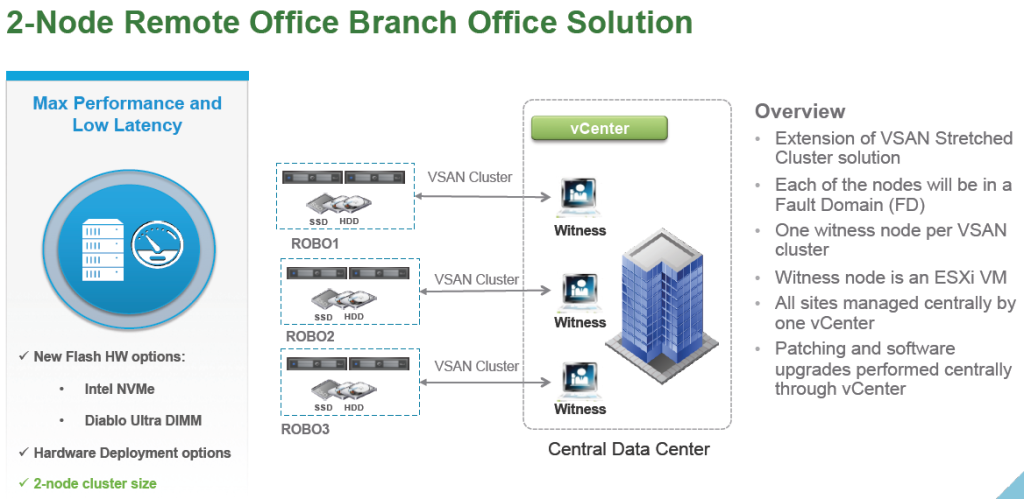

While VMware has worked to scale VSAN on the high end up to 64 nodes, the minimum number of supported nodes has remained at 3 until now. VSAN now supports a 2-node cluster, but this is only meant as a ROBO (remote office/branch office) solution. Just as a stretched cluster does, it requires a witness node, which is deployed at a central location and managed by vCenter Server. With this solution each node will be in a Fault Domain and each ROBO cluster will have its own independent witness node, with all management done by a single vCenter Server instance. This is great for ROBO deployments that have many sites where companies want to keep as little infrastructure as possible at each site. This solution is not intended for standalone 2-node deployments, where the minimum remains at 3 nodes.

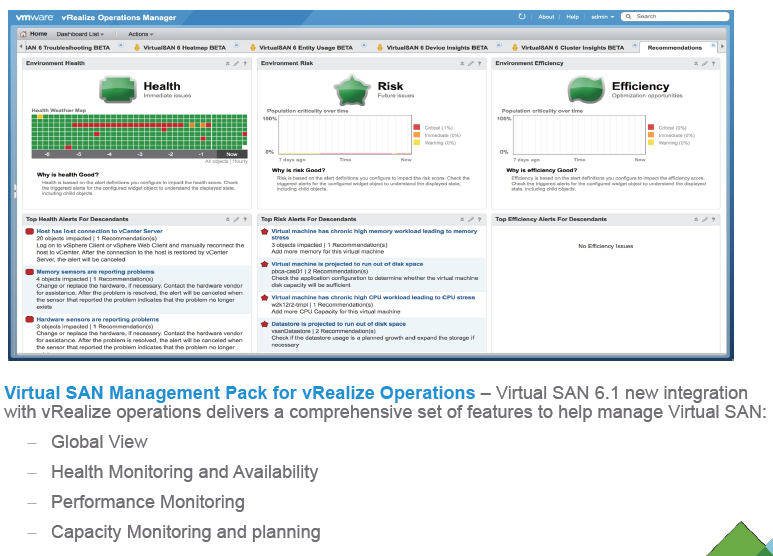

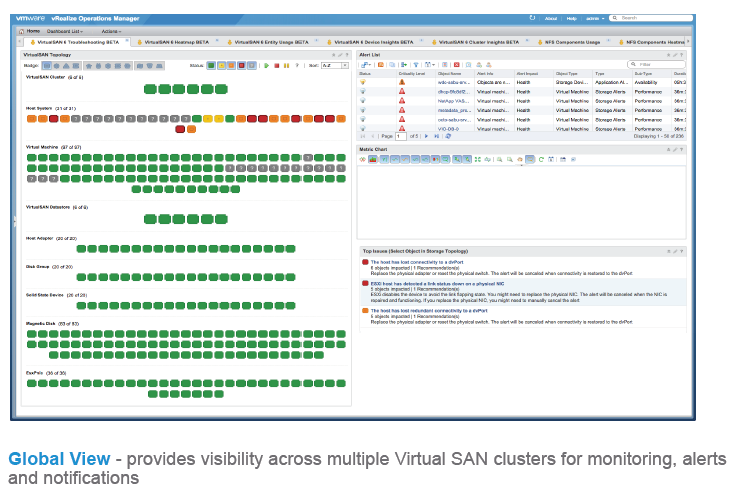

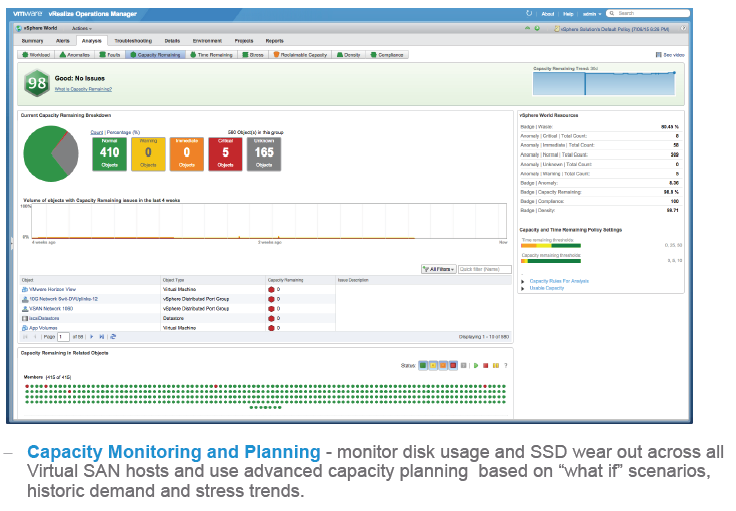

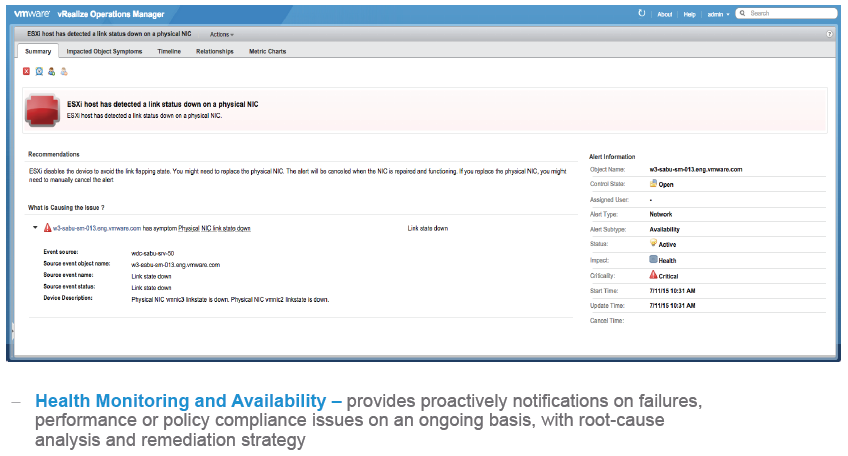

Virtual SAN Management Pack for vRealize Operations

Virtual SAN now has integration with vRealize Operations (vROps) for enhanced health, performance and capacity monitoring. The integration isn’t native to vROps; VSAN integrates the same way that every vendor does, through a Management Pack that is installed in vROps and provides support for VSAN. This provides some much needed better monitoring for VSAN. It includes a Global View that provides visibility across multiple VSAN clusters for monitoring, alerts and notifications. It also includes capacity monitoring that can track disk usage and SSD wear out across all VSAN hosts and use advanced capacity planning based on “what if” scenarios, historic demand and stress trends. In addition, the health monitoring provides proactive notifications on failures, performance or policy compliance issues on an ongoing basis, with root-cause analysis and remediation strategy.

Some additional new things

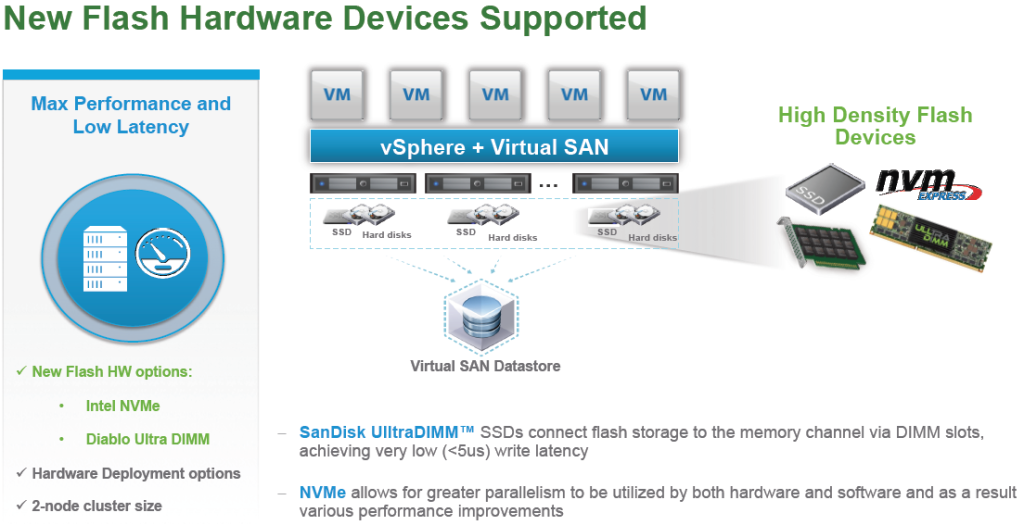

Some additional smaller things in VSAN 6.1 include support for new higher performance flash devices such as SanDisk’s UltraDIMMs and devices that support NVMe (Non-Volatile Memory Express), a specification that allows SSDs to connect to the PCIe bus instead of through traditional SAS/SATA interfaces. Using the PCIe bus allows for much lower latency and support for multiple queues and higher queue depths; the end result improves performance and helps eliminate bottlenecks.

VSAN 6.1 also allows for non-destructive file system upgrades from previous versions of VSAN which makes upgrading much easier.

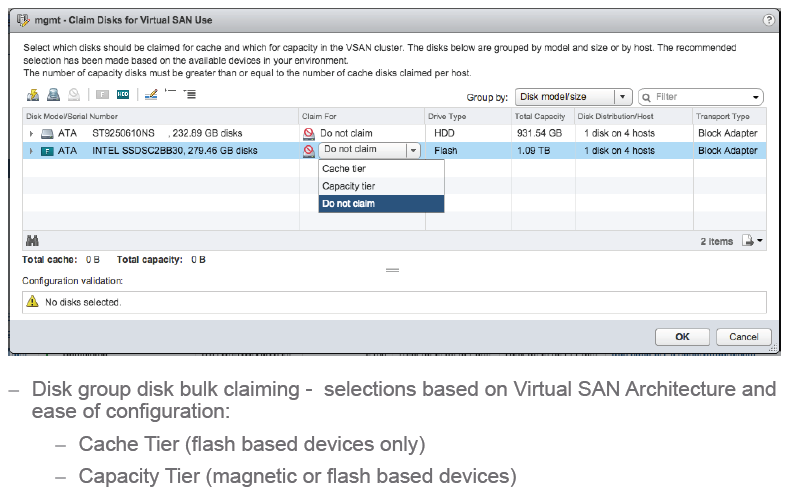

VSAN 6.1 also makes it easier to claim disks into a VSAN node by allowing you to choose many simultaneously instead of choosing them one by one.

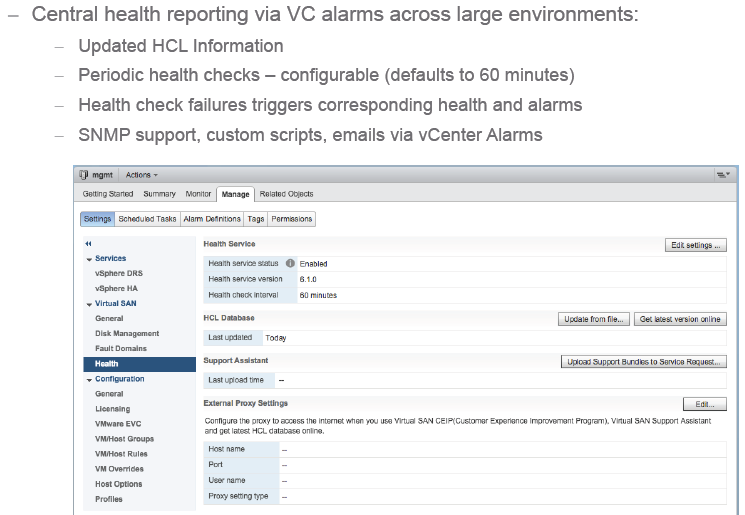

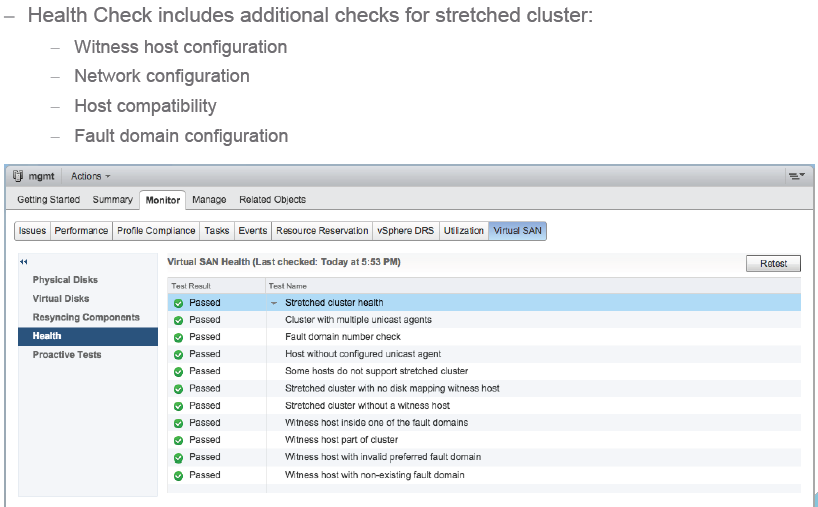

Finally, health reporting has been improved with new information, configuration options and support for stretched clusters.
