VMware’s new Virtual Volumes (VVols) storage architecture required some changes to the SCSI specifications for block arrays to support the sub-LUN model that VVols uses. Because of this, I/O vendors had to update their software to support the new Protocol Endpoint (PE) component in VVols, which serves as the data path between hosts and the VMs (VVols) residing on storage arrays. In the period after VVols was released, many I/O vendors were in catch-up mode adding this support to their I/O adapter firmware. As a result, the I/O adapter you are using may not work with VVols, or may require a firmware upgrade to support it.

Let’s first look at what changed with VVols that required changes to I/O adapter firmware. A traditional block I/O adapter connects to a LUN on a storage array where your VMFS datastore is located. To connect to the LUN it simply needs the data path information, which includes the HBA number, the channel, the target (identified by its WWN on Fibre Channel) and of course the LUN ID of the volume backing the VMFS datastore. You’ll see this in the vSphere client when selecting an I/O adapter, in the format vmhbaAdapter:CChannel:TTarget:LLUN (e.g. vmhba1:C0:T3:L1).

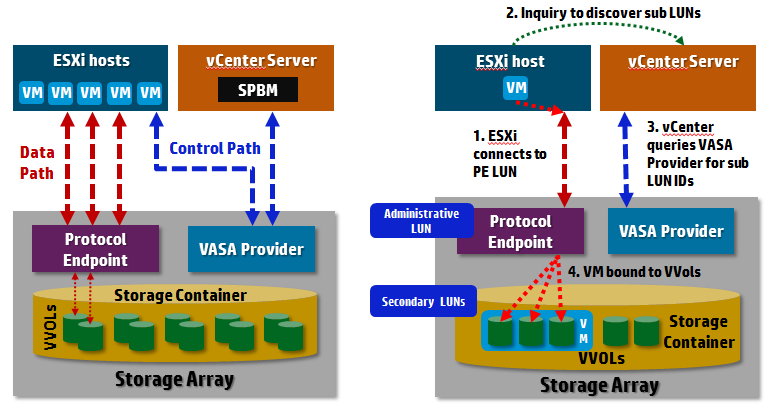

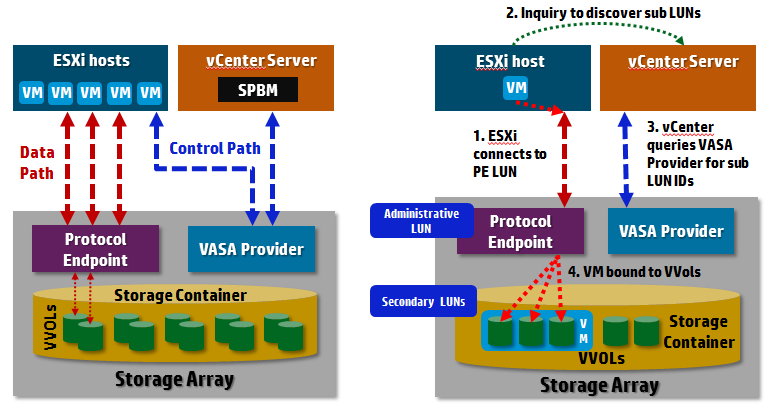

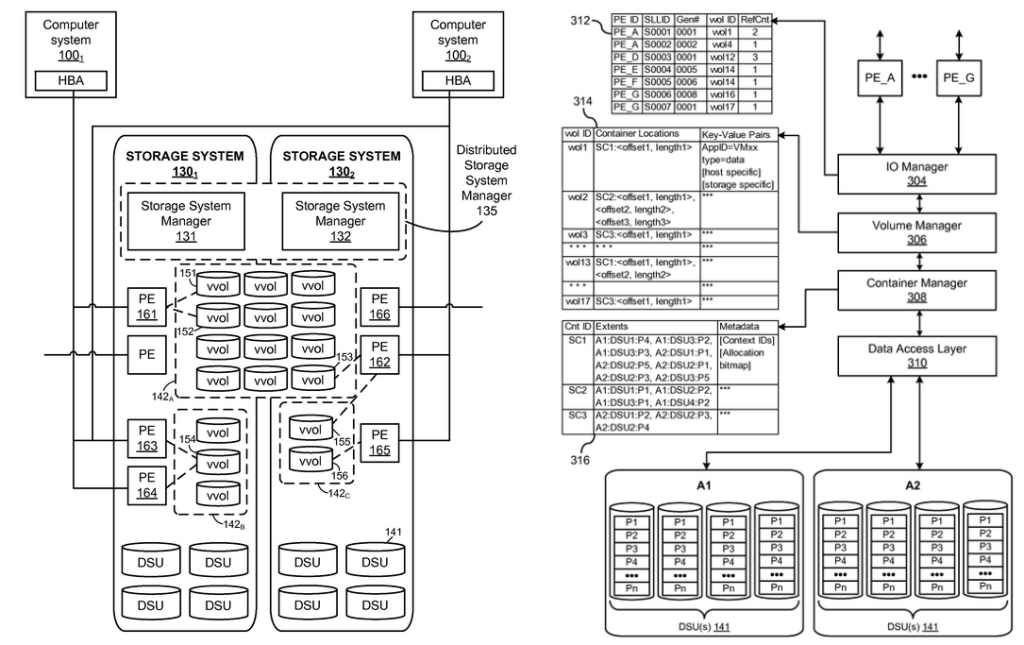

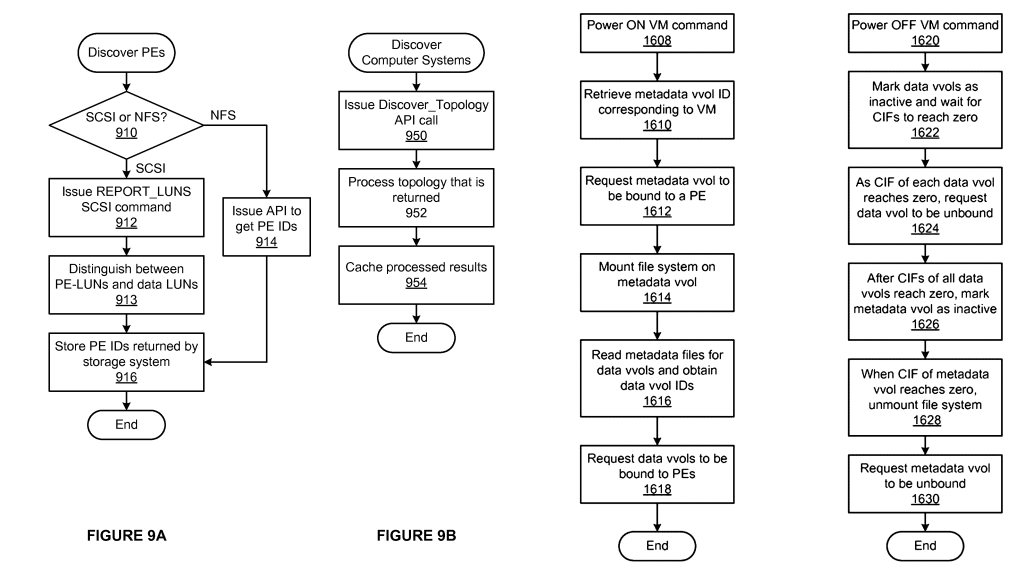
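To make the vmhbaAdapter:CChannel:TTarget:LLUN runtime name format concrete, here is a minimal Python sketch that splits a runtime name into its four parts. The regular expression and the `RuntimeName` and `parse_runtime_name` names are hypothetical, chosen for illustration; the sketch assumes names always follow the vmhbaN:Cn:Tn:Ln pattern shown in the vSphere client and is not a VMware API.

```python
import re
from typing import NamedTuple

# Hypothetical pattern for runtime names such as vmhba1:C0:T3:L1
# (adapter, channel, target, LUN).
RUNTIME_NAME = re.compile(r"^(vmhba\d+):C(\d+):T(\d+):L(\d+)$")


class RuntimeName(NamedTuple):
    adapter: str
    channel: int
    target: int
    lun: int


def parse_runtime_name(name: str) -> RuntimeName:
    """Split an ESXi path runtime name into adapter, channel, target and LUN."""
    match = RUNTIME_NAME.match(name.strip())
    if match is None:
        raise ValueError(f"not a runtime name: {name!r}")
    adapter, channel, target, lun = match.groups()
    return RuntimeName(adapter, int(channel), int(target), int(lun))


print(parse_runtime_name("vmhba1:C0:T3:L1"))
# RuntimeName(adapter='vmhba1', channel=0, target=3, lun=1)
```

Note that nothing in this four-part name has room for a second-level identifier, which is the gap the VVols changes described next had to fill.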

VVols introduces the concept of Secondary LUN IDs, which are essentially an additional layer of LUN numbering that supports far more sub-LUNs than an array traditionally supports. The way this works is that a host connects to a special Administrative LUN on the storage array via the Protocol Endpoint. This Admin LUN has no storage allocated to it and serves as a gateway to the sub-LUNs beneath it; its LUN ID is usually greater than 255 to identify it as a non-data LUN. A host cannot connect directly to a sub-LUN and must go through the Admin LUN to reach it. These Secondary LUN IDs are provided to a host via the VASA Provider, which is why the VASA Provider is such an important component. It is also why direct-to-SAN backup is not supported with VVols: you cannot connect to a sub-LUN without going through an ESXi host. The relationship between these components is outlined in the figure below:

The architecture is a bit complex and introduces additional identifiers beyond the LUN ID to connect to VVols on the array. These include secondary level IDs (SLLIDs), VVol IDs and reference counts; the PE LUN itself still has a traditional WWN associated with it. While both block and NFS arrays use protocol endpoints and SLLIDs, the special Admin (PE) LUN applies only to block storage arrays; if you are using an NFS array with VVols, there is no special PE LUN. With block arrays, PEs are discovered in-band using standard SCSI commands such as REPORT_LUNS, and each PE is identified by its WWN. With NFS, PEs are discovered out-of-band using an API that returns the IP address and mount point of the PE. PE LUNs on block storage are distinguished from traditional data LUNs by a special conglomerate bit (LU_CONG) that is set on them.
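The block-side pieces described above can be sketched in Python. This is a hypothetical model, not VMware's or any array vendor's implementation. `is_conglomerate` assumes the SPC-4 standard INQUIRY layout, in which LU_CONG is bit 6 of byte 1 (verify this against the spec revision you work from). `ProtocolEndpoint` stands in for an Admin (PE) LUN that hands out SLLIDs and keeps a reference count per VVol ID. In a real deployment the SLLID comes from the array when a VVol is bound through the VASA Provider; the simple counter here is only a placeholder for that.

```python
from dataclasses import dataclass, field

# Assumption: SPC-4 standard INQUIRY data carries LU_CONG in bit 6 of byte 1.
LU_CONG_MASK = 0x40


def is_conglomerate(inquiry: bytes) -> bool:
    """Return True if the standard INQUIRY data has the LU_CONG bit set."""
    if len(inquiry) < 2:
        raise ValueError("standard INQUIRY data is at least 2 bytes long")
    return bool(inquiry[1] & LU_CONG_MASK)


@dataclass
class ProtocolEndpoint:
    """Hypothetical model of a block PE: one Admin LUN fronting many VVols."""

    wwn: str     # the PE LUN keeps a traditional WWN
    lun_id: int  # LUN ID the host sees for the PE itself
    _sllid: dict[str, int] = field(default_factory=dict, init=False, repr=False)
    _refcount: dict[str, int] = field(default_factory=dict, init=False, repr=False)
    _next_sllid: int = field(default=1, init=False, repr=False)

    def bind(self, vvol_id: str) -> int:
        """Bind a VVol behind this PE; return its SLLID (reused if already bound)."""
        if vvol_id not in self._sllid:
            # Placeholder allocation: the array assigns real SLLIDs during a VASA bind.
            self._sllid[vvol_id] = self._next_sllid
            self._next_sllid += 1
        self._refcount[vvol_id] = self._refcount.get(vvol_id, 0) + 1
        return self._sllid[vvol_id]

    def unbind(self, vvol_id: str) -> None:
        """Drop one reference; release the SLLID when the count reaches zero."""
        self._refcount[vvol_id] -= 1
        if self._refcount[vvol_id] == 0:
            del self._refcount[vvol_id]
            del self._sllid[vvol_id]

    def address(self, vvol_id: str) -> tuple[int, int]:
        """I/O targets (PE LUN, SLLID); an unbound VVol has no address (KeyError)."""
        return (self.lun_id, self._sllid[vvol_id])
```

The point the model tries to capture is that I/O is always addressed as the PE LUN plus an SLLID. Asking for the address of a VVol that was never bound fails, mirroring the fact that a host cannot reach a sub-LUN without going through the PE.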


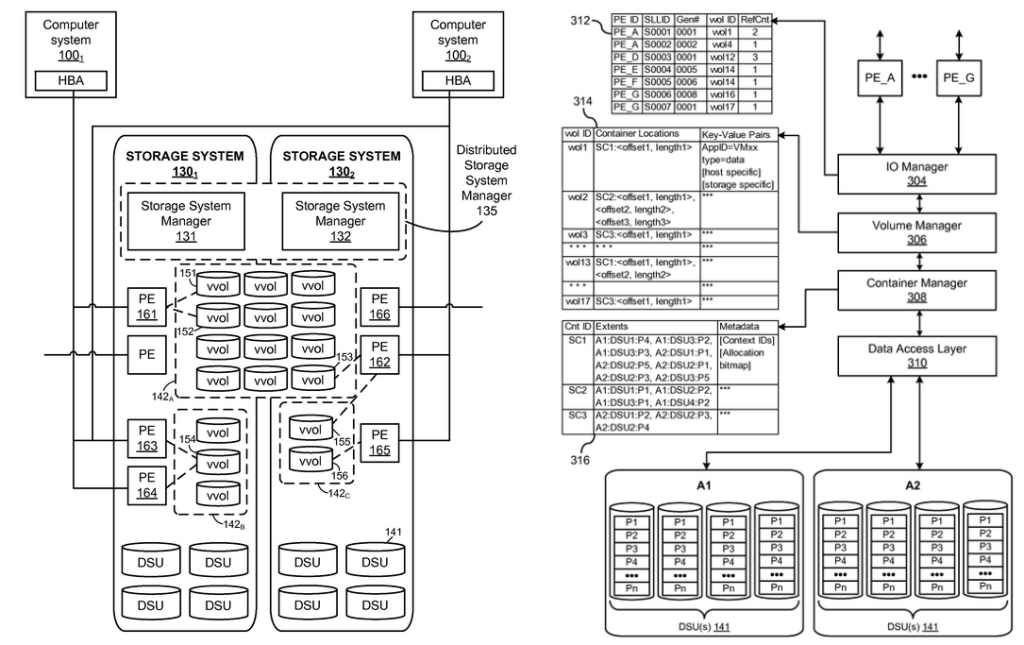

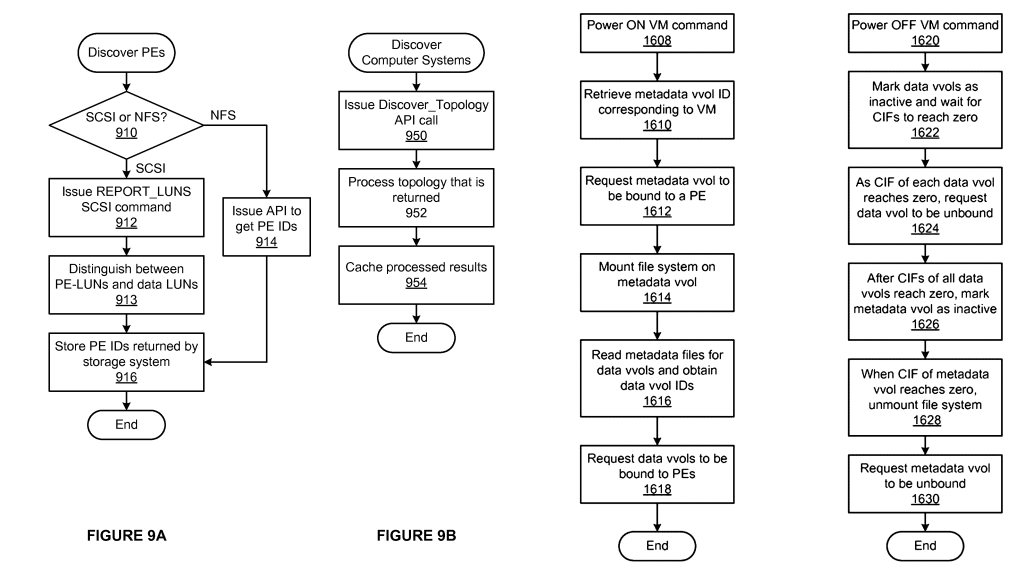

VMware has patented this new storage architecture, which it refers to as “Computer system accessing object storage system”. Here are a few diagrams from the patent; if you want to deep-dive into the architecture, the patent is worth a read.

This new sub-LUN architecture required VMware to submit proposals to the T10 committee to update the SCSI specifications, and the end result was that I/O adapters had to be updated to support it as well.


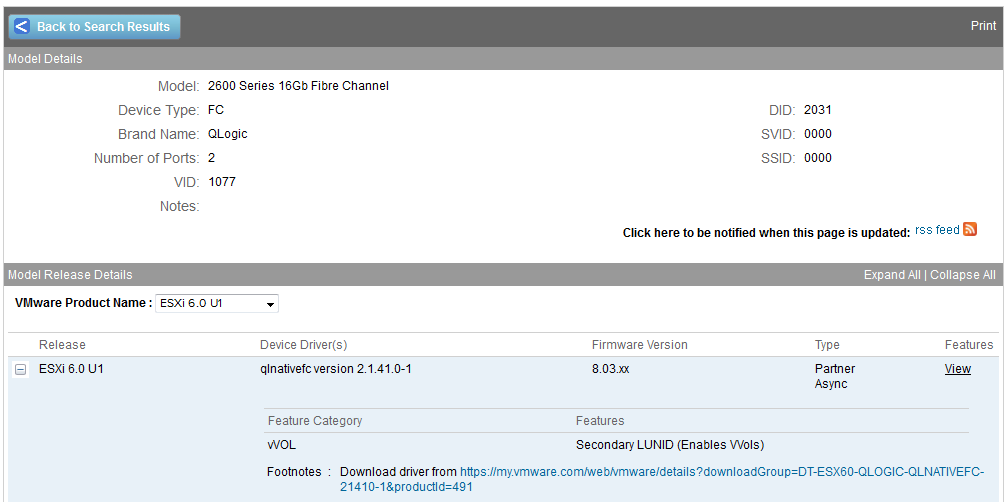

So now that we know the why, let’s look at the how: how do I know if my I/O adapter will support VVols? Perhaps the quickest and easiest way is to look it up on the VMware HCL. If you go to the VMware HCL and select I/O Adapters, you will notice a new Feature called “Secondary LUNID” that you can select. Select that feature and your I/O adapter brand name, optionally the device type (e.g. FC), and then search as shown below.

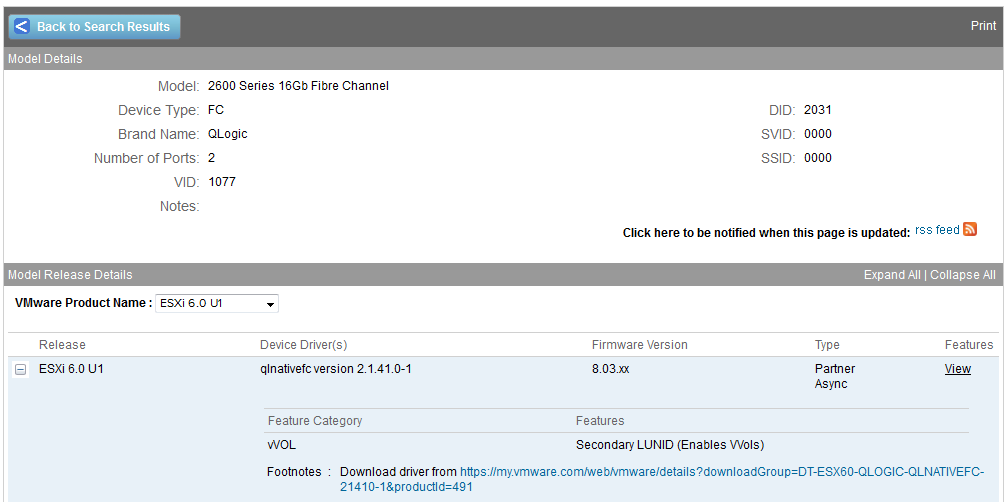

In the results you will see the firmware versions needed to support VVols and links to any special drivers that might be needed for ESXi to support it as shown below.


From what I’ve seen, some I/O adapters work with the standard ESXi image, some require custom server vendor ESXi images (e.g. HPE, Dell), and others require you to download and install a driver into ESXi. You can find many of these on the vSphere Drivers/Tools download page.


One thing to note: if your I/O adapter does not support VVols, or does not have the firmware to support it, you will see this error in your vmkernel logs:

Sanity check failed for path vmhbaX:Y:Z. The path is to a VVol PE, but it goes out of adapter vmhbaX which is not PE capable. Path dropped.
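If you would rather scan existing logs for this message than read through them by eye, a small Python filter like the one below can pull out the offending adapter names. It assumes the message text matches the wording quoted above; the function and pattern names are hypothetical.

```python
import re
from typing import Iterable

# Wording taken from the vmkernel message above; group 1 is the adapter name.
NOT_PE_CAPABLE = re.compile(r"goes out of adapter (\S+) which is not PE capable")


def adapters_not_pe_capable(log_lines: Iterable[str]) -> set[str]:
    """Return the adapter names that vmkernel reported as not PE capable."""
    found = set()
    for line in log_lines:
        match = NOT_PE_CAPABLE.search(line)
        if match:
            found.add(match.group(1))
    return found
```

On a host you could pass it an open log file, for example `adapters_not_pe_capable(open("/var/log/vmkernel.log"))`; /var/log/vmkernel.log is the usual location on ESXi, but treat the path as an assumption for your build.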

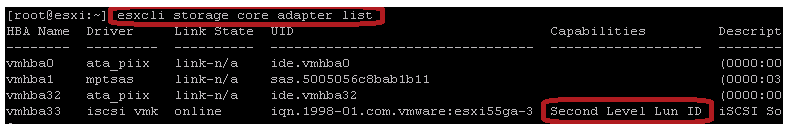

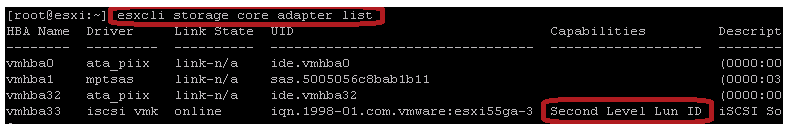

To check whether your HBA is VVol capable, run `esxcli storage core adapter list` on your ESXi hosts. If the I/O adapter supports VVols, you should see Second Level Lun ID (SLLID) listed in the Capabilities column, as shown below. VMware has a KB on this as well.

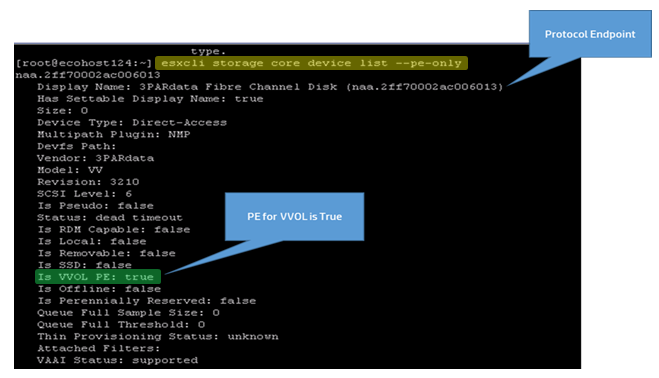

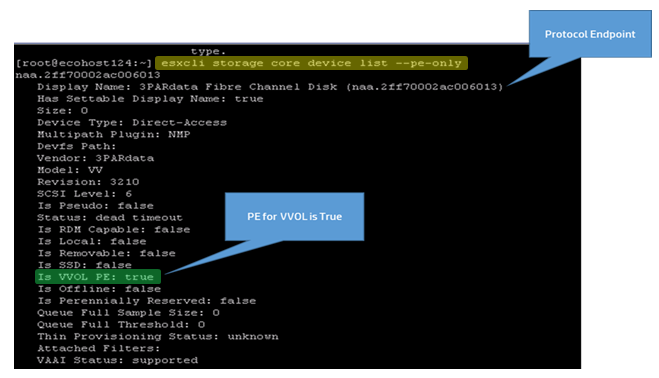
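If you need to check many hosts, the same test can be applied to saved command output. This Python sketch assumes each adapter appears on a single row beginning with its vmhba name, and that a VVol-capable adapter’s row contains the text “Second Level Lun ID”. The column layout can vary between ESXi versions, so treat this as a starting point rather than a robust parser; the function name is hypothetical.

```python
def sllid_capable_adapters(adapter_list_output: str) -> dict[str, bool]:
    """Map each adapter in `esxcli storage core adapter list` output to whether
    its row lists the Second Level Lun ID capability."""
    capable = {}
    for line in adapter_list_output.splitlines():
        columns = line.split()
        # Data rows start with the adapter name; header and separator rows do not.
        if columns and columns[0].startswith("vmhba"):
            capable[columns[0]] = "second level lun id" in line.lower()
    return capable
```

For example, `[name for name, ok in sllid_capable_adapters(text).items() if ok]` lists the adapters that advertise SLLID support.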

Once you are sure your I/O adapter has the required firmware and the proper ESXi I/O driver is present, you can check from the host whether it recognizes the Protocol Endpoint (Admin LUN). You’ll need to make sure that your VASA Provider is enabled and set up before you can do this. Once that is done, run `esxcli storage core device list --pe-only` on each host to see whether the PE is visible to it, as shown below.


In the output, a value of “true” for “Is VVOL PE” confirms that the host can successfully connect to the array PE to access VVols. This will often be false if your I/O adapter does not support VVols or VVols is not set up correctly.
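The “Is VVOL PE” check can also be scripted against saved output from `esxcli storage core device list --pe-only`. This Python sketch assumes the usual layout of an unindented device identifier followed by indented “Field: value” lines; the function names are hypothetical.

```python
from typing import Optional


def parse_device_list(output: str) -> dict[str, dict[str, str]]:
    """Parse esxcli device list output into {device_id: {field: value}}."""
    devices: dict[str, dict[str, str]] = {}
    current: Optional[dict[str, str]] = None
    for line in output.splitlines():
        if not line.strip():
            continue
        if not line[0].isspace():
            # An unindented line starts a new device block.
            current = devices.setdefault(line.strip(), {})
        elif current is not None and ":" in line:
            # Split on the first colon only, so values may contain colons.
            key, _, value = line.strip().partition(":")
            current[key.strip()] = value.strip()
    return devices


def visible_pes(output: str) -> list[str]:
    """Return the devices whose "Is VVOL PE" field is true."""
    return [
        device
        for device, fields in parse_device_list(output).items()
        if fields.get("Is VVOL PE", "").lower() == "true"
    ]
```

An empty result from `visible_pes` on a host where you expect PEs is a cue to revisit the adapter firmware, driver and VASA Provider checks above.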


So there you have it. It may seem a bit complicated, but there is a good chance that your I/O adapter already supports VVols and you won’t have to do anything to start using it. It’s still worth checking, though, to make sure your I/O adapters and storage arrays have the firmware levels required to support VVols. Hopefully this gives you a better understanding of how VVols work under the hood and of the relationships between hosts, protocol endpoints and VVols.