Author's posts

Aug 08 2013

The Top 10 Things You MUST Know About Storage for vSphere

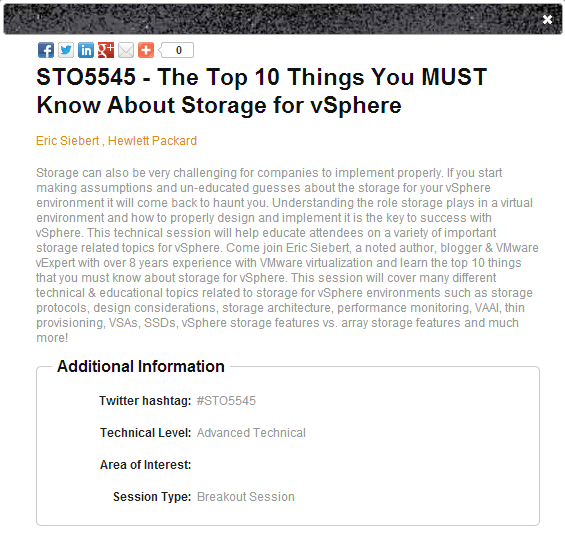

If you’re going to VMworld this year be sure to check out my session STO5545 – The Top 10 Things You MUST Know About Storage for vSphere, which will be on Tuesday, Aug. 27th from 5:00-6:00 pm. The session was showing full last week but they must have moved it to a larger room as it is currently showing 89 seats available. This session is crammed full of storage tips, best practices, design considerations and lots of other information related to storage. So sign up now before it fills up again. I look forward to seeing you there!

Jul 23 2013

Top 5 Tips for monitoring applications in a Virtual Environment

The focus on applications often gets lost in the shuffle when implementing virtualization which is unfortunate because if you look at any server environment its sole purpose is really to serve applications. No matter what hardware, hypervisor or operating system is used, ultimately it all comes down to being able to run applications that serve a specific function for the users in a server environment. When you think about it, without applications we wouldn’t even have a need for computing hardware, so it’s important to remember, it’s really all about the applications.

So with that I wanted to provide 5 tips for monitoring applications in a virtual environment that will help you ensure that your applications run smoothly once they are virtualized.

Tip 1 – Monitor all the layers

The computing stack consists of several different components all layered on top of each other. At the bottom is the physical hardware or bare metal as it is often referred to. On top of that you traditionally had the operating system like Microsoft Windows or Linux, but with virtualization you have a new layer that sits between the hardware and the operating system. The virtualization layer controls all access to physical hardware and the operating system layer is contained within virtual machines. Inside those virtual machines is the application layer where you install applications within the operating system. Finally you have the user layer that accesses the applications running on the virtual machines. Within each layer you have specific resource areas that need to be monitored both within and across layers. For example storage resources which are a typical bottleneck in virtual environments need storage management across layers so you get different perspectives from multiple viewpoints.

To have truly effective monitoring you need to monitor all the layers so you can get a perspective from each layer and also see the big picture interaction between layers. If you don’t monitor all the layers you are going to miss important events that are relevant at a particular layer. For example, if you focus only on monitoring at the guest OS layer, how do you know your applications are performing as they should or that your hypervisor does not have a bottleneck? So don’t miss anything when you monitor your virtual environment. You should monitor the application stack from end to end, all the way from the infrastructure to the applications and the users that rely on them.

Tip 2 – Pay attention to the user experience

So you monitor your applications for problems, but that won’t necessarily tell you how well they are performing from a user perspective. If you’re just looking at the application and you see it has plenty of memory and CPU resources and there are no error messages, you might get a false sense of confidence that it is running OK. If you dig deeper you may uncover hidden problems. This is especially true with virtualized applications that run on shared infrastructure and multi-tier applications that span servers and rely on other servers to function properly.

The user experience is what the user experiences when using the application and is the best measure of how an application is performing. If there is a bottleneck somewhere between shared resources or in one tier of an application it’s going to negatively impact the user experience which is based on everything working smoothly. So it’s important to have a monitoring tool that can simulate a user accessing an application so you can monitor from that perspective. If you detect that the user experience has degraded many tools will help you pinpoint where the bottleneck or problem is occurring.
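To make the idea of a simulated user concrete, here is a minimal sketch of a synthetic transaction probe. It is not how any particular monitoring product works; the function name `probe_user_transaction`, the stand-in `fake_login_page` action and the 2 second threshold are all assumptions made up for illustration. In practice the action would be a real login, page load or query against your application.

```python
import time
from typing import Callable


def probe_user_transaction(action: Callable[[], object], max_seconds: float) -> dict:
    """Run one simulated user action and report how long it took.

    `action` is any callable that performs the user-facing step.
    The result is flagged as degraded if the action raises an
    exception or takes longer than `max_seconds`.
    """
    start = time.perf_counter()
    try:
        action()
        succeeded = True
    except Exception:
        succeeded = False
    elapsed = time.perf_counter() - start
    return {
        "succeeded": succeeded,
        "elapsed_seconds": elapsed,
        "degraded": (not succeeded) or elapsed > max_seconds,
    }


def fake_login_page() -> str:
    # Hypothetical stand-in for a real request to the application.
    time.sleep(0.05)
    return "ok"


if __name__ == "__main__":
    # Threshold of 2 seconds is an arbitrary example value.
    print(probe_user_transaction(fake_login_page, max_seconds=2.0))
```

Running a probe like this on a schedule gives you a trend line from the user's point of view, which you can then compare against what the individual layers are reporting.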

Tip 3 – Understand application and virtualization dependencies

There are many dependencies that can occur with applications and in virtual environments. With applications you may have a multi-tier application that depends on other services running on other VMs such as a web tier, app tier and database tier. Multi-tier applications are typically all or nothing: if any one tier is unavailable or has a problem, the application fails. Clustering can be leveraged within applications to provide higher availability but you need to take special precautions to ensure a failure doesn’t impact the entire clustered application all at once. This may also extend beyond applications into other areas. For example, if Active Directory or DNS is unavailable it may also affect your applications. In addition there are also many dependencies inside a virtual environment. One big one is shared storage. VMs can survive a host failure with HA features that bring them up on another host, but if your primary shared storage fails it can take down the whole environment.

The bottom line is that you have to know your dependencies ahead of time; you can’t afford to find them out when problems happen. You should clearly document what your applications need to be able to function and ensure you take that into account in your design considerations for your virtual environment. Something as simple as DNS being unavailable can take down a whole datacenter as everything relies on it. You also need to go beyond understanding your dependencies and configure your virtual environment and virtualization management around them. Doing things like setting affinity settings so when VMs are moved around they are either kept together or spread across hosts will help minimize application downtime and balance performance.
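As a rough illustration of the "spread across hosts" case, the sketch below checks a VM-to-host placement against anti-affinity groups and reports any host that holds more than one VM from the same group. The data layout, the function name `anti_affinity_violations` and the VM and host names are hypothetical; this is not the vSphere rule engine, just the underlying idea.

```python
from collections import defaultdict


def anti_affinity_violations(placement, anti_affinity_groups):
    """Return {group: {host: [vms]}} for hosts holding 2+ VMs of one group.

    placement: dict mapping VM name -> host name.
    anti_affinity_groups: dict mapping group name -> list of VM names
    that should be kept on separate hosts.
    """
    violations = {}
    for group, vms in anti_affinity_groups.items():
        vms_by_host = defaultdict(list)
        for vm in vms:
            host = placement.get(vm)
            if host is not None:  # skip VMs that are not placed anywhere
                vms_by_host[host].append(vm)
        crowded = {h: sorted(v) for h, v in vms_by_host.items() if len(v) > 1}
        if crowded:
            violations[group] = crowded
    return violations


if __name__ == "__main__":
    # Example inventory; all names are made up.
    placement = {"web1": "esx1", "web2": "esx1", "db1": "esx2", "db2": "esx3"}
    groups = {"web-tier": ["web1", "web2"], "db-cluster": ["db1", "db2"]}
    print(anti_affinity_violations(placement, groups))
```

Here both web servers sit on the same host, so a single host failure would take out the whole web tier, which is exactly the kind of dependency you want to catch before it bites you.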

Tip 4 – Leverage VMware HA OS & application heartbeat monitoring

One of the little known functions of VMware’s High Availability (HA) feature is the ability to monitor both operating systems and applications to ensure that they are responding. HA was originally designed to detect host failures in a cluster and automatically restart VMs on failed hosts on other hosts in the cluster. It was further enhanced to detect VM failures such as a Windows blue screen by monitoring a heartbeat inside the VM guest OS through the VMware Tools utility. This feature is known as Virtual Machine (VM) Monitoring and will automatically restart a VM if it detects the loss of the heartbeat. To help avoid false positives it was further enhanced to detect I/O occurring by the VM to ensure that it was truly down before restarting it.

VMware HA Application Monitoring was introduced as a further enhancement to HA in vSphere 5 that took HA another level deeper, to the application. Again leveraging VMware Tools and using a special API that VMware developed for this, you can now monitor the heartbeat of individual applications. VMware’s API allows application developers for any type of application, even custom ones developed in-house, to hook in to VMware HA Application Monitoring to provide an extra level of protection by automatically restarting a VM if an application fails. Both features are disabled by default and need to be enabled to function. In addition, with application monitoring you need to be running an application that supports it.
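The restart decision described above boils down to two checks: has the heartbeat been missing too long, and has the VM also stopped doing I/O? The sketch below is a simplified model of that logic only. The function name and the two interval values are assumptions for illustration and should not be read as VMware's implementation or as the settings in your cluster.

```python
def should_restart_vm(
    seconds_since_heartbeat: float,
    seconds_since_io: float,
    failure_interval: float = 30.0,   # assumed heartbeat timeout, in seconds
    io_stats_interval: float = 120.0,  # assumed I/O quiet window, in seconds
) -> bool:
    """Decide whether a VM looks truly down.

    A missing heartbeat alone is not enough; the VM must also have
    shown no disk or network I/O for the whole I/O window. That second
    check is what guards against false positives.
    """
    heartbeat_lost = seconds_since_heartbeat > failure_interval
    io_quiet = seconds_since_io > io_stats_interval
    return heartbeat_lost and io_quiet


if __name__ == "__main__":
    print(should_restart_vm(45, 200))  # heartbeat lost and no I/O
    print(should_restart_vm(45, 10))   # heartbeat lost but VM still doing I/O
```

The second call shows why the I/O check matters: a busy guest whose VMware Tools service has hung would otherwise be restarted for no good reason.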

Tip 5 – Use the right tool for the job

You really need a comprehensive monitoring package that will monitor all aspects and layers of your virtual environment. Many tools today focus on specific areas such as the physical hardware or guest OS or the hypervisor. What you need are monitoring tools that can cover all your bases and also focus on your applications which are really the most critical part of your whole environment. Because of the interactions and dependencies with applications and virtual environments you also need tools that can understand them and properly monitor them so you can troubleshoot them more easily and spot bottlenecks that may choke your applications. Having a tool that can also simulate the user experience is especially important in a virtualized environment that has so many moving parts so you can monitor the application from end-to-end.

SolarWinds can provide you with the tools you need to monitor every part of your virtual environment including the applications. SolarWinds Virtualization Manager coupled with Server & Application Monitor can help ensure that you do not miss anything and that you have all the computing layers covered. SolarWinds Virtualization Manager delivers integrated VMware and Microsoft Hyper-V capacity planning, performance monitoring, VM sprawl control, configuration management, and chargeback automation to provide complete monitoring of your hypervisor.

SolarWinds Server & Application Monitor delivers agentless application and server monitoring software that provides monitoring, alerting, reporting, and server management. It only takes minutes to create monitors for custom applications and to deploy new application monitors with Server & Application Monitor’s built-in support for more than 150 applications. Server management capabilities allow you to natively start and stop services, reboot servers, and kill rogue processes. It also enables you to measure application performance from an end user’s perspective so you can monitor the user experience.

With SolarWinds Virtualization Manager and SolarWinds Server & Application Monitor you have complete coverage of your entire virtual environment from the bare metal all the way to the end user.

Jul 14 2013

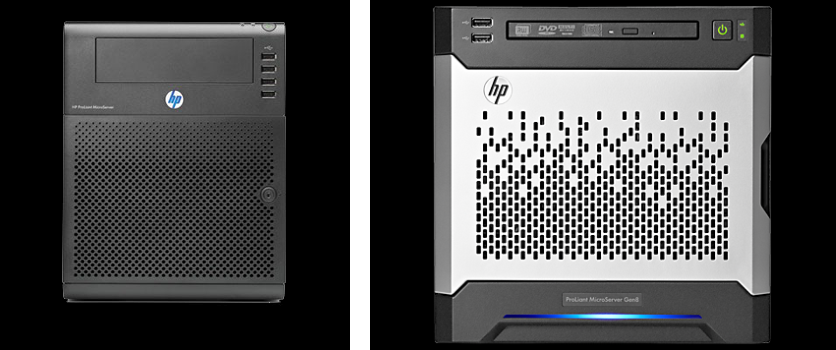

The new HP ProLiant MicroServer Gen8 – a great virtualization home lab server

I’ve always liked the small size of the HP MicroServer which makes it perfect for use in a virtualization home lab, but one area that I felt it was lacking was with the CPU. The original MicroServer came with an AMD Athlon II NEO N36L 1.3 GHz dual-core processor which was pretty weak to use with virtualization and was more suited for small ultra-notebook PCs that require small form factors and low power consumption. HP came out with an enhanced N40L model a bit later but it was just a small bump up to the AMD Turion II Neo N40L/1.5 GHz and later on to the N54L/2.2 GHz dual-core processor. I have both the N36L & N40L MicroServers in my home lab and really like them except for the under-powered CPU.

Well HP just announced a new MicroServer model that they are calling Gen8 which is in line with their current Proliant server model generation 8. The new model not only looks way cool, it also comes in 2 different CPU configurations which give it a big boost in CPU power. Unfortunately while the GHz of the CPU makes a big jump, it’s still only available in dual-core. The two new processor options are:

- Intel Celeron G1610T (2.3GHz/2-core/2MB/35W) Processor

- Intel Pentium G2020T (2.5GHz/2-core/3MB/35W) Processor

Having a Pentium processor is a big jump over the Celeron and it comes with more L3 cache. Unfortunately though, neither processor supports hyper-threading, which would show as more cores to a vSphere host. Despite this it’s still a nice bump that makes the MicroServer even better for a virtualization home lab server. Note they switched from AMD to Intel processors with the Gen8 models.

Let’s start with the cosmetic stuff on the new MicroServer. It has a radical new look (shown on the right below) which is consistent with its big brother Proliant servers. I personally think it looks pretty bad-ass cool.

Note the new slim DVD drive opening on the Gen8 instead of the full size opening on the previous model. One thing to note is that while the new Gen8 models are all depicted with a DVD drive, it does not come standard and must be purchased separately and installed. The Gen8 model is also a bit shorter, less deep and slightly wider than the old model. On the old model the HP logo would light up blue when powered on to serve as the health light, and it looks like on the new model there is a blue bar at the bottom that lights up instead. There are also only 2 USB ports on the front now instead of 4. The old model also had keys (which I always misplace) to open the front to gain access to the drives and components; it looks like on the new model they did away with that and have a little handle to open it. On the back side they have moved the power supply and fan up a bit, removed one of the PCIe slots (only 1 now), removed the eSATA port, added 2 USB 3.0 ports and added a second 1Gb NIC port. This is a nice change, especially the addition of the second NIC which makes for more vSwitch options with vSphere. I have always added a 2-port NIC to my MicroServers as they only had 1 previously so it’s nice that it comes with 2.

Inside, the unit still has 4 non-hot plug drive trays and supports up to 12TB of SATA disk (4 x 3TB). The storage controller is the HP Dynamic Smart Array B120i Controller which only supports RAID levels 0, 1 and 10. Also only 2 of the bays support 6.0Gb/s SATA drives; the other 2 support 3.0Gb/s SATA drives. There are still only 2 memory slots but they now support a maximum of 16GB (DDR3). This is another big enhancement as the previous model only supported 8GB maximum memory which limited how many VMs you could run on it. The Gen8 model also comes with a new internal microSD slot so you could boot ESXi from it if you wanted to; both the old & new still have an internal USB port as well. The server comes with the HP iLO Management Engine which is standard on all Proliant servers and is accessed through one of the NICs that does split-duty, but you have to license it to use many of the advanced features like the remote console. To license it costs a minimum of $129 for the iLO Essentials with 1 yr support which is a bit much for a home lab server that is under $500.

Speaking of cost, which has always made the MicroServer attractive for home labs, the G1610T model starts at $449 and the G2020T starts at $529. The two models are identical besides the processor and they both come standard with 2GB of memory and no hard drives. I wish they would make the memory optional as well and lower the price. If you want to go to 8GB or 16GB of memory (who doesn’t) you have to take out the 2GB DIMM that comes with it and toss it and put in 4GB or 8GB DIMMs. Here are some of the add-on options and pricing on the HP SMB Store website:

- HP 8GB (1x8GB) Dual Rank x8 PC3-12800E (DDR3-1600) Unbuffered CAS-11 Memory Kit [Add $139.00]

- HP 4GB (1x4GB) Dual Rank x8 PC3-12800E (DDR3-1600) Unbuffered CAS-11 Memory Kit [Add $75.00]

- HP 500GB 6G Non-Hot Plug 3.5 SATA 7200rpm MDL Hard Drive [Add $239.00]

- HP 1TB 6G Non-Hot Plug 3.5 SATA 7200rpm MDL Hard Drive [Add $269.00]

- HP 2TB 6G Non-Hot Plug 3.5 SATA 7200rpm MDL Hard Drive [Add $459.00]

- HP 3TB 6G Non-Hot Plug 3.5 SATA 7200rpm MDL Hard Drive [Add $615.00]

- HP SATA DVD-RW drive [Add $129.00]

- HP NC112T PCI Express Gigabit Server Adapter [Add $59.00]

- HP 4GB microSD [Add $79.00]

- HP 32GB microSD [Add $219.00]

- HP iLO Essentials including 1yr 24×7 support [$129.00]

With all the add-ons the server cost can quickly grow to over $1000, which is not ideal for a home lab server. I’d recommend heading to Newegg or Micro Center and getting parts to upgrade the server. You can get a Kingston HyperX Blu 8GB DDR3-1600 Memory Kit for $69 or a Kingston HyperX Red 16GB DDR3-1600 Memory Kit for $119.00, which is about half the cost.
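To put numbers on that, here is a quick back-of-the-envelope comparison for a 16GB G1610T build using only the prices quoted in this post. It assumes the HP route means two of the 8GB DIMMs (one per slot) and that the Kingston 16GB kit fills both slots; it only compares prices and says nothing about compatibility.

```python
# Prices quoted in this post (USD).
BASE_G1610T = 449.00
HP_8GB_DIMM = 139.00
KINGSTON_16GB_KIT = 119.00


def build_cost(base_price: float, memory_price: float) -> float:
    """Total cost of the server plus a 16GB memory upgrade."""
    return base_price + memory_price


# Assumption: 16GB from HP means two 8GB DIMMs, one per slot.
hp_total = build_cost(BASE_G1610T, 2 * HP_8GB_DIMM)
third_party_total = build_cost(BASE_G1610T, KINGSTON_16GB_KIT)
savings = hp_total - third_party_total

if __name__ == "__main__":
    print(f"HP memory:       ${hp_total:.2f}")
    print(f"Kingston memory: ${third_party_total:.2f}")
    print(f"Savings:         ${savings:.2f}")
```

The same approach works for drives and the other add-ons if you want to price out a full build before ordering.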

All in all I really like the improvements they have made with the new model and it makes an ideal virtualization home lab server that you are typically building on a tight budget. HP if you want to send me one I’d love to do a full review on it. Listed below are some links for more information and a comparison of the old MicroServer G7 N54L and the new Gen8 G2020T model so you can see the differences and what has changed.

- HP ProLiant MicroServer Gen8 product page on hp.com

- HP ProLiant MicroServer Generation 8 (Gen8) QuickSpec on hp.com

- HP ProLiant MicroServer Gen8 Data Sheet on hp.com

Comparison of the old MicroServer G7 N54L and the new Gen8 G2020T model:

| Feature | Old MicroServer G7 (N54L) | New MicroServer Gen8 (G2020T) |
| --- | --- | --- |
| Processor | AMD Turion II Model Neo N54L (2.20 GHz, 15W, 2MB) | Intel Pentium G2020T (2.5GHz/2-core/3MB/35W) |
| Cache | 2x 1MB Level 2 cache | 3MB (1 x 3MB) L3 Cache |
| Chipset | AMD RS785E/SB820M | Intel C204 Chipset |
| Memory | 2 slots - 8GB max - DDR3 1333MHz | 2 slots - 16GB max - DDR3 1600MHz/1333MHz |
| Network | HP Ethernet 1Gb 1-port NC107i Adapter | HP Ethernet 1Gb 2-port 332i Adapter |
| Expansion Slot | 2 - PCIe 2.0 x16 & x1 Low Profile | 1 - PCIe 2.0 x16 Low Profile |
| Storage Controller | Integrated SATA controller with embedded RAID (0, 1) | HP Dynamic Smart Array B120i Controller (RAID 0, 1, 10) |
| Storage Capacity (Internal) | 8.0TB (4 x 2TB) SATA | 12.0TB (4 x 3TB) SATA |
| Power Supply | One (1) 150 Watts Non-Hot Plug | One (1) 150 Watts Non-Hot Plug |
| USB ports | 2.0 Ports - 2 rear, 4 front panel, 1 internal | 2.0 Ports - 2 front, 2 rear, 1 internal; 3.0 Ports - 2 rear |
| microSD | None | One - internal |
| Dimensions (H x W x D) | 10.5 x 8.3 x 10.2 in | 9.15 x 9.05 x 9.65 in |
| Weight (Min/Max) | 13.18 lb/21.16 lb | 15.13 lb/21.60 lb |
| Acoustic Noise | 24.4 dBA (Fully Loaded/Operating) | 21.0 dBA (Fully Loaded/Operating) |

Jul 06 2013

Hell must have frozen over…

…because I actually got a VMworld session approved! I last had a VMworld session approved (2 of them) in 2010 for my Deep Dive on Virtualization and Building a Home Lab sessions. I’ve really given up hope in recent years of getting another one due to the sheer number of submissions and because VMware has so many sessions of its own now that their product offerings are so broad. This year I had one of the sessions that I submitted through HP, “The Top 10 Things you MUST know about Storage for vSphere”, approved. It is based on a presentation that I built for our VMUG user conference sponsorships this year. As part of HP’s sponsorship we were guaranteed a few session slots but my session made it on its own outside of those slots. So thank you to all who voted for my session and I look forward to seeing you at VMworld.

Jul 03 2013

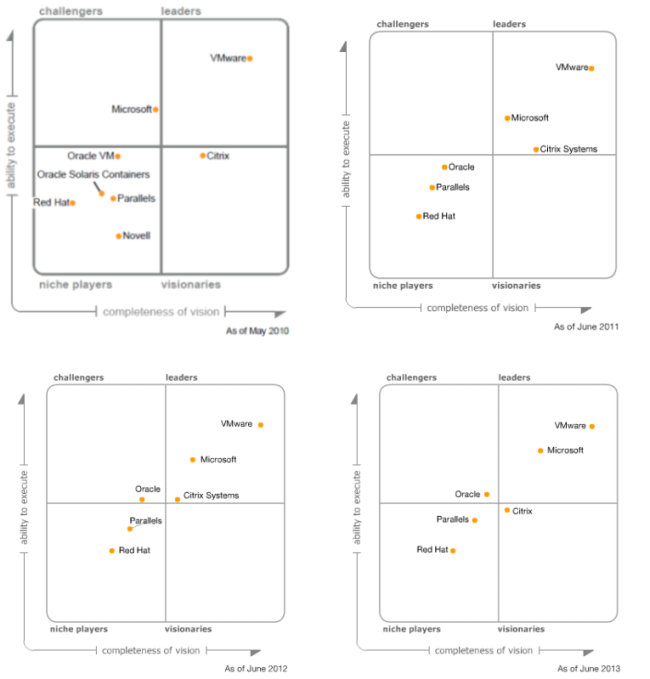

VMware & Microsoft positioning in Gartner’s Magic Quadrant over the years

Gartner just released their annual “Magic Quadrant for x86 Server Virtualization Infrastructure” report for 2013 and Microsoft continues to draw closer to VMware’s top position. If you look at the differences over the years VMware pretty much hasn’t moved and remains near the highest position that you can be in the quadrant as they lead in both their ability to execute and completeness of vision. That should really come as no surprise as VMware still is and always has been the market leader and has set the bar high in the x86 virtualization field. But Microsoft is slowly but surely closing the gap. I’d be surprised if they ever pass VMware in either of the directions given VMware’s laser focus on virtualization, but I think they are close enough for most companies to take Microsoft seriously. Also of note, the niche players are still mostly niche players and will most likely remain there for the foreseeable future as VMware & Microsoft dominate the x86 virtualization playing field. Citrix isn’t really doing much on the server virtualization side anymore and a large percentage of their client virtualization installations are on non-Citrix server hypervisor platforms like vSphere.

Jul 02 2013

Come see me speak at the Phoenix VMUG User Conference on July 11th

Last year the VMUG leaders in Phoenix invited me to present their keynote at the annual Phoenix VMUG User Conference. I love VMUGs but unfortunately had to turn it down because of conflicts and having to travel there from Denver. Well I felt bad about having to do that so I ended up just recently moving from Denver, CO to Phoenix, AZ so I could be available to do it if they asked again this year. So as a result I’ll be speaking at the Phoenix VMUG this year which takes place on Thursday, July 11th at the Desert Willow Conference Center in Phoenix. I believe I’ll be doing the morning keynote which will be based on a deck that I will be presenting at VMworld this year for a session that was approved titled “Top 10 things you MUST know about storage for vSphere”. So if you’re in the Phoenix area make sure you head on over to the VMUG website and register. I look forward to meeting my fellow VMware geeks in the valley and participating in the VMUG community here.

Jun 03 2013

Top 5 Hypervisor New Storage Enhancement Use Cases

Having largely perfected the server side of virtualization, VMware & Microsoft have turned their attention towards improving storage as they recognize the critical role that it plays in a virtual environment. VMware in particular has put a big focus on enhancing storage features in recent vSphere releases and promises to continue that trend in future releases. To understand why storage is getting so much attention now you need to look at the role storage plays in a virtual environment.

If you look at the four basic resources that are required for a VM to function (CPU, memory, network and storage), storage is different from the others in two ways. First, the other resources are all local to a host, while storage is typically accessed remotely via a network or fabric, so I/O has a long journey from the host to a storage device. Secondly, unlike the other resource types, storage is shared by many hosts so there is a lot of contention for it. As a result storage is often the limiting factor to achieving higher VM densities which is why VMware has put so much focus on storage features.

Looking at some recent storage enhancements, there are a few key areas that VMware & Microsoft are focusing on that include integration, management and software defined storage. VMware has been trying to mimic with software many of the features traditionally found inside storage arrays and has also turned towards mimicking an entire array with a virtual storage appliance. Let’s take a look at the top 5 storage enhancements from VMware & Microsoft and their use cases in a virtualized environment.

1 – Storage APIs and integration

Integration between the hypervisor and storage devices is the key to efficiency in a virtual environment. This allows the two to communicate with each other more effectively and also offloads resource intensive tasks from the hypervisor to the storage array which is better equipped to handle them. VMware has a number of APIs that they have developed under the vStorage API family that allow storage vendors to integrate directly with vSphere. The vStorage APIs for Array Integration (VAAI) provide capabilities such as offloading block zeroing and data copy as well as providing a more granular disk locking mechanism. VMware has made enhancements to VAAI to improve thin provisioning management and efficiency by allowing the hypervisor to reclaim disk blocks once data has been deleted. Microsoft has made improvements in this area as well with their Offloaded Data Transfer (ODX) and thin reclaim (UNMAP) capabilities that are built into Hyper-V & Windows Server.

2 – Storage resource controls

Because storage is a shared resource, contention for storage resources can be like the Wild West with many hosts and VMs all fighting over limited storage resources. Having a sheriff in town can help tame that contention and ensure that VMs are getting the resources they need. Features like VMware’s Storage I/O Control (SIOC) allow for centralized storage resource management so storage prioritization can be applied across all hosts connected to a datastore instead of on an individual basis. This can help ensure fairness across hosts so critical VMs get the storage resources they need and you don’t have to worry about one host hogging all the storage resources.
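One way to picture datastore-wide prioritization is as proportional shares: every VM on the datastore gets a slice of the available I/O in proportion to its share value, no matter which host it runs on. The sketch below is a simplified model of that idea and not VMware's actual SIOC algorithm. The function name, the share values and the 10,000 IOPS figure are all made up for illustration.

```python
def allocate_iops(total_iops: float, shares_by_vm: dict) -> dict:
    """Split a datastore's available IOPS among VMs in proportion to shares.

    shares_by_vm maps VM name -> share value. VMs from every host that
    uses the datastore go into the same dict, which is what makes the
    prioritization datastore-wide rather than per host.
    """
    total_shares = sum(shares_by_vm.values())
    if total_shares <= 0:
        raise ValueError("at least one VM must have a positive share value")
    return {
        vm: total_iops * shares / total_shares
        for vm, shares in shares_by_vm.items()
    }


if __name__ == "__main__":
    # Hypothetical datastore with one critical VM holding double shares.
    print(allocate_iops(10000, {"db": 2000, "web": 1000, "test": 1000}))
```

With those example values the database VM ends up with half of the I/O and the other two split the rest evenly, which is the "sheriff" behavior in a nutshell.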

3 – Non-disruptive storage migration

A virtual environment is very dynamic and VMs need mobility to ensure resources are balanced and for increased availability. VMware has had their vMotion feature for years that allows a VM on shared storage to move from one host to another when needed without any disruptions. Microsoft introduced a similar feature which they call Live Migration in Hyper-V 3.0, but unlike vMotion it did not require shared storage. VMware enhanced vMotion when they introduced Storage vMotion which allows a VM to move from one datastore to another without disruption. vMotion and Storage vMotion were two different features that operated independently and at different levels (host vs. storage). VMware finally bridged the gap between the two features in vSphere 5.1 with their latest enhancement informally called “Shared Nothing vMotion” that can move a VM to a different host and datastore in one operation. This was on par with what Microsoft had with Live Migration and provides better VM mobility especially for environments that do not have shared storage and can help make balancing workloads and shifting VMs around for scheduled maintenance much easier.

4 – Virtual Storage Appliances

Virtual storage appliances (VSAs) allow you to create a virtual shared storage device from local server disk that can be presented to any host and used in the same manner as traditional physical shared storage (NAS/SAN). While historically known as VSAs, VMware has created a new marketing term for them called Software Defined Storage that is part of their whole Software Defined Data Center vision. Today there are many VSAs on the market both from VMware and other storage vendors that offer different features and capabilities. Microsoft currently does not offer a VSA as part of Hyper-V but there are some 3rd party VSAs that work with Hyper-V. VSAs can provide many benefits and are suited for many different use cases. They are great for SMBs that want to use the many advanced virtualization features that require shared storage but don’t have the budget for a NAS or SAN device. They are also good for remote office deployments and to implement cost-effective BC/DR.

5 – Storage load balancing

Storage workloads are often very dynamic and unpredictable and being able to shift them around to better balance them helps improve performance and avoid overburdened datastores. VMware has a feature called Storage Distributed Resource Scheduler (DRS) that allows for intelligent placement of VMs that are powered on as well as the shifting of workloads from one storage resource to another whenever needed to ensure optimum performance and eliminate I/O bottlenecks. Storage DRS is similar to the auto tiering feature that is found in storage arrays but instead of working at the disk block level it works at the VM level. Storage DRS works in conjunction with other vSphere storage features, when combined, Storage DRS is the brains, Storage vMotion is the muscle and Storage I/O Control is the judge to help create a more balanced and efficient storage platform for VMs.
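The "intelligent placement" part can be sketched as a simple filter and pick: rule out datastores that would end up too full or that are already showing high latency, then choose the least utilized of what is left. This is a toy model and not Storage DRS itself. The function name, the dictionary fields and the 80% and 15 ms thresholds are assumptions chosen for the example.

```python
def pick_datastore(datastores, vm_size_gb, max_latency_ms=15.0, max_used_fraction=0.8):
    """Pick a datastore for a new VM, or return None if none qualifies.

    datastores: list of dicts with keys name, capacity_gb, used_gb and
    latency_ms. A datastore qualifies if adding the VM keeps it at or
    under max_used_fraction and its latency is at or under
    max_latency_ms. Among those, the one that would be least full wins,
    with lower latency as the tie breaker.
    """
    candidates = []
    for ds in datastores:
        used_after = (ds["used_gb"] + vm_size_gb) / ds["capacity_gb"]
        if used_after <= max_used_fraction and ds["latency_ms"] <= max_latency_ms:
            candidates.append((used_after, ds["latency_ms"], ds["name"]))
    if not candidates:
        return None
    return min(candidates)[2]


if __name__ == "__main__":
    # Hypothetical datastore cluster.
    cluster = [
        {"name": "ds1", "capacity_gb": 1000, "used_gb": 700, "latency_ms": 5},
        {"name": "ds2", "capacity_gb": 1000, "used_gb": 300, "latency_ms": 20},
        {"name": "ds3", "capacity_gb": 2000, "used_gb": 800, "latency_ms": 8},
    ]
    print(pick_datastore(cluster, vm_size_gb=100))
```

In this example ds2 has the most free space but is ruled out on latency, which shows why you want both space and I/O load taken into account rather than just picking the emptiest datastore.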

As the hypervisor management tools take over and automate more and more tasks, it becomes harder to maintain visibility between the virtual and storage resources. As a result, having good virtualization management and monitoring software for your virtualization and storage resources is critical. Because storage is such an important part of a virtual environment you can’t afford to make any assumptions about your storage resources. Products like SolarWinds Virtualization Manager and Storage Manager can help you proactively monitor and analyze the performance and capacity of your storage resources. With configurable real-time dashboards you can easily stay on top of storage trends and identify any problem areas that may be occurring. Together, SolarWinds Virtualization Manager and Storage Manager can help you keep a sharp eye on the performance and capacity of your entire physical and virtual storage infrastructure, from VM to spindle!

Apr 30 2013

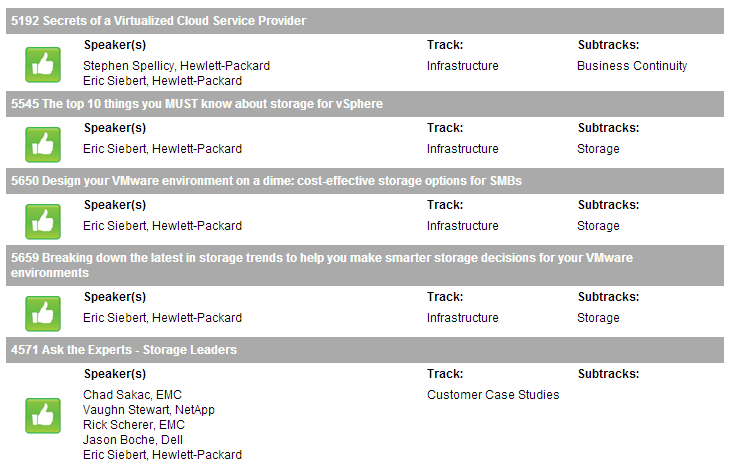

VMworld session public voting – vote early and vote often

Well maybe not often, that’s the way they used to do it in Chicago in the old days. I did want to make a plea to get your votes for my sessions at VMworld. I have several I submitted as part of HP and I promise to make them as technical as possible. My Top 10 things you must know about storage for vSphere session is crammed full of technical info, so much so that I’ve had to trim it down twice as it could easily go over an hour.

Before I highlight my sessions I wanted to mention how crazy it’s gotten trying to get session submissions approved for VMworld. Back in the ole days (2008) when I first presented at VMworld, there weren’t a lot of bloggers, there were a lot fewer partners and VMware had a lot fewer products. Fast forward to today and there are hundreds of bloggers (200+), hundreds of partners and VMware has a boatload of products. As a result they get a crazy amount of submissions each year and with very limited session slots it’s extremely difficult to get one. What really impacts it the most is that VMware has gotten so big with so many products that they need a lot of session slots to cover them all. That doesn’t leave much for partners, who are guaranteed some slots, and that leaves hardly any for bloggers. It’s a shame as there are so many great session submissions each year but there are so few slots to fit them in.

Which brings me to the public voting. VMware lets public voting have an influence on which sessions get approved. Public voting is just one of the methods used to approve sessions; a big part of it is the private judging done by the content committee. With the content committee, every session is given a look over and scored by the committee. Where it becomes extremely difficult is with public voting. There are so many sessions on the ballot that it quickly becomes overwhelming and nobody has time to go through them one by one. Therefore many people skim through them or look for specific sessions that they know about. What becomes really important is the title of your session, which is essentially the curb appeal that draws people in to take a closer look at your session. Many people don’t get past the titles and only look at interesting and catchy titles. When you are confronted with over 1200 sessions to vote on, you simply don’t have the time to look at them all. What I find that helps a bit is filtering on tracks, keywords or speaker names. It’s still a difficult task though. Imagine voting for over 1200 people running for president, it’s just too much.

So with that I’d like to point out my sessions and let you take a look and see if you judge them worthy of your vote. I appreciate your consideration and vote or no vote I look forward to seeing you at VMworld anyway. Even if you don’t vote for me you should still get out there and vote.