Author's posts

Oct 10 2013

No vMSC certification for vSphere 5.5 yet

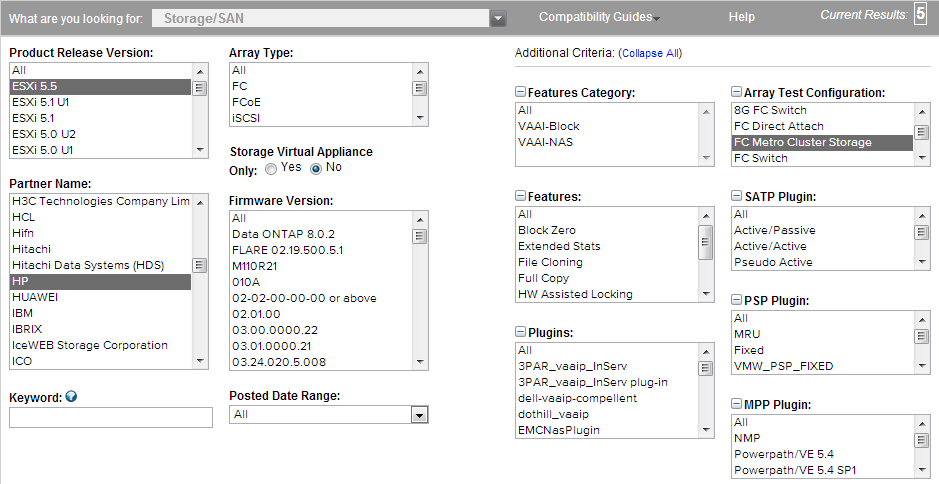

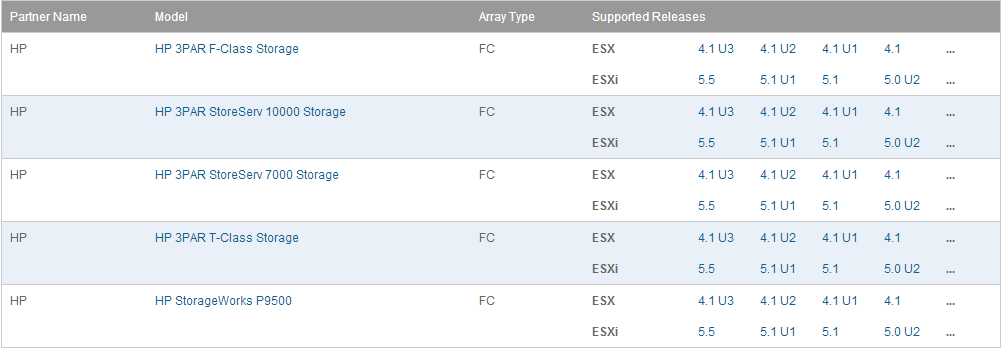

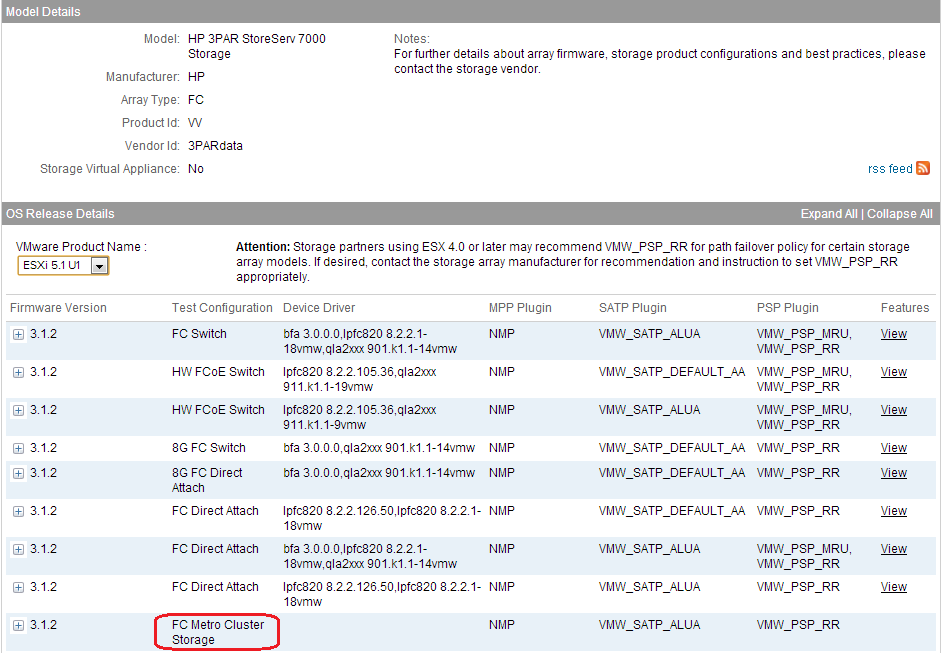

The other day someone asked me if 3PAR was certified for vMSC with vSphere 5.5. So naturally I went to the VMware HCL and checked by selecting ESXi 5.5 as the version, HP as the vendor and FC Metro Cluster Storage as the array test configuration.

When I searched I got back 5 results, which made me think it was certified for vMSC with ESXi 5.5. However, after checking with the product teams, I learned that a full storage re-certification was required for ESXi 5.5: while the arrays were certified for ESXi 5.5, separate vMSC testing with ESXi 5.5 still needed to be done. So despite the HCL returning results for vMSC and ESXi 5.5, the arrays are not actually certified for vMSC with ESXi 5.5. Note that this behavior applies to any partner you select; when I selected EMC and FC-SVD Metro Cluster Storage, it returned one result for ESXi 5.5, just as it does if you select ESXi 5.1 U1.

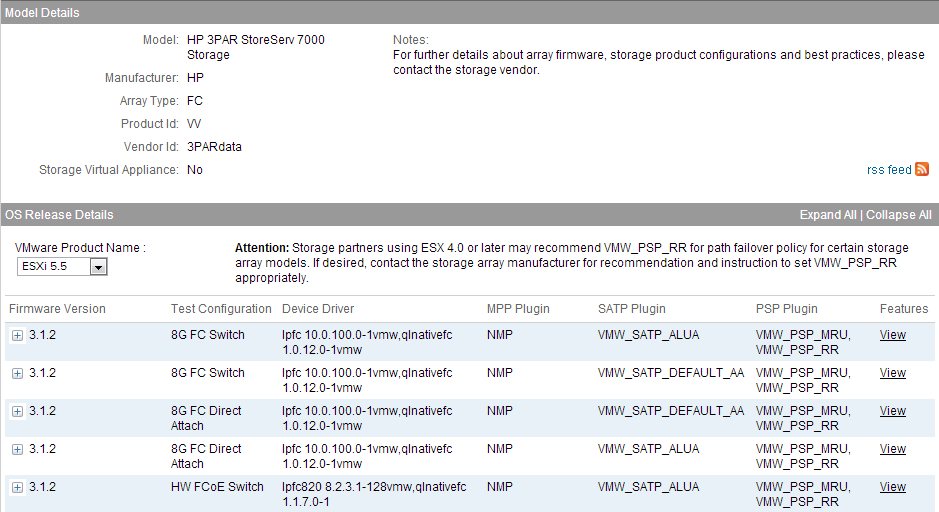

Looking further at the HCL, you can tell this because the FC Metro Cluster Storage entry is missing from the OS Release Details for ESXi 5.5.

If you switch the version to ESXi 5.1 Update 1 you’ll see the entry indicating it is certified for vMSC.

After checking further with VMware, it appears that there was no Day 0 support for vMSC in vSphere 5.5, which means that no arrays are certified for vMSC with vSphere 5.5. The reason is that VMware has not yet completed what is needed for vMSC re-certification testing with vSphere 5.5. They do not expect to have this ready until November or December, at which time partners can begin the re-certification process for vSphere 5.5. As a result, you probably will not see any arrays actually certified for vMSC with vSphere 5.5 until next year.

Sep 30 2013

New SolarWinds Virtualization Manager 6.0 integrated management

I recently wrote about the challenges of management in heterogeneous virtual environments and how tools from vendors like SolarWinds can help overcome them. One of the keys to a good management product is that it doesn't create more management silos and can cover the full spectrum of your virtual environment. SolarWinds has many management products that cover every inch of your virtual environment, from applications to hypervisors to servers, storage and networking. While having that end to end coverage from a single vendor can eliminate management headaches, wouldn't it be nice if those tools could all integrate with each other to provide even better, more unified management?

Consider the following common scenario in a virtual environment: users are reporting that an application running inside a VM is responding very slowly. Where do you typically start? You look at the application and operating system performance monitors and see that it's performing poorly there. What next? It must be a resource problem, but is it a problem with virtual or physical resources? I'll start by looking at my virtual resources since they are closer to the VM, but virtual resources are closely tied to physical resources, so I'll need to investigate both. My physical resources are spread across different hardware areas, so I'll need to look at server, storage and networking resources independently. This quest to find the root cause can have me jumping through many different management tools, and if they don't interact with each other I can't follow the trail across them, which can make solving the problem extremely difficult.

Now if you have tools that can talk to each other, you can more easily follow the breadcrumbs across tools and have visibility into the whole chain from app to bare metal. Instead of manually piecing the puzzle together while following an I/O generated inside a VM through all the layers to its final destination on a physical storage device, you can see it at a glance, using individual tools that specialize in a specific area but work together with tools that cover other areas. This is a big deal in a virtual environment, where so many moving parts make it easy to get lost, hit dead ends and have to start over. You need a single pane of glass to look through so you're not working blindly with tools that can't show you the end to end view of your entire virtual environment.

The latest release of SolarWinds' Virtualization Manager does just that: it brings together two tools that focus on different areas, one inside a VM and the other outside of it. Virtualization Manager is a great management tool focused on providing the best possible management and monitoring for virtual environments, including the hypervisor and physical resources. SolarWinds' Server and Application Monitor, on the other hand, specializes in management inside a VM at the guest OS and application layers. With the Virtualization Manager 6.0 release, SolarWinds has integrated it with Server and Application Monitor so the two can share information and you get a single pane of glass that provides end to end visibility.

While that combination is a big win that makes management much easier, it gets even better. Together Virtualization Manager and Server and Application Monitor cover the management of apps, guest OSs, servers and the hypervisor, but what about my network and storage resources? In a virtual environment those are two very critical resources that are often physically separate from server resources. Virtualization Manager has that covered as well with integration with both SolarWinds Network Performance Monitor and SolarWinds Storage Manager. This integration completes the picture and ties together every single layer and resource that exists in a virtual environment. You now have the fusion of four great management tools, each with a specific focus area, into one great tool that is both broad and deep for maximum visibility into your virtual environment.

So I encourage you to check out SolarWinds Virtualization Manager and all the other great management tools SolarWinds has to offer that make management in any virtual environment, including heterogeneous ones, simple, easy and, more importantly, complete. To find out more about this new Virtualization Manager integration, check out the resources below, which may leave you with a strong feeling of management tool envy!

- SolarWinds Virtualization Manager and Server & Application Monitor Integration video

- Virtualization Manager 6.0 Federal Webcast

- Systems Management Demo showing Server and Application Monitor and Virtualization Manager integration (under the “Virtualization” tab)

- Virtualization Manager with SAM and NPM integration blog post

- Virtualization Manager and Storage Manager integration blog post

- Virtualization Manager and Server and Application Monitor blog post

Sep 24 2013

Welcome to HyTrust as a vSphere-land sponsor

I’d like to welcome HyTrust as a new sponsor of vSphere-land. While they might be a new sponsor here, HyTrust and I actually go way back to the days when I was a judge for the Best of VMworld awards. In 2009 I judged the security category for Best of VMworld; I've always had a fondness for security, so I really enjoyed judging that category. Back then virtualization security was still a relatively new area and didn't get as much focus as it does today. I remember going through the motions of judging at VMworld, visiting each vendor in the category, asking questions and learning about their products.

HyTrust was actually my last stop of the day before we had to turn everything in, and I was short on time when I visited them and talked with their president, Eric Chiu. That didn't matter, as it didn't take long for me to figure out that they had a pretty special product that really stood out from the other security products I had seen that day. Most of the other security products I had seen were doing basically the same thing in slightly different ways. HyTrust's approach to security, on the other hand, was completely different and made my decision for the category winner very easy. They impressed me and the other judges so much that we also chose them as Best of Show among all the other category winners.

I wrote a follow-up article on HyTrust for searchvmware.com describing their solution in more detail and also mentioned them as a solution in my article How to Steal a VM in 3 Easy Steps. But don't take my word for it; check them out and decide for yourself. It's not easy being a virtualization startup, and I recently read a great article on Eric Chiu and HyTrust that described the behind-the-scenes financial dealings that help fund startups in their quest to be successful. Startups always face challenges and many fail, but HyTrust has ridden the virtualization wave and has not faltered. Virtualization security has never been more important than it is today, and there will always be a need for good solutions to secure virtual environments, so be sure to give HyTrust a look. They've been in the news recently with the latest version of their product and were also named one of the 10 recent tech investments to watch.

Sep 23 2013

Configuration maximums that changed between vSphere 5.1 and 5.5

Below is a table of all the configuration maximums that changed between the vSphere 5.1 release and the vSphere 5.5 release. For some rows the vSphere 6.0 value is also listed.

| Maximum | Type | Category | vSphere 5.1 | vSphere 5.5 | vSphere 6.0 |
|---|---|---|---|---|---|
| Virtual disk size | VM | Storage | 2TB minus 512 bytes | 62TB | 62TB |
| Virtual SATA adapters per VM | VM | I/O Devices | NA | 4 | 4 |
| Virtual SATA devices per virtual SATA adapter | VM | I/O Devices | NA | 30 | 30 |
| Logical CPUs per host | Host | Compute | 160 | 320 | 480 |
| NUMA Nodes per host | Host | Compute | 8 | 16 | 16 |
| Virtual CPUs per host | Host | Compute | 2048 | 4096 | |
| Virtual CPUs per core | Host | Compute | 25 | 32 | |
| RAM per host | Host | Compute | 2TB | 4TB | |
| Swap file size | Host | Compute | 1TB | NA | |
| VMFS5 - Raw Device Mapping size (virtual compatibility) | Host | Storage | 2TB minus 512 bytes | 62TB | |
| VMFS5 - File size | Host | Storage | 2TB minus 512 bytes | 62TB | |
| e1000 1Gb Ethernet ports (Intel PCI-X) | Host | Networking | 32 | NA | |
| forcedeth 1Gb Ethernet ports (NVIDIA) | Host | Networking | 2 | NA | |
| Combination of 10Gb and 1Gb Ethernet ports | Host | Networking | Six 10Gb and Four 1Gb ports | Eight 10Gb and Four 1Gb ports | |
| mlx4_en 40Gb Ethernet Ports (Mellanox) | Host | Networking | NA | 4 | |
| SR-IOV Number of virtual functions | Host | VMDirectPath | 32 | 64 | |
| SR-IOV Number of 10G pNICs | Host | VMDirectPath | 4 | 8 | |
| Maximum active ports per host (VDS and VSS) | Host | Networking | 1050 | 1016 | |
| Port groups per standard switch | Host | Networking | 256 | 512 | |
| Static/Dynamic port groups per distributed switch | Host | Networking | NA | 6500 | |
| Ports per distributed switch | Host | Networking | NA | 60000 | |
| Ephemeral port groups per vCenter | Host | Networking | 256 | 1016 | |
| Distributed switches per host | Host | Networking | NA | 16 | |
| VSS portgroups per host | Host | Networking | NA | 1000 | |
| LACP - LAGs per host | Host | Networking | NA | 64 | |
| LACP - uplink ports per LAG (Team) | Host | Networking | 4 | 32 | |
| Hosts per distributed switch | Host | Networking | 500 | 1000 | |
| NIOC resource pools per vDS | Host | Networking | NA | 64 | |
| Link aggregation groups per vDS | Host | Networking | 1 | 64 | |
| Concurrent vSphere Web Clients connections to vCenter Server | vCenter | Scalability | NA | 180 | |
| Hosts (with embedded vPostgres database) | vCenter Appliance | Scalability | 5 | 100 | |
| Virtual machines (with embedded vPostgres database) | vCenter Appliance | Scalability | 50 | 3000 | |
| Hosts (with Oracle database) | vCenter Appliance | Scalability | NA | 1000 | |
| Virtual machines (with Oracle database) | vCenter Appliance | Scalability | NA | 10000 | |
| Registered virtual machines | vCloud Director | Scalability | 30000 | 50000 | |
| Powered-On virtual machines | vCloud Director | Scalability | 10000 | 30000 | |
| vApps per organization | vCloud Director | Scalability | 3000 | 5000 | |
| Hosts | vCloud Director | Scalability | 2000 | 3000 | |
| vCenter Servers | vCloud Director | Scalability | 25 | 20 | |
| Users | vCloud Director | Scalability | 10000 | 25000 | |

Sep 22 2013

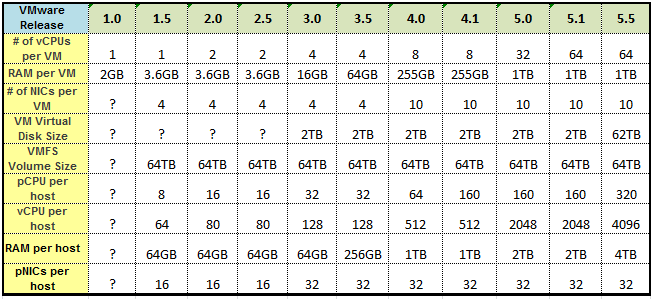

The Monster VM didn’t get any smarter in vSphere 5.5 but it got a whole lot fatter

If you look at the VM resource configuration maximums over the years, there have been some big jumps in the sizes of the 4 main resource groups with each major vSphere release. With vSphere 5.0 the term “Monster VM” was coined, as the number of virtual CPUs and the amount of memory that could be assigned to a VM took a big jump, from 8 vCPUs to 32 vCPUs and from 255GB of memory to 1TB. The number of vCPUs further increased to 64 with the vSphere 5.1 release.

One resource that has stayed the same over the releases, going back all the way to vSphere 3.0 (and beyond?), is the virtual disk size, which has been limited to 2TB. With the release of vSphere 5.5, the maximum vCPUs and memory have stayed the same, but the size of a virtual disk has finally been increased to 62TB. That increase has been long awaited, as previously the only way to use bigger disks with a VM was to use an RDM. So while the Monster VM may not have had its brains increased in this release, the amount it can pack away in its belly sure got a whole lot bigger!
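To make the jump concrete, here is a small Python sketch that expresses both virtual disk limits in bytes and checks whether a requested disk size fits. It assumes binary units (1TB = 2^40 bytes), which is how the "2TB minus 512 bytes" figure is normally derived; the function name `fits_in_vmdk` and the 10TB example disk are hypothetical, for illustration only.

```python
# Virtual disk (VMDK) size limits quoted in this post, expressed in bytes.
# Assumption: binary units, i.e. 1TB = 2**40 bytes.
TB = 2 ** 40

VMDK_MAX_BYTES = {
    "5.1": 2 * TB - 512,  # "2TB minus 512 bytes"
    "5.5": 62 * TB,       # "62TB"
}


def fits_in_vmdk(size_bytes: int, version: str) -> bool:
    """Return True if a virtual disk of size_bytes is within the limit for version."""
    return size_bytes <= VMDK_MAX_BYTES[version]


if __name__ == "__main__":
    wanted = 10 * TB  # a hypothetical 10TB data disk
    for version, limit in VMDK_MAX_BYTES.items():
        print(f"vSphere {version}: limit {limit} bytes, 10TB fits: {fits_in_vmdk(wanted, version)}")
```

On vSphere 5.1 a disk like that would have needed an RDM; on 5.5 it fits in a plain virtual disk.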

Sep 22 2013

Come and get your vSphere 5.5

vSphere 5.5 is now available for your downloading pleasure here. While you’re waiting for it to download, be sure to check out my huge (and growing) vSphere 5.5 link collection to get all the info you need on how to use it. This is also the shortest release cycle from a previous version that VMware has done to date, at 377 days from the release of vSphere 5.1. Also don’t forget the documentation: the first document I always check out is the latest Configuration Maximums document, to see how much larger everything continues to grow.
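The 377-day figure is easy to check with Python's `datetime`. The GA dates below are assumptions taken from VMware's release notes (ESXi 5.1 on 10 Sep 2012, ESXi 5.5 on 22 Sep 2013); they are not stated in this post.

```python
from datetime import date

# Assumed GA dates (from VMware release notes, not from this post).
VSPHERE_51_GA = date(2012, 9, 10)
VSPHERE_55_GA = date(2013, 9, 22)

# Subtracting two dates gives a timedelta; .days is the whole-day difference.
cycle_days = (VSPHERE_55_GA - VSPHERE_51_GA).days
print(f"vSphere 5.1 to 5.5 release cycle: {cycle_days} days")  # prints 377
```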

Sep 17 2013

Top 5 Tips for Managing Heterogeneous Virtual Environments

Many virtual environments lack consistency and uniformity and are often made up of a diverse mix of hardware and software components. This diversity could include multiple vendors for hardware components as well as hypervisors from different vendors. Since budgets play a big role in determining the hardware and software used in a virtual environment, oftentimes there will be a variety of equipment from different vendors. In other cases customers want to use best of breed hardware and software, which typically means going to different vendors to get the configuration they desire.

For hardware it’s not uncommon to see different hardware vendors used across servers, storage and networking, and sometimes different hardware vendors within the same resource group (e.g. storage). When it comes to hypervisor software, the most commonly used is VMware vSphere, but it’s not uncommon to see Microsoft’s Hyper-V inside the same data center, as a majority of customers use Microsoft for their server OS, which gives them access to Hyper-V. Another common mix for client virtualization is running Citrix XenDesktop on top of VMware vSphere. Whatever the reasons for choosing different vendors to build out a virtual environment, it’s rare to find a data center that has the same brand of hardware across servers, storage and networking and only a single hypervisor platform running on it.

As a result of this melting pot of different hardware and hypervisors, managing these heterogeneous virtual environments can become extremely difficult as management tools tend to be specific to a hardware or hypervisor platform. Having so many management tool silos can add complexity, increase administration overhead and increase costs. To help offset that we’ll cover 5 tips for managing heterogeneous virtual environments so you can do it more effectively.

1 – Group similar hardware together for maximum effectiveness

If you are going to use a mix of different brands and hardware models you can’t just throw it all together and expect it to work effectively and efficiently. Every hardware platform has its own quirks and nuances and because virtualization is very picky about physical hardware you should group similar hardware together whenever possible. When it comes to servers this is usually done at the cluster level so features like vMotion and Live Migration that move VMs from host to host can ensure CPU compatibility. Because AMD & Intel CPUs use different architectures you cannot move a running VM across hosts with different processor vendors. There are also limitations on doing this within processor families of a single CPU vendor that you need to be careful of. Some of these can be overcome using features like VMware’s Enhanced vMotion Compatibility (EVC) but it can still cause administration headaches.
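As a rough planning aid, the Python sketch below groups a host inventory into candidate clusters keyed by CPU vendor and CPU family, so that a running VM could in principle move between any two hosts in the same group. It deliberately simplifies the real rules: it ignores EVC baselines and per-feature CPU checks, and the `Host` type, host names and family labels are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str
    cpu_vendor: str  # e.g. "Intel" or "AMD"
    cpu_family: str  # hypothetical family label, e.g. "Sandy Bridge"


def can_live_migrate(src: Host, dst: Host) -> bool:
    """Simplified rule: same CPU vendor AND same CPU family (EVC ignored)."""
    return (src.cpu_vendor, src.cpu_family) == (dst.cpu_vendor, dst.cpu_family)


def group_for_vmotion(hosts: list[Host]) -> dict[tuple[str, str], list[str]]:
    """Group host names into candidate clusters keyed by (vendor, family)."""
    groups: dict[tuple[str, str], list[str]] = defaultdict(list)
    for host in hosts:
        groups[(host.cpu_vendor, host.cpu_family)].append(host.name)
    return dict(groups)


if __name__ == "__main__":
    inventory = [
        Host("esx01", "Intel", "Sandy Bridge"),
        Host("esx02", "Intel", "Sandy Bridge"),
        Host("esx03", "Intel", "Ivy Bridge"),
        Host("esx04", "AMD", "Opteron 6200"),
    ]
    for key, names in group_for_vmotion(inventory).items():
        print(key, names)
    print(can_live_migrate(inventory[0], inventory[3]))  # False: Intel -> AMD
```

vCenter still performs the actual compatibility checks at migration time; a grouping like this only helps decide which hosts belong in the same cluster in the first place.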

Shared storage can be intermixed more easily, as many storage features built into the hypervisor, like thin provisioning and Storage vMotion, work independently of the underlying physical storage hardware. With storage you can use arrays from different vendors side by side in a virtual environment without any issues, but you should be aware of the differences and limitations between file-based (NAS) and block-based (SAN) arrays. You can use file and block arrays together in a virtual environment, but due to protocol and architecture differences you may run into issues with feature support and integration across file and block arrays. Therefore you should try to group similar storage arrays together as well, so you can take advantage of features and integration that may only work within a storage array product family and within a storage protocol. By doing some planning up front and grouping similar hardware together, you can increase efficiency, improve management and avoid incompatibility problems.

2 – Try and stick to one hypervisor platform for your production environment

I’ve seen surveys that say more than 65% of companies that have deployed virtualization are using multiple hypervisors. That number seems pretty high and what I question is how those companies are deploying multiple hypervisors. Someone taking a survey that has 100 vSphere hosts and 1 Hyper-V host can say they are running multiple hypervisors but the reality is they are using one primary hypervisor. When you start mixing hypervisors together for whatever reason you run into all sorts of issues that can have a big impact on your virtual environment. The biggest issues with this tend to be in these areas:

- Training – You have to make sure your staff is continually trained on 2 different hypervisor platforms

- Support – You need to have support contracts in place for 2 different hypervisor platforms

- Interoperability – There is some cross-hypervisor interoperability using conversion tools, but since the hypervisors use different disk formats this can be cumbersome

- Management – Both VMware & Microsoft have tried to implement management of the other platform into their native management tools but it is very limited and not all that usable

- Costs – You are doubling your costs for training, support, management tools, etc.

As a result, it makes sense to use one hypervisor as the primary platform for most of your production environment. It’s OK to have one-offs and pockets here and there with a secondary hypervisor, but try to limit them.

3 – Make strategic use of a secondary hypervisor platform

Sometimes it may make sense financially to intermix hypervisor platforms in your data center for specific use cases. Using alternative lower cost or free hypervisor platforms can help increase your use of virtualization while keeping costs down. If you do utilize a mix of hypervisors in your data center do it strategically to avoid complications and some of the issues I mentioned in the previous tip. Here are some suggestions for strategically using multiple hypervisor platforms together:

- Use one primary hypervisor platform for production and a secondary for your development and test environments. You may consider using the same hypervisor for production and test though so you can test for issues that may occur from running an application on a specific hypervisor before it goes into production.

- Create tiers based on application type and how critical it is. This can be further defined by the level of support that you have for each hypervisor. If you have 24×7 support on one and 9×5 support on another you’ll want to make sure you have all your critical apps running on the hypervisor platform with the best support contract.

- If you have remote offices you might consider having a primary hypervisor platform at your main site and using an alternate hypervisor at your remote sites.

These are just some of the logical ways to divide and conquer with a mix of hypervisors; you may find other ways that work better for you and give you the benefits of both platforms without the headaches.

4 – Understand the differences and limitations of each platform

Whether you’re using different hardware or hypervisor platforms, you need to know the capabilities and limitations of each platform, which can impact availability, performance and interoperability. Each hypervisor platform tends to have its own disk format, so you cannot easily move VMs across hypervisor platforms if needed. When it comes to features, there are usually some requirements for using them, and despite being similar they tend to be proprietary to each hypervisor platform.

When it comes to hardware, CPU compatibility among hosts is a big one, because moving a running VM from one host to another using vMotion or Live Migration requires both hosts to have CPUs from the same manufacturer (i.e. Intel or AMD) and of the same architecture (CPU family). With hypervisors, there are some features unique to particular platforms, like the power saving features built into vSphere. Knowing the capabilities and limitations of the hardware and hypervisors that you use can help you strategically plan how to use them together more efficiently and help avoid compatibility problems.

5 – Leverage tools that can manage your environment as a whole

Managing heterogeneous environments can often be quite challenging as you have to switch between many different management tools that are specific to hardware, applications or a hypervisor platform. This can greatly increase administration overhead and decrease efficiency as well as limit the effectiveness of monitoring and reporting. When you have management silos in a data center you lack the visibility across the environment as a whole which can create unique challenges as virtual environments demand unified management. Compounding the problem is the fact that native hypervisor tools are only designed to manage a specific hypervisor so you need separate management tools for each platform. Some of the hypervisor vendors have tried to extend management to other hypervisor platforms but they are often very limited and more designed to help you migrate from a competing platform. The end result is a management mess that can cause big headaches and fuels the need for management tools that can operate at a higher level and that can bridge the gap between management silos.

SolarWinds delivers management tools that can stretch from apps to bare metal and cover every area of your virtual environment. This gives you one management tool that can manage multiple hypervisor platforms and also provide end to end visibility from the apps running in VMs to the physical hardware they reside on. Tools like SolarWinds Virtualization Manager deliver integrated VMware and Microsoft Hyper-V capacity planning, performance monitoring, VM sprawl control, configuration management, and chargeback automation, all in one affordable product that’s easy to download, deploy, and use. Take it even further by adding SolarWinds Storage Manager and Server and Application Monitor, and you have a complete management solution from a single vendor that covers all your bases and creates a melting pot for your management tools to come together under a unified framework.

Sep 09 2013

Best Practices for running vSphere on iSCSI

VMware recently updated a paper that covers Best Practices for running vSphere on iSCSI storage. This paper is similar in nature to the Best Practices for running vSphere on NFS paper that was updated not too long ago. VMware has tried to involve their storage partners in these papers and reached out to NetApp & EMC to gather their best practices to include in the NFS paper. They did something similar with the iSCSI paper by reaching out to HP and Dell who have strong iSCSI storage products. As a result you’ll see my name and Jason Boche’s in the credits of the paper but the reality is I didn’t contribute much to it besides hooking VMware up with some technical experts at HP.

So if you’re using iSCSI, be sure to give it a read; if you’re using NFS, be sure to give that one a read; and don’t forget to read VMware’s vSphere storage documentation that is specific to each product release.