Author's posts

Nov 23 2016

How will VM Encryption in vSphere 6.5 impact performance?

VMware finally introduced native VM-level encryption in vSphere 6.5, which is a welcome addition, but better security always comes at a cost, and with encryption that cost is additional resource overhead that could impact performance. Overall I think VMware did a very good job integrating encryption in vSphere: they leveraged Storage Policy Based Management (SPBM) and the vSphere APIs for I/O Filtering (VAIO) to seamlessly integrate encryption into the hypervisor. Prior to vSphere 6.5 you didn’t see VAIO used for much more than I/O caching, but encryption is a perfect use case for it, as VAIO allows direct integration right at the VM I/O stream.

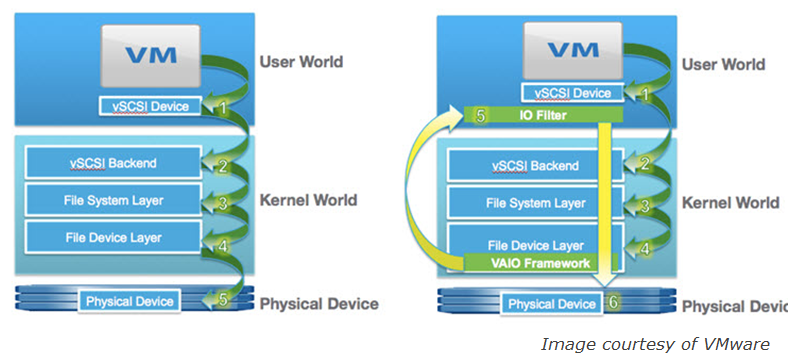

So let’s take a closer look at where the I/O filtering occurs with VAIO. Normally storage I/O initiates at the VM’s virtual SCSI device (User World) and then makes its way through the VMkernel before heading on to the physical I/O adapter and the physical storage device. With VAIO the filtering is done close to the VM in the User World, with the rest of the VAIO framework residing in the VMkernel, as shown in the figure below. On the left is the normal I/O path without VAIO and on the right is the path with VAIO:

When an I/O goes through the filter there are several actions that an application can take on each I/O, such as fail, pass, complete or defer it. The action taken will depend on the application’s use case: a replication application may defer I/O to another device, while a caching application may already have a read request cached, so it would complete the request instead of sending it on to the storage device. With encryption it would presumably defer the I/O to the encryption engine to be encrypted before it is written to its final destination storage device.
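To make the four actions a bit more concrete, here is a minimal Python sketch of how a filter might decide what to do with each I/O. This is purely illustrative and is not the VAIO API: the `Action`, `IORequest`, `caching_filter` and `encryption_filter` names are all made up for this example.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable


class Action(Enum):
    """The four per-I/O outcomes named in the text (hypothetical names)."""
    FAIL = auto()
    PASS = auto()
    COMPLETE = auto()
    DEFER = auto()


@dataclass
class IORequest:
    op: str            # "read" or "write"
    block: int         # logical block address
    data: bytes = b""  # payload for writes, filled in for completed reads


def caching_filter(cache: dict) -> Callable[[IORequest], Action]:
    """Complete reads that are already cached; pass everything else down."""
    def handle(io: IORequest) -> Action:
        if io.op == "read" and io.block in cache:
            io.data = cache[io.block]
            return Action.COMPLETE  # never reaches the storage device
        return Action.PASS
    return handle


def encryption_filter(io: IORequest) -> Action:
    """Defer writes so they can be encrypted before reaching storage.

    Read-side decryption is left out of this sketch.
    """
    return Action.DEFER if io.op == "write" else Action.PASS
```

A cached read returns `Action.COMPLETE` without touching storage, while every write through the encryption filter returns `Action.DEFER`, which is where the extra work (and the extra CPU cost discussed below) comes from.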

So there are definitely a few more steps that must be taken before encrypted data is written to disk. How will that impact performance? VMware did some testing and published a paper on the performance impact of using VM encryption. Performing encryption is mostly CPU intensive, as you have to do complicated math to encrypt data. The type of storage that I/O is written to plays a factor as well, but not in the way you would think. With conventional spinning disk there is actually less performance impact from encryption compared to faster disk types like SSDs and NVMe. The reason is that the faster data is written to disk, the harder the CPU has to work to keep up with the I/O throughput.
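The reasoning above can be sketched as a toy model in which throughput is capped by whichever is slower, the storage device or the CPU doing the encryption. All of the numbers here are made-up assumptions for illustration only; they are not taken from VMware's paper.

```python
def effective_throughput(storage_mbps: float, cores: int,
                         encrypt_mbps_per_core: float) -> float:
    """Throughput is limited by the slower of the device and the CPU."""
    cpu_limit = cores * encrypt_mbps_per_core
    return min(storage_mbps, cpu_limit)


def slowdown_pct(storage_mbps: float, cores: int,
                 encrypt_mbps_per_core: float) -> float:
    """Percentage of the device's raw throughput lost to the CPU bottleneck."""
    achieved = effective_throughput(storage_mbps, cores, encrypt_mbps_per_core)
    return 100.0 * (1 - achieved / storage_mbps)


# Hypothetical figures: 2 cores that can each encrypt 500 MB/s.
print(slowdown_pct(150, 2, 500))   # slow spinning disk: the disk is the limit
print(slowdown_pct(2000, 2, 500))  # fast NVMe device: the CPU is the limit
```

With these assumed values the slow disk loses nothing, because the CPU easily keeps up with it, while the fast device is held back to what the CPU can encrypt. That is the counter-intuitive pattern described above: the faster the storage, the more visible the encryption overhead.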

The configuration VMware tested was running on Dell PowerEdge R720 servers with two 8-core CPUs, 128GB memory and both Intel SSD (36K IOPS Write/75K IOPS Read) and Samsung NVMe (120K IOPS Write/750K IOPS Read) storage. Testing was done with Iometer using both sequential and random workloads. Below is a summary of the results:

- 512KB sequential write results for SSD – little impact on storage throughput and latency, significant impact on CPU

- 512KB sequential read results for SSD – little impact on storage throughput and latency, significant impact on CPU

- 4KB random write results for SSD – little impact on storage throughput and latency, medium impact on CPU

- 4KB random read results for SSD – little impact on storage throughput and latency, medium impact on CPU

- 512KB sequential write results for NVMe – significant impact on storage throughput and latency, significant impact on CPU

- 512KB sequential read results for NVMe – significant impact on storage throughput and latency, significant impact on CPU

- 4KB random write results for NVMe – significant impact on storage throughput and latency, medium impact on CPU

- 4KB random read results for NVMe – significant impact on storage throughput and latency, medium impact on CPU

As you can see, there isn’t much impact on SSD throughput and latency, but with the more typical 4KB workloads the CPU overhead is moderate (30-40%), with slightly more overhead on reads compared to writes. With NVMe storage there is a lot of impact to storage throughput and latency overall (60-70%), with moderate impact to CPU overhead (50%) with 4KB random workloads. The results varied a little bit based on the number of workers (vCPUs) used.

They also tested with vSAN using a hybrid configuration consisting of 1 SSD & 4 10K drives. Below is a summary of those results:

- 512KB sequential read results for vSAN – slight-small impact on storage throughput and latency, small-medium impact on CPU

- 512KB sequential write results for vSAN – slight-small impact on storage throughput and latency, small-medium impact on CPU

- 4KB sequential read results for vSAN – slight-small impact on storage throughput and latency, slight impact on CPU

- 4KB sequential write results for vSAN – slight-small impact on storage throughput and latency, small-medium impact on CPU

The results with vSAN varied a bit based on the number of workers used. With fewer workers (1) there was only a slight impact; as you added more workers there was more impact to throughput, latency and CPU overhead. Overall though the impact was more reasonable at 10-20%.

Now your mileage will of course vary based on many factors such as your workload characteristics and hardware configurations, but overall the faster your storage and the slower and fewer your CPUs, the more of a performance penalty you can expect to encounter. Also remember you don’t have to encrypt your entire environment: you can pick and choose which VMs you want to encrypt using storage policies, so that should lessen the impact of encryption. If you have a need for encryption and the extra security it provides, it’s just the price you pay; how you use it is up to you. With whole VMs being capable of slipping out of your data center over a wire or in someone’s pocket, encryption is invaluable protection for your sensitive data. Below are some resources for more information on VM encryption in vSphere 6.5:

- What’s New in vSphere 6.5: Security (VMware vSphere Blog)

- vSpeaking Podcast Episode 29: vSphere 6.5 Security (VMware Virtually Speaking Podcast)

- VMworld 2016 USA INF8856 vSphere Encryption Deep Dive Technology Preview (VMworld TV)

- VMworld 2016 USA INF8850 vSphere Platform Security (VMworld TV)

- The difference between VM Encryption in vSphere 6.5 and vSAN encryption (Yellow Bricks)

Nov 22 2016

Looking for an affordable backup solution for your VMware environment? Check out Nakivo

Nakivo is a relatively new company in the backup solution segment for VMware environments, having been founded in 2012. I’ll be honest I had not heard of them until recently but once I looked into them I was impressed with their solutions and rapid growth rate. Their core product, Nakivo Backup & Replication provides companies with a robust data protection solution for their VMware environment and features an impressive list of enterprise grade features at an affordable price point.

Nakivo Backup & Replication can be deployed in a variety of methods including as a virtual appliance, on Windows or Linux, on AWS and something I found pretty slick, directly on a Synology or Western Digital NAS device. I have a Synology NAS unit as part of my home lab and they are great units that are available from small 2 drive models all the way up to 12 drive models that can be rack mounted or put on a shelf. To install it inside your Synology NAS unit you simply download the NAS package from Nakivo and then go to the Package Center in the Synology management console and install it. Once installed you can then access it from within your Synology management console.

As far as features go, Nakivo Backup & Replication has plenty of them including the following:

- Instant File/Object recovery

- Data deduplication and compression

- Multi-thread processing and network acceleration

- Flash VM boot (instant restore from repository)

- Screenshot backup verification (pretty cool!)

- Application aware backups (i.e. MS SQL, Exchange, Active Directory)

- Full Synthetic Data Storage (smaller & faster backups)

- Certified VMware Ready and VMware vCloud support

Plus a lot more; for the full list you can check out the Nakivo Backup & Replication product page or datasheet. That all sounds great, but what’s it going to cost you? I was impressed by their affordable pricing as well: their entry point is $199/socket for Pro Essentials and $299 for Enterprise Essentials, which gets you more features. Their Essentials licensing is similar to VMware’s Essentials offering, as it is sized for smaller SMB environments and has a limit of 6 licenses per environment. Their Pro ($399/socket) and Enterprise ($599/socket) licenses offer the same feature sets as their Essentials counterparts but with unlimited scalability. Nakivo even makes it attractive to move from a competitor’s platform with a special discounted $149/socket trade-in price. You can view the full pricing, licensing and feature information here.

Nakivo lets you try before you buy with a trial edition and they also offer a free edition as well. Their free edition is fully functional but has a limit of protecting only 2 VMs. So if you want to ensure that your VMware environment is well-protected from data corruption, malware, user mistakes, hardware failures or disasters give Nakivo a look and get peace of mind for an affordable price.

Nov 21 2016

vSphere C# Client officially gone in vSphere 6.5 but what are the HTML5 client limitations

Earlier this year I wrote about the end of life for our beloved vSphere C# thick client. Now that vSphere 6.5 is here, VMware delivered on their promise to eliminate the C# client in favor of the new HTML5 client that they have been working on to replace the current flash-based vSphere web client that everybody hates on. The new HTML5 web-based client is much faster than the old flash client and is a great improvement, but the only problem is that it’s only half done and has a lot of limitations right now. From the vSphere 6.5 release notes:

As of vSphere 6.5, VMware is discontinuing the installable desktop vSphere Client, one of the clients provided in vSphere 6.0 and earlier. vSphere 6.5 does not support this client and it is not included in the product download. vSphere 6.5 introduces the new HTML5-based vSphere Client, which ships with vCenter Server alongside the vSphere Web Client. Not all functionality in the vSphere Web Client has been implemented for the vSphere Client in the vSphere 6.5 release.

So in vSphere 6.5 you pretty much have two options for managing your vSphere environment: use the older, flash-based web client that is fully functional, or use the new HTML5 web client, which performs much better but is not fully functional, so you have to keep switching back to the flash-based web client. Not really an ideal situation, and hopefully VMware kicks it into high gear with the HTML5 client development so you can use it without limitations.

This link to VMware’s documentation provides a pretty long list of over 200 unsupported functionality actions in the HTML5 client. Note that now that the vSphere C# Client is officially dead they are referring to the HTML5 client as the vSphere Client going forward. The new vSphere Client is built into the vSphere 6.5 vCenter Server Appliance. You can also get the latest HTML5 client builds at the VMware Fling page, as it is delivered independently of vSphere and works with vSphere 6.0 & 6.5.

Nov 18 2016

New PowerCLI cmdlets to support VVol replication in vSphere 6.5

With the release of vSphere 6.5 and support for VVol replication there was also a need to be able to manage and automate certain replication related tasks. Out of the box there is no integration with VMware’s automation products such as SRM and vRealize Orchestrator and Automation. So to be able to actually use the VVol replication capabilities in vSphere 6.5 VMware had to develop new cmdlets for it. There are only a few scenarios associated with VVol replication that you can perform actions on. That includes doing either a planned or unplanned failover, a test failover, reversing replication and forcing a sync replication.

VMware posted PowerCLI 6.5R1 yesterday and below are the cmdlets related to VVol replication along with a brief description on what they do. Customers will have to create their own scripts for now until VMware adds support for VVol replication tasks into their automation products. Also note right now there is no cmdlet for doing a test failover which essentially clones the replicated VVols at the target site and exposes them to vSphere for testing. So if you want to do that (who wouldn’t) you’ll have to use one of their other programming interfaces such as Java.

- Get-SpbmFaultDomain – This cmdlet retrieves fault domains based on name or ID filter. The fault domain ID is globally unique.

- Get-SpbmReplicationGroup – This cmdlet retrieves replication groups. The replication groups can be of type source or target.

- Get-SpbmReplicationPair – This cmdlet retrieves the relation of replication groups in a pair of source and target replication group.

- Start-SpbmReplicationFailover – This cmdlet performs a failover of the devices in the specified replication groups. This cmdlet should be called at the replication target location. After the operation succeeds, the devices will be ready to be registered by using the virtual machine file path.

- Start-SpbmReplicationPrepareFailover – This cmdlet prepares the specified replication groups to fail over.

- Start-SpbmReplicationReverse – This cmdlet initiates reverse replication, by making the currently failed over replication group the source and its peer replication group the target. The devices in the replication group will start getting replicated to this new target site, which was the source before the failover.

- Sync-SpbmReplicationGroup – This cmdlet synchronizes the data between source and replica for the specified replication group. The replicas of the devices in the replication group are updated and a new point in time replica is created. This function should be called at the replication target location.

Nov 18 2016

Which storage vendors support VVols 2.0 in vSphere 6.5?

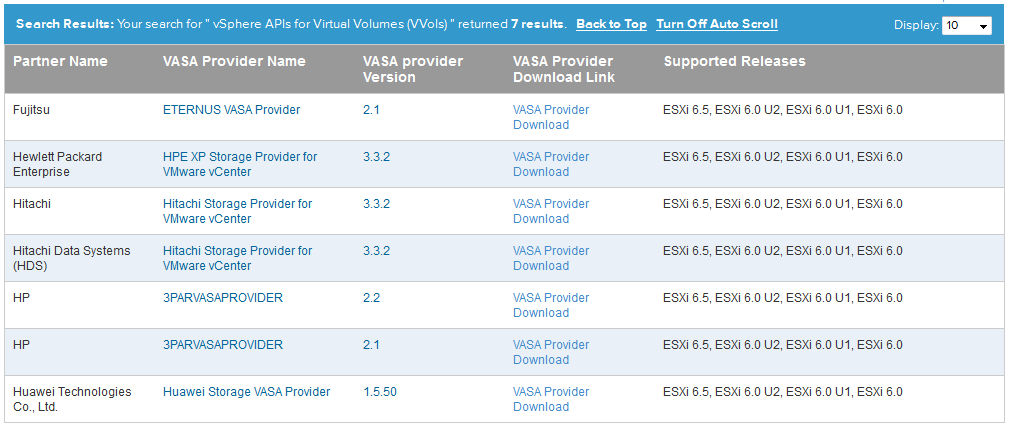

Answer: Not many at all. The vSphere 6.5 release introduces the next iteration of VVols that is built on the updated VASA 3.0 specification that brings support for array based replication which was not supported with VVols in vSphere 6. If you take a look at the VVols HCL for vSphere 6.5 there are only 4 vendors listed there for supporting VVols right now. This is pretty much in line with what happened with the first release of VVols in vSphere 6, only 4 vendors supported VVols on day 1 of the vSphere 6 launch as well.

The 4 vendors listed in the HCL for supporting VVols in vSphere 6.5 are Fujitsu, Hitachi (HDS), HPE & Huawei. One thing that is not clear though is which of those 4 vendors support replication with VVols. I had heard that there would be an indication of some sort in the HCL listing if a vendor supported VVol replication; presumably this would be listed in the Feature section. If this is the case it looks like there are no vendors that support VVol replication yet. Supporting VVols in vSphere 6.5 doesn’t automatically mean that you support replication as well. I know with HPE specifically, 3PAR does not yet support replication despite supporting VVols in vSphere 6.5; replication support will be coming in an upcoming 3PAR OS release.

I’m not really surprised by the lack of support on day 1 as implementing replication of VVols is complicated and requires a lot of development work. I’ve seen this first hand as I sit in on weekly meetings with the 3PAR VVol development team. To be fair, I don’t expect most vendors to rush their VVol support out the door as there are not really a lot of customers that are upgrading to vSphere 6.5 on day 1. The VVol design partners and reference platforms (HPE, Dell, NetApp) have an edge as they have been working longer at it and also working close with VMware to develop and test VVols through the vSphere 6.5 development lifecycle so I would expect them to be quicker to market with VVols 2.0 support.

So if you don’t see your vendor on the HCL today, have patience as they will likely introduce support for VVols when they are ready. Remember that VVols is a specification that VMware dictates and it’s up to each vendor to develop their own capabilities within that specification however they want to. I suspect if you check the HCL again in 3 months it will start to grow larger; I’m sure Dell, NetApp and Nimble may be there soon. So stay tuned, and if you’re really antsy to replicate VVols, check with your storage vendor and ask them when they will deliver it. VVols 2.0 delivers both maturity in the VASA specification as well as replication support, so it’s going to be worth the wait.

Nov 17 2016

A comparison of VMFS5 & VMFS6 in vSphere 6.5

vSphere 6.5 has introduced a new VMFS version 6 and there are a few changes in it compared to VMFS version 5 that you should be aware of. You have to love VMware’s crazy out of sync versioning across their product lines, now naturally you would think vSphere 6.0 would have VMFS6 in it but VMware kept it at VMFS5 with an incremental version and VMFS6 is new with vSphere 6.5. The table below highlights the difference between the two that you should be aware of but I also wanted to make you aware of some additional info you should know when upgrading to vSphere 6.5 or operating in a mixed vSphere version environment.

The first is once again you can’t upgrade in place existing VMFS5 volumes to VMFS6. That royally sucks and you have to plan migrations by creating new VMFS6 datastores, migrating VMs to them with Storage vMotion and then deleting the VMFS5 datastores when you are done to get back your disk space. Depending on your environment and how much space you have available on your array this can be a long and painful migration. [BEGIN VVols Plug] With VVols you don’t have to deal with any of that BS as you aren’t using VMFS and don’t have to constantly upgrade a file system [END VVols Plug]

Now you might think, screw that, a file system is just a file system and I’ll stick with VMFS5, but you miss out on the automatic space reclamation that is finally back in vSphere 6.5. Be aware that the new 512e drive support in vSphere 6.5 is supported on either VMFS6 or VMFS5 as long as the host is running ESXi 6.5. Beyond that there isn’t too much difference between the two, they also both now support 512 LUNs/VMFS datastores per host as well (note vSphere 6.5 storage doc incorrectly states 1024). So you may end up sticking with VMFS5 but I think the automatic reclamation does make for a compelling use case to upgrade to VMFS6.

So it’s up to you to decide, if you want to learn more be sure and look through the vSphere 6.5 storage documentation, and if you are fed up with VMFS upgrades and want something way cooler give VVols a serious look.

| Feature & Functionality | VMFS6 | VMFS5 |
| --- | --- | --- |
| Can vSphere 6.5 host access? | Yes | Yes |
| Can vSphere 6.0 and earlier hosts access? | No | Yes |
| VMFS Datastores per Host | 512* | 512* |
| 512n storage device support | Yes | Yes |
| 512e storage device support | Yes | Yes (Not on local 512e devices) |
| Automatic space reclamation (UNMAP) | Yes | No |
| Manual space reclamation (esxcli) | Yes | Yes |
| Space reclamation within guest OS | Yes | Limited |
| GPT storage device partitioning | Yes | Yes |
| MBR storage device partitioning | No | Yes |
| Block size | 1MB | 1MB |
| Default snapshot type | SEsparse | VMFSsparse (virtual disks < 2 TB), SEsparse (virtual disks > 2 TB) |
| Virtual disk emulation type | 512n | 512n |
| Support of small files of 1 KB | Yes | Yes |

Nov 16 2016

How the Top vBlogs are performing (or how to optimize your WordPress site)

My site has recently been plagued by performance issues and I spent a lot of time trying to figure out the cause as well as optimizing it so it would perform much better. It was pretty bad and pages were taking 8-12 seconds to load on average. As a former server admin my natural instinct was to immediately blame the hardware platform that my site was running on. In my case I run WordPress hosted by InMotion Hosting so I started by calling them and blaming them for my slow hosting.

They had me run some external tests and from those the underlying causes were uncovered (it wasn’t a server issue). Having eliminated the hosting platform as the cause I did a bunch of research and tried a lot of optimization plug-ins and methods in an effort to speed up the site. When I was done the combination of everything I had done had sped up the site considerably to the point that I am now happy with my overall performance.

Now that I am past that, I thought I would pass on some lessons learned to help others whose sites might not be optimized properly, which can cause your blog visitors’ experience to suffer, not to mention impact how Google ranks your site. Google has stated that PageSpeed, which is a measurement that they use when ranking search results, will directly impact how your site is ranked when users search for content. They have measurements for both mobile and desktop page load times; the longer your site takes to load, the worse you end up in their rankings. So having a site that is well optimized is very beneficial to both your visitors and to your visibility on the internet.

So let’s start with how to analyze your site. I mainly used one online tool for that, which is a site called GTmetrix. All you do is go to their site and put in your blog URL, and they run a variety of tests on it and generate a report that has detailed scores, benchmarks, timings and recommendations. They run your site through both Google PageSpeed testing and YSlow testing, so your site is analyzed from 2 different perspectives. The results can point you in the right direction of what to fix, and the recommendations they provide can tell you how to do it. When you run a report make sure you click on the Waterfall tab to see the load times for all your website elements.

My site was initially scoring an F grade in both PageSpeed and YSlow, so I had a lot of work to do. Below are the various things I did to bring that score up to an A & B grade.

Implement a caching plug-in

I went with W3 Total Cache which has excellent reviews, it’s fairly easy to install but there are a lot of knobs you have to turn to configure it optimally, here is a guide to get it set properly although every site may vary.

Implement CDN caching

Having a content delivery network (CDN) cache your content for you can help your site perform considerably faster, as they deliver content from CDN servers across the world instead of from your site. CDN servers are typically closer geographically to visitors and can serve content more efficiently. W3 Total Cache can work together with a CDN to provide the best possible site performance. I signed up for a free CloudFlare CDN account, updated my DNS nameservers to point to the CloudFlare nameservers and then configured the CloudFlare extension inside the W3 Total Cache plug-in. All in all it wasn’t that difficult, and you can read how to do it here.

Optimize your database

Your WordPress MySQL database can accumulate a lot of remnants and junk over time, so the best way to clean it up is to use a plug-in that will scan all your tables and make clean-up recommendations that you can execute. I first disabled revisions on my site as the more you edit a doc (which I do frequently on my link pages) you will create more revisions of it that fill up your database. I used the Advanced Database Cleaner plug-in which did the job nicely, be sure and backup your database first using a plug-in or through your provider.

Modify ajax heartbeats

Disabling or limiting ajax heartbeats is recommended to improve performance; here is a good article on how to do that with a plug-in. In my case admin-ajax.php was causing a lot of delay in load times, which was mostly caused by a plug-in (still trying to fix that). I identified that in the Waterfall tab of the GTmetrix report: there was a GET to admin-ajax.php that was taking 6-7 seconds to complete.
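If you would rather not eyeball the waterfall, a short Python sketch like the one below can pull out the slowest requests for you. It assumes you have the waterfall exported as a HAR file (your browser’s developer tools can export one); the `slowest_requests` function name and the sample data are made up for illustration.

```python
import json


def slowest_requests(har: dict, top: int = 5) -> list:
    """Return the `top` slowest entries as (milliseconds, method, url) tuples.

    Relies on the standard HAR layout: log.entries[], each with a total
    `time` in milliseconds and a `request` holding `method` and `url`.
    """
    entries = har["log"]["entries"]
    rows = [(e["time"], e["request"]["method"], e["request"]["url"])
            for e in entries]
    return sorted(rows, reverse=True)[:top]


# Made-up sample data in HAR shape; real use would load an exported file:
#   with open("waterfall.har") as fh: har = json.load(fh)
sample = {"log": {"entries": [
    {"time": 120.0, "request": {"method": "GET", "url": "https://example.com/style.css"}},
    {"time": 6500.0, "request": {"method": "GET", "url": "https://example.com/wp-admin/admin-ajax.php"}},
    {"time": 340.0, "request": {"method": "GET", "url": "https://example.com/logo.png"}},
]}}

for ms, method, url in slowest_requests(sample, top=2):
    print(f"{ms:8.0f} ms  {method}  {url}")
```

In the sample data the admin-ajax.php request sorts to the top, which is the same kind of outlier I spotted by hand in the Waterfall tab.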

Optimize your images

I tend to use a lot of images in my content, and unless you optimize them properly they can really slow your site down, as non-optimized images take much longer to load. I initially looked at the WP Smush plug-in but ended up going with the EWWW Image Optimizer plug-in instead. It will run through all your existing images and optimize them, reducing their total size and therefore their load time.

Force browser caching

I inserted some code into my .htaccess file to force browser caching, you can read how to do that here.
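One way to check whether browser caching is actually in effect is to look at the response headers your server sends for a static file such as an image or stylesheet. The Python sketch below only inspects the `Cache-Control` header (it ignores `Expires`), and the `browser_cache_seconds` helper is a made-up name for this example rather than part of any tool mentioned here.

```python
import re


def browser_cache_seconds(headers: dict) -> int:
    """Return the max-age a browser may cache for, or 0 if none is advertised."""
    cache_control = ""
    for name, value in headers.items():
        if name.lower() == "cache-control":  # header names are case-insensitive
            cache_control = value.lower()
    # no-store / no-cache mean the browser should not reuse the copy as-is
    if "no-store" in cache_control or "no-cache" in cache_control:
        return 0
    match = re.search(r"max-age=(\d+)", cache_control)
    return int(match.group(1)) if match else 0


# Example headers as a browser or curl -I might show them (made-up values)
print(browser_cache_seconds({"Cache-Control": "public, max-age=2592000"}))
print(browser_cache_seconds({"Content-Type": "image/png"}))
```

A result of 0 for your images and CSS files suggests the browser has not been told it can keep them, so repeat visitors end up downloading the same files again.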

Investigate your plug-ins

Plug-ins can frequently be the cause of slow performance, but unfortunately there isn’t any easy way to determine which one is at fault. There is a plug-in called P3 Performance Profiler that GoDaddy created for WordPress to analyze plug-in performance, but unfortunately it hasn’t been updated in over 2 years and does not work with the latest versions of WordPress, so avoid it. If you look at the GTmetrix Waterfall results you might be able to identify a slow plug-in, but if you suspect a plug-in is causing performance issues the easiest way to identify which one is to disable them one at a time and run a new GTmetrix scan afterwards to see if your site performance improves. Once you know which plug-in is causing it, go talk to the developer or switch to a different plug-in that offers the same functionality.

How did the Top vBlogs score?

So once I had my site optimized I was curious as to how other Top vBlogs were performing, so I ran the top 10 through GTmetrix and below are the results. Hats off to Chris Wahl and Scott Lowe, whose sites are blazing fast. Alan Renouf’s site was generating 404 errors in the analysis so I skipped it and included Chad Sakac instead. I encourage other bloggers to test out their sites as well, see how you score and let me know in the comments. Also let me know what you did to improve it, and hopefully what I posted here helped you out.

| Site URL | PageSpeed Score | YSlow Score | Page Load Time | Total Page Size | Requests |
| --- | --- | --- | --- | --- | --- |
| http://www.yellow-bricks.com/ | C (79%) | E (51%) | 3.5s | .97MB | 57 |
| http://www.virtuallyghetto.com/ | B (84%) | D (62%) | 6.5s | 879KB | 77 |
| http://cormachogan.com/ | B (83%) | D (61%) | 3.4s | 940KB | 79 |
| http://www.frankdenneman.nl/ | C (75%) | D (66%) | 4.7s | 1.05MB | 58 |
| http://wahlnetwork.com/ | A (96%) | B (86%) | 1.7s | 368KB | 40 |
| http://www.vladan.fr/ | C (75%) | E (57%) | 5.2s | 1.53MB | 130 |
| http://blog.scottlowe.org/ | A (96%) | B (87%) | 0.8s | 190KB | 11 |
| http://www.ntpro.nl/blog/ | F (35%) | E (58%) | 3.6s | 1.28MB | 35 |
| http://www.derekseaman.com/ | C (77%) | E (54%) | 8.4s | 1.14MB | 124 |
| http://virtualgeek.typepad.com/ | D (63%) | D (67%) | 6.6s | 6.35MB | 70 |

Nov 15 2016

Configuration Maximum changes from vSphere 6.0 to vSphere 6.5

vSphere 6.5 is now available and with every release VMware makes changes to the configuration maximums for vSphere. Since VMware never highlights what has changed between releases in their official Configuration Maximum 6.5 documentation, I thought I would compare the document with the 6.0 version and list the changes between the versions here.

| Configuration | vSphere 6.5 | vSphere 6.0 |
| --- | --- | --- |
| Virtual Machine Maximums | | |
| RAM per VM | 6128GB | 4080GB |
| Virtual NVMe adapters per VM | 4 | N/A |
| Virtual NVMe targets per virtual SCSI adapter | 15 | N/A |
| Virtual NVMe targets per VM | 60 | N/A |
| Virtual RDMA Adapters per VM | 1 | N/A |
| Video memory per VM | 2GB | 512MB |
| ESXi Host Maximums | | |
| Logical CPUs per host | 576 | 480 |
| RAM per host | 12TB | 6TB *some exceptions |
| LUNs per server | 512 | 256 |
| Number of total paths on a server | 2048 | 1024 |
| FC LUNs per host | 512 | 256 |
| LUN ID | 0 to 16383 | 0 to 1023 |
| VMFS Volumes per host | 512 | 256 |
| FT virtual machines per cluster | 128 | 98 |
| vCenter Maximums | | |
| Hosts per vCenter Server | 2000 | 1000 |
| Powered-on VMs per vCenter Server | 25000 | 10000 |
| Registered VMs per vCenter Server | 35000 | 15000 |
| Number of host per datacenter | 2000 | 500 |
| Maximum mixed vSphere Client (HTML5) + vSphere Web Client simultaneous connections per VC | 60 (30 Flex, 30 maximum HTML5) | N/A |
| Maximum supported inventory for vSphere Client (HTML5) | 10,000 VMs, 1,000 Hosts | N/A |
| Host Profile Datastores | 256 | 120 |
| Host Profile Created | 500 | 1200 |
| Host Profile Attached | 500 | 1000 |
| Platform Services Controller Maximums | | |
| Maximum PSCs per vSphere Domain | 10 | 8 |
| vCenter Server Extensions Maximums | | |
| [VUM] VMware Tools upgrade per ESXi host | 30 | 24 |
| [VUM] Virtual machine hardware upgrade per host | 30 | 24 |
| [VUM] VMware Tools scan per VUM server | 200 | 90 |
| [VUM] VMware Tools upgrade per VUM server | 200 | 75 |
| [VUM] Virtual machine hardware scan per VUM server | 200 | 90 |
| [VUM] Virtual machine hardware upgrade per VUM server | 200 | 75 |
| [VUM] ESXi host scan per VUM server | 232 | 75 |
| [VUM] ESXi host patch remediation per VUM server | 232 | 71 |
| [VUM] ESXi host upgrade per VUM server | 232 | 71 |
| Virtual SAN Maximums | | |
| Virtual machines per cluster | 6000 | 6400 |
| Number of iSCSI LUNs per Cluster | 1024 | N/A |
| Number of iSCSI Targets per Cluster | 128 | N/A |
| Number of iSCSI LUNs per Target | 256 | N/A |
| Max iSCSI LUN size | 62TB | N/A |
| Number of iSCSI sessions per Node | 1024 | N/A |
| iSCSI IO queue depth per Node | 4096 | N/A |
| Number of outstanding writes per iSCSI LUN | 128 | N/A |
| Number of outstanding IOs per iSCSI LUN | 256 | N/A |
| Number of initiators who register PR key for a iSCSI LUN | 64 | N/A |
| Storage Policy Maximums | | |
| Maximum number of VM storage policies | 1024 | Not Published |
| Maximum number of VASA providers | 1024 | Not Published |
| Maximum number of rule sets in VM storage policy | 16 | N/A |
| Maximum capabilities in VM storage policy rule set | 64 | N/A |
| Maximum vSphere tags in virtual machine storage policy | 128 | Not Published |
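If you want to do this kind of comparison yourself for a future release, the idea is simple enough to sketch in a few lines of Python. The handful of values below are copied from the table above; the `MAXIMUMS` dictionary and `describe_changes` function are just illustrative names, not anything VMware provides.

```python
# (vSphere 6.5 value, vSphere 6.0 value) for a few rows from the table above
MAXIMUMS = {
    "Hosts per vCenter Server": (2000, 1000),
    "Powered-on VMs per vCenter Server": (25000, 10000),
    "LUNs per server": (512, 256),
    "Host Profile Created": (500, 1200),
    "Virtual machines per cluster (Virtual SAN)": (6000, 6400),
}


def describe_changes(maximums: dict) -> list:
    """Describe each changed maximum, its direction and the new/old ratio."""
    lines = []
    for name, (new, old) in maximums.items():
        direction = "up" if new > old else "down"
        lines.append(f"{name}: {old} -> {new} ({direction}, {new / old:.2f}x)")
    return lines


for line in describe_changes(MAXIMUMS):
    print(line)
```

Printing the direction makes it easy to spot that not every maximum went up: Host Profile Created and Virtual SAN virtual machines per cluster both decreased in 6.5.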