Author's posts

Apr 12 2017

About public voting for VMworld sessions

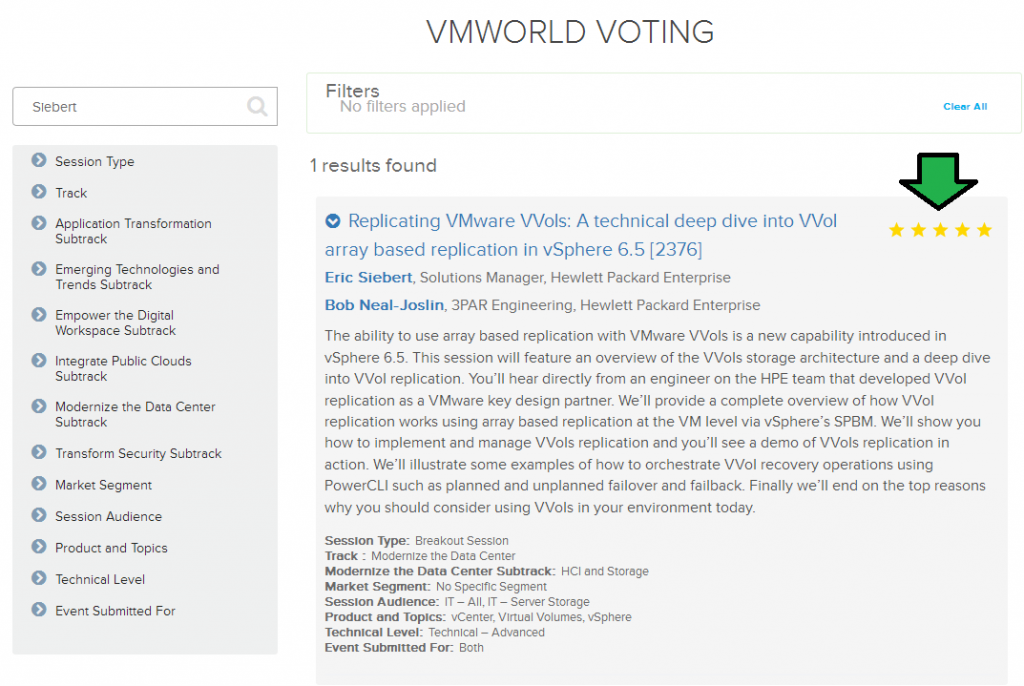

Each year VMware sets aside a small chunk of VMworld session slots for what they call Customer Choice. Anyone can vote on these sessions through the public voting process, which is going on right now through tomorrow.

You may have noticed that the public voting process has changed this year. In prior years you voted for a session much like you would in an election: you clicked a star next to a session to vote for it. That way you could only positively impact a session’s chances of being approved, which improved if it garnered enough votes. This year they have changed it and you can now rate a session from 1-5 stars, much like you would rate a movie, with 1 star meaning you hated it and 5 stars meaning you loved it. With this change anyone can now either positively or negatively influence session approval.

I’m not sure I like this change that much as it opens up the opportunity for abuse of the system, where anyone who has a grudge, doesn’t like a person or company, or just wants to screw around can shoot down a session’s chances of making it. Because of this change VMware yanked most of the partner sessions from the public voting a few days after the voting opened, as partners could and probably did cast negative votes against their competitors’ sessions. Apparently VMware pulled them as a direct response to complaints from partners about this very issue.
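To make the difference between the two schemes concrete, here is a minimal Python sketch. VMware’s actual aggregation method isn’t described here, so the simple average used for the star ratings is purely an assumption, and the vote counts are made up for illustration.

```python
from statistics import mean

def old_style_score(votes: int) -> int:
    """Old scheme: each click is one positive vote, so the score can only go up."""
    return votes

def star_rating_score(ratings: list[int]) -> float:
    """New scheme (assumed aggregation): the mean of all 1-5 star ratings."""
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be between 1 and 5 stars")
    return mean(ratings)

# Hypothetical session: 20 genuine fans rate it 5 stars.
fans = [5] * 20
# Hypothetical abuse: 10 people with a grudge rate it 1 star.
grudge_votes = [1] * 10

print(old_style_score(len(fans)))               # old scheme, fans only
print(star_rating_score(fans))                  # new scheme, fans only
print(star_rating_score(fans + grudge_votes))   # new scheme, fans plus grudge votes
```

Under the old scheme the grudge voters simply have nothing to click, while under an averaged rating they drag the score down even though the number of fans hasn’t changed.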

Now sessions are all scored and judged both internally by VMware and by a hand-picked content committee; the public voting part is merely another component that can influence session approval or denial. I’d really like to see it go back to the old way: let people just give a thumbs up for sessions they like and keep the negative influence out of it. Hopefully after they see the impact of this change they will reconsider and go back to that. If you haven’t voted yet, please consider doing so, as the voting closes at the end of the day tomorrow.

Apr 11 2017

An overview of what’s new in VMware vSAN 6.6

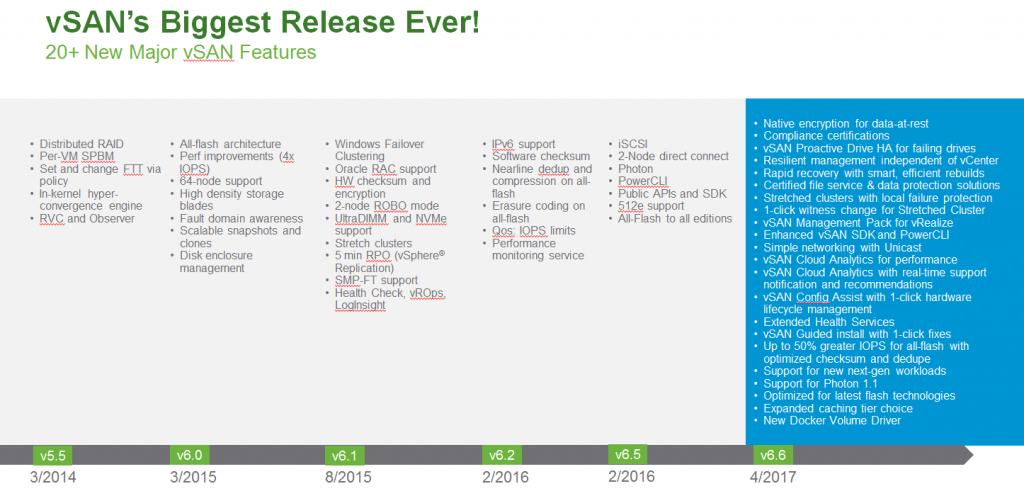

It was back in October when VMware announced vSAN 6.5 and now just 6 months later they are announcing the latest version of vSAN, 6.6. With this new release vSAN turns 3 years old and it’s come a long way in those 3 years. VMware claims this is the biggest release yet for vSAN but if you look at what’s new in this release it’s mainly a combination of a lot of little things rather than some of the big things in prior releases like dedupe, compression, iSCSI support, etc. It is however an impressive list of enhancements in this release which should make for an exciting upgrade for customers.

As usual with each new release, VMware posted their customer adoption numbers for vSAN, and as of right now they are claiming 7,000+ customers. Below are their customer counts by release:

- Aug 2015 – vSAN 6.1 – 2,000 customers

- Feb 2016 – vSAN 6.2 – 3,000 customers

- Aug 2016 – vSAN 6.5 – 5,000 customers

- Apr 2017 – vSAN 6.6 – 7,000 customers

The numbers show that VMware is adding about 2,000 customers every 6 months, or about 11 customers a day, which is an impressive growth rate. Now back to what’s new in this release: the below slide illustrates the impressive list of what’s new compared to prior versions, backing up VMware’s claim of the biggest vSAN release ever.
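As a quick sanity check on that customers-per-day figure, the arithmetic can be sketched in a few lines of Python. The helper name is hypothetical and an average month length of 365.25 / 12 days is assumed.

```python
DAYS_PER_MONTH = 365.25 / 12  # assumed average month length in days

def customers_per_day(new_customers: int, months: float) -> float:
    """Average number of customers added per day over a period of `months`."""
    return new_customers / (months * DAYS_PER_MONTH)

# About 2,000 new customers every 6 months, as stated above.
rate = customers_per_day(2_000, 6)
print(f"{rate:.1f} customers per day")
```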

Some of these things are fairly minor and I don’t know if I would call them ‘major’ features. This release seems to polish and enhance a lot of things to make for a more improved and mature product overall. I’m not going to go into a lot of detail on all of these but I will highlight a few things. First, here’s the complete list in an easier to read format:

- Native encryption for data-at-rest

- Compliance certifications

- vSAN Proactive Drive HA for failing drives

- Resilient management independent of vCenter

- Rapid recovery with smart, efficient rebuilds

- Certified file service & data protection solutions

- Stretched clusters with local failure protection

- 1-click witness change for Stretched Cluster

- vSAN Management Pack for vRealize

- Enhanced vSAN SDK and PowerCLI

- Simple networking with Unicast

- vSAN Cloud Analytics for performance

- vSAN Cloud Analytics with real-time support notification and recommendations

- vSAN Config Assist with 1-click hardware lifecycle management

- Extended Health Services

- vSAN Guided install with 1-click fixes

- Up to 50% greater IOPS for all-flash with optimized checksum and dedupe

- Support for new next-gen workloads

- Support for Photon 1.1

- Optimized for latest flash technologies

- Expanded caching tier choice

- New Docker Volume Driver

Support for data at rest encryption isn’t really anything new, as it was introduced in vSphere 6.5, can be used with any type of storage device and is applied to individual VMs. Encryption with vSAN can now also be done at the cluster level so your entire vSAN environment is encrypted, for those that desire it. Encryption is resource intensive though and adds overhead, as VMware documented, so you may want to implement it at the VM level instead.

—————– Begin update

As Lee points out in the comments, a big difference with encryption in vSAN 6.6 is that storage efficiencies are preserved. This is notable because data is now encrypted after it is deduped and compressed. If you encrypted it first using the standard VM encryption in vSphere 6.5, you wouldn’t really be able to dedupe or compress it effectively. Essentially what happens in vSAN 6.6 is that writes are broken into 4K blocks, which then get a checksum, then get deduped, then compressed, and finally encrypted (512b or smaller blocks).

—————– End update
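The reason the ordering matters can be shown with a small Python sketch. It is not vSAN’s implementation: zlib stands in for the compression step, and a toy XOR keystream built from SHA-256 stands in for real encryption so the example needs only the standard library. The key and the sample data are made up.

```python
import hashlib
import zlib

def toy_encrypt(data: bytes, key: bytes) -> bytes:
    """XOR data with a SHA-256 counter keystream.

    Illustration only: this stands in for real encryption so the example
    needs nothing beyond the standard library. Do not use it for security.
    """
    keystream = bytearray()
    counter = 0
    while len(keystream) < len(data):
        keystream.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(d ^ k for d, k in zip(data, keystream))

block = b"vSAN" * 1024   # a 4 KiB block of highly repetitive data
key = b"demo-key"        # hypothetical key

# Order described above for vSAN 6.6: compress first, then encrypt.
compress_then_encrypt = toy_encrypt(zlib.compress(block), key)

# Encrypt first (as with VM-level encryption), then try to compress.
encrypt_then_compress = zlib.compress(toy_encrypt(block, key))

print(len(block), len(compress_then_encrypt), len(encrypt_then_compress))
```

Encrypted output looks random, so compressing it afterwards saves essentially nothing, while compressing first shrinks the repetitive block to a small fraction of its size before it is encrypted.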

VMware has made improvements to stretched clustering in vSAN 6.6 allowing for storage redundancy both within a site AND across sites at the same time. This provides protection against entire site outages as expected but also protection against host outages within a site. They also made it easier to configure options that allow you to protect VMs across a site, or just within a single site. Finally they made it easier to change the host location of the witness component which is essentially the 3rd party mediator between 2 sites.

Performance improvements are always welcome, especially around features that can tax the host and impact workloads, like dedupe and compression. In vSAN 6.6 VMware spent considerable time optimizing I/O handling and efficiency to help reduce overhead and improve overall performance. To accomplish this they did a number of things, which are detailed below:

- Improved checksum – Checksum read and write paths have been optimized to avoid redundant table lookups and to take a more efficient path to fetch checksum data. Checksum reads benefit the most.

- Improved deduplication – Destages in log order for more predictable performance, especially for sequential writes. Optimizes multiple I/Os to the same Logical Block Address (LBA). Increases parallelization for dedupe.

- Improved compression – New efficient data structure to compress meta-data writes. Meta-data compaction helps with improving performance for guest and backend I/O.

- Destaging optimizations – Proactively destages data to avoid metadata build-up that would impact guest IOPS or re-sync IOPS. Can help with large numbers of deletes, which invoke metadata writes. More aggressive destaging can help in write intensive environments, reducing the times in which flow control needs to throttle. Applies to hybrid and all-flash.

- Object management improvements (LSOM File System) – Reduce compute overhead by using more memory. Optimize destaging by reducing cache/CPU thrashing.

- iSCSI for vSAN performance improvements, made possible by an upgraded version of FreeBSD used in vSAN (vSAN 6.5 used FreeBSD 10.1; vSAN 6.6 uses version 10.3) and general improvements to LSOM.

- More accurate guidance on cache sizing. The earlier guidance was based on 10% of usable capacity, which didn’t represent larger capacity footprints well.
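As a rough illustration of the older rule of thumb mentioned in the last bullet, the sketch below sizes the cache tier at 10% of usable capacity. The function name and the example capacities are hypothetical, and the newer vSAN 6.6 guidance is not modeled because it isn’t detailed here.

```python
def legacy_cache_size_gb(usable_capacity_gb: float, ratio: float = 0.10) -> float:
    """Older rule of thumb: cache tier sized as a fixed share of usable capacity."""
    if usable_capacity_gb < 0:
        raise ValueError("capacity must not be negative")
    return usable_capacity_gb * ratio

# Made-up capacity footprints, from small to large.
for usable_gb in (2_000, 20_000, 100_000):
    cache_gb = legacy_cache_size_gb(usable_gb)
    print(f"{usable_gb:>7} GB usable -> {cache_gb:,.0f} GB cache")
```

Because the rule scales linearly, the suggested cache keeps growing with capacity, which may be part of why it fit larger footprints poorly.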

VMware performed testing between vSAN 6.5 & vSAN 6.6 using 70/30 random workloads and found a 53%-63% improvement in performance, which is quite significant. If nothing else this alone makes for a good reason to upgrade.

To find out even more about vSAN 6.6 check out the below links from VMware:

- What’s New with VMware vSAN 6.6 (Virtual Blocks blog)

- vSAN 6.6 – Native Data-at-Rest Encryption (Virtual Blocks blog)

- vSAN 6.6 Online Health Check and performance improvements (Virtual Blocks blog)

- Goodbye Multicast (Virtual Blocks blog)

- 04/11: What’s New in vSAN 6.6 (VMware webinar)

Apr 04 2017

Public voting for VMworld 2017 sessions is open through April 13th

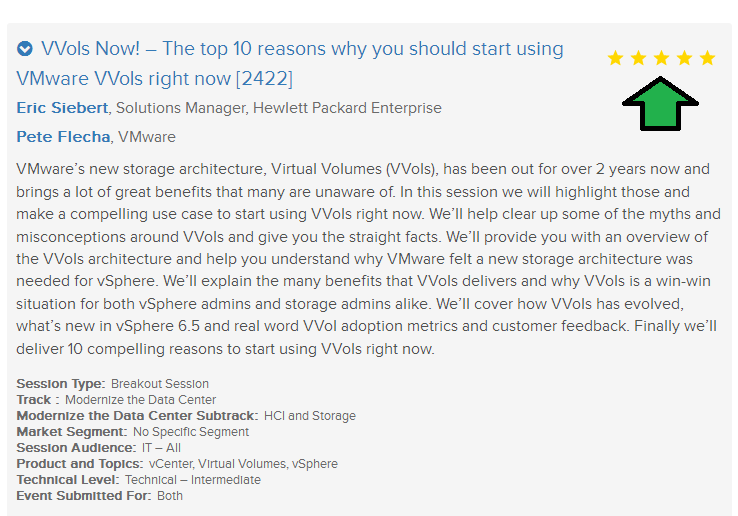

Public voting for VMworld 2017 sessions is now open through April 13th. The public voting is just one part of a whole scoring process that also includes content committee voting and sponsor voting. VMware reserves a small chunk of session slots (5%) that they call Customer Choice that can make it in via the public voting; the remainder of the session slots are typically filled by VMware sessions, sponsor sessions and sessions that score favorably through the content committee voting.

I’d greatly appreciate your consideration in voting for my 2 submissions. One is a session on the new VVols array based replication feature in vSphere 6.5; my co-speaker is one of our VVol engineers who has been doing the development work on VVol replication for us, so it will be a very technical session. The other is a “why VVols” type session with Pete Flecha from VMware that covers the top 10 reasons why you would want to implement VVols right now. Simply search on my last name (Siebert) or session IDs (2376 & 2422) to find them.

You can vote on as many sessions as you want, but since the list is so large (1,499) you are better off searching or filtering it on topics that interest you. You can only cast one vote for a session though. To vote on sessions, just go to the VMworld Session Voting page and click the Vote Now button at the bottom of the page. If you don’t have an existing VMworld account you can create one for free; you’ll need to enter some basic required information (username, email, address info) and then an account will be created for you.

Once you are at the Content Catalog you will see the full list of sessions. Enter a search term (e.g. speaker name, VVols, VSAN, etc.) or select filters from the left side (track/sub-track/type). Note that VMware has changed the voting method this year. In prior years you simply clicked a star icon to vote for a session; this year you can actually grade the session on a scale from 1 star (poor) to 5 stars (excellent). So instead of simply selecting all the sessions that you like, now they want you to grade each session (see below figure). The big difference here is that by clicking the stars this year you’re not simply giving a thumbs up for the session; your votes can now either positively or negatively influence the overall scoring for the session.

You can vote for as many sessions as you want, so head on over and rock the vote!

Apr 03 2017

VMFS vs. RDM – Fight!

The question often comes up: when should I use Raw Device Mappings (RDMs) instead of traditional VMFS/VMDK virtual disks? The answer is usually to always use VMFS unless you fit into specific use cases. An RDM is a raw LUN that is presented directly to a VM instead of creating a traditional virtual disk on a VMFS datastore. Because a VM is directly accessing a LUN there is a natural tendency to think that an RDM would provide better performance, as the VM is reading/writing directly to a LUN and not having to deal with the overhead of the VMFS file system. While you might think that, it’s not the case: RDMs provide the same performance levels as a VM on VMFS does. To prove this VMware performed testing and published a white paper way back in 2007 on ESX 3.0, where they did head to head testing of the same workloads on a VM on VMFS and a VM using an RDM. The conclusion of their testing was:

- “The performance tests described in this study show that VMFS and RDM provide similar I/O throughput for most of the workloads we tested.”

They went on to list some of the use cases where one might want to use an RDM, such as a clustering solution like MSCS or when you need to take array snapshots of a single VM. Some additional use cases might be an application that needs to write SCSI commands directly to a LUN, or if you may potentially need to move an application from a VM to a physical server where you can move the LUN. Performance should really never be a reason for using an RDM, and RDMs also have some disadvantages, which include more difficult management and certain vSphere feature restrictions.

They also tested VMFS vs RDM again with vSphere 5.1 and once again the conclusions were the same:

- “What you should takeaway from this vSphere 5.1 data, as well as all previously published data, is that there is really no performance difference between VMDK and RDM and this holds true for all versions of our platform. +/- 1% is insignificant in today’s infrastructure and can often be attributed to noise.”
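One way to apply that +/- 1% rule of thumb when comparing your own benchmark runs is sketched below in Python. The throughput figures are invented for illustration and are not taken from VMware’s white papers; the helper names are hypothetical too.

```python
def percent_difference(baseline: float, candidate: float) -> float:
    """Relative difference of candidate vs. baseline, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (candidate - baseline) / baseline * 100.0

def is_noise(baseline: float, candidate: float, threshold_pct: float = 1.0) -> bool:
    """Treat differences within +/- threshold_pct as measurement noise."""
    return abs(percent_difference(baseline, candidate)) <= threshold_pct

# Made-up IOPS numbers for the same workload on each disk type.
rdm_iops = 10_000.0
vmfs_iops = 10_080.0

diff = percent_difference(rdm_iops, vmfs_iops)
print(f"VMFS vs RDM: {diff:+.2f}% -> noise: {is_noise(rdm_iops, vmfs_iops)}")
```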

Well, those older recommendations still apply today, and to prove that VMware just published a new white paper that examines SQL Server performance on vSphere 6.5. As part of that white paper they chose to revisit the VMFS vs RDM topic and included testing of SQL Server performance on both VMFS and RDM disks. Not surprisingly the results were the same; in fact they found VMFS performed ever so slightly better than an RDM disk. The conclusion of this recent testing was:

- “The results from DVD Store 3 running on the same host reveals that, as expected, the performance is virtually identical; in fact, just like the vSphere 5.1 case, the VMFS case slightly (1%) outperformed RDM.”

You can see the results in the below graph which validates VMware’s recommendation to use VMFS by default unless you have a special functional requirement that would require using an RDM.

Mar 21 2017

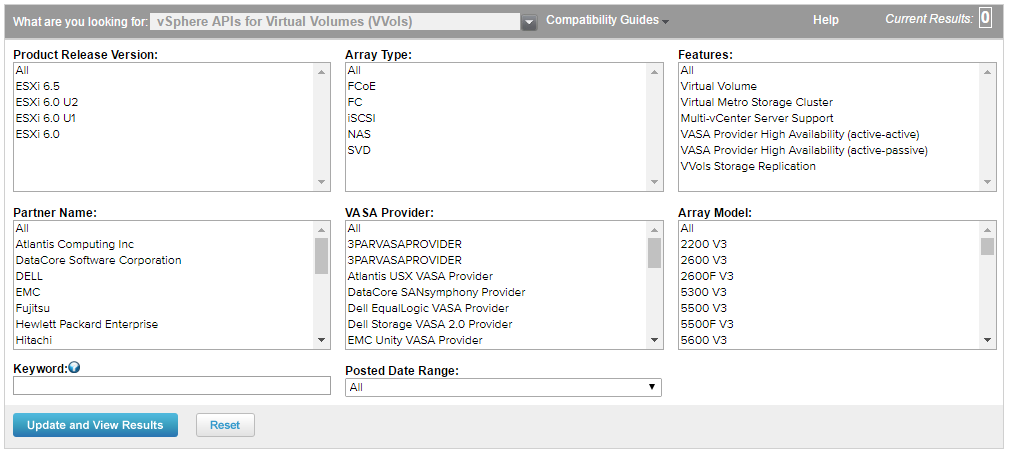

An overview of current storage vendor support for VMware Virtual Volumes (VVols)

VMware’s new external storage architecture, Virtual Volumes (VVols), turned 2 years old this month as it launched as part of vSphere 6.0. The new VASA specification for VVols has been under development by VMware for over 6 years and it’s up to storage vendors to set their own development pace for supporting it. In this post I’ll provide an update on how each storage vendor is positioned to support VVols. This is entirely based on what is published in the VVols HCL (see below figure) and some research I have done. It will not cover things like the capabilities and scalability of VVols, as it is up to each vendor to dictate what they are capable of doing with VVols and how many VVols they can support on a storage array.

Let’s first take a look in detail at the categories listed in the table below and explain them a bit further:

Vendor

On day 1 of the vSphere 6.0 launch there were only 4 vendors that had support for VVols. There are 17 vendors that support VVols today. Almost all of the bigger vendors like IBM, HDS, HPE, EMC and NetApp support them, but there are a number of smaller vendors that do as well, such as SANBlaze, Primary Data, Fujitsu, NEC and Atlantis. Noticeably absent is Pure Storage, which is odd as they demoed VVols at Tech Field Day back in 2014. You also might note that NAS vendors tend to be less interested in developing support for VVols, as NAS already allows an array to see individual VMs because they are written as files rather than stored on LUNs. Because of this NAS arrays already have the ability to interact with VMs using vendor developed tools, so the VVols solution becomes less appealing to them with all the extra development work to achieve roughly the same outcome.

Array Model

Each array family must be certified for VVols and it’s up to each vendor to decide on how and if they want to do that. With EMC they chose to do it through Unity instead of doing it directly within the storage array. There are some additional vendors like DataCore, SANBlaze and Primary Data that sell storage virtualization products that sit in front of any brand of primary storage array and present aggregated storage to ESXi hosts. These vendors build VVols into their software so they can support VVols even if the back-end storage array does not. The VVol HCL will list exactly what model arrays are supported by each vendor and the firmware that is required for VVols support.

Array Type (Protocols)

Again it is up to each vendor to decide which protocols they will support with VVols on each array model. VVols is protocol agnostic and will work with FCoE, NFS, iSCSI, FC and SVD. Note some vendors will only support block protocols, others just NAS, and some support all protocols. The VVol HCL will list which protocols are supported by each vendor for their arrays that support VVols. You’ll see SVD listed as an array type; that stands for “Storage Virtualization Device”. The only 2 vendors listed for that array type are IBM (SVC) and NetApp (ONTAP).

vSphere Version

This lists the versions of vSphere that are supported by a storage array with VVols; the options include all the update versions of 6.0 (U1-U3) and 6.5. The 6.0 versions are basically all similar to each other and they all run under the VASA 2.0 specification. With 6.5, VASA 3.0 is the new VASA specification which introduces support for VVol replication. However an array can be certified on vSphere 6.5 and only support VASA 2.0. Supporting 6.5 does not automatically indicate VVol replication support; this support is specifically noted in the Features field in the HCL. Right now there are only 9 vendors that provide VVol support on vSphere 6.5.

VASA Provider Type

This one isn’t explicitly listed in the HCL; I had to do my own research to determine this. The VASA Provider component can be deployed either internal to an array (embedded in the array controllers/firmware) or deployed externally as a pre-built VM appliance or as software that can be installed on a VM or physical server. It is up to each vendor to determine how they want to do this. The internal method has its advantages by being easier to deploy and manage and by being highly available. The external method however tends to be easier for vendors to develop as they don’t have to modify array firmware or use array resources and can simply bolt it on as an external component. The only hardware (not VSA/SVD) storage vendors that deploy it internally are HPE, SolidFire, NexGen and Nimble Storage. Some of the storage virtualization vendors like EMC (Unity) and Primary Data build it into their software so it’s considered internal.

VASA Provider HA

The VASA Provider is a critical component in the VVols architecture and if it becomes unavailable you can’t do certain VVol operations like powering on a VM. As a result you want to ensure that it is highly available. With internal VASA Providers this doesn’t apply as much, as the array would have to go down to take down the VASA Provider and if that happens you have bigger problems. With external VASA Providers it is more critical to protect it as it is more vulnerable to things like a host crash, network issues, someone powering it off, etc. There are different ways to provide HA for the VASA Provider and it’s up to each vendor to figure out how they want to do it. You can rely on vSphere HA to restart it in the event of a host crash or a vendor can build their own solution to protect it.

The VASA Provider HA feature in the HCL only indicates if a vendor has created and certified an active-active or active-passive HA solution for the VASA Provider using multiple VASA providers which only 3 vendors have done (NexGen, SANBlaze and IBM). Other vendors may have different ways of providing HA for the VASA Provider so check with your vendor to see how they implement it and understand what the risks are.

Multi vCenter Servers

Support for multiple vCenter Servers registering and connecting to a storage array is indicated in the feature column of the VVol HCL. Right now there are only 5 vendors listed with that support. Again, check with your vendor to see where they are at with this.

Array Based Replication

Support for array based replication of VVols is new in vSphere 6.5 as part of the VASA 3.0 specification. On day 1 of the vSphere 6.5 launch not one vendor supported it. A bit later Nimble Storage showed up first to support it, and HPE recently announced it for 3PAR, which should be showing up on the HCL any day now. Having seen first hand the amount of engineering effort it takes to support VVols replication, I can understand why there are so few vendors that support it today, but I expect you will see more show up over time.

| Vendor | Array Models | Protocols | vSphere Version | VASA Provider Type | VASA Provider HA | Multi vCenter Servers | Array Based Replication |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Atlantis Computing | USX | NAS | 6.0 | Internal | No | No | No |
| DataCore Software | SANsymphony | FC, iSCSI | 6.0 | External (physical or VM) | No | No | No |
| Dell | Equalogic | iSCSI | 6.0 | External (VSM VM appliance) | No | No | No |
| EMC | VMAX, Unity | FC (VMAX, Unity), iSCSI (Unity), NAS (Unity) | 6.0 (VMAX, Unity), 6.5 (Unity) | Internal (Unity), External (VMAX) (VM appliance) | No | No | No |
| Fujitsu | Eternus | FC, FCoE, iSCSI | 6.0, 6.5 | External (physical or VM) | No | Yes | No |
| Hewlett Packard Enterprise | 3PAR StoreServ, XP7 | FC, iSCSI | 6.0, 6.5 | Internal (3PAR), External (XP7) | N/A (3PAR), No (XP7) | Yes (3PAR), No (XP7) | Yes (3PAR), No (XP7) |
| Hitachi Data Systems | VSP | FC, iSCSI, NAS | 6.0, 6.5 | External (VM appliance) | No | No | No |
| Huawei Technologies | OceanStor | FC, iSCSI | 6.0, 6.5 | External (VM appliance) | No | No | No |
| IBM | XIV, SVC | FC, iSCSI, SVD | 6.0 (XIV, SVC), 6.5 (XIV) | External (IBM Storage Integration Server) | Yes (active-passive) | Yes | No |
| NEC | iStorage | FC, iSCSI | 6.0, 6.5 | External (Windows app) | No | No | No |
| NetApp | FAS | FC, iSCSI, NAS | 6.0 | External (VM appliance) | No | No | No |
| NexGen Storage/Pivot3 | N5 | iSCSI | 6.0 | Internal | Yes (active-active) | No | No |
| Nimble Storage | CS, AF | FC, iSCSI | 6.0, 6.5 | Internal | N/A | Yes | Yes |
| Primary Data | DataSphere | NAS | 6.0 | Internal | No | No | No |
| SANBlaze Technology | VirtuaLUN | FC, iSCSI | 6.0 | External (Linux app) | Yes (active-active) | Yes | No |
| SolidFire | SF | iSCSI | 6.0 | Internal | No | No | No |
| Tintri | T | NAS | 6.0, 6.5 | External | No | No | No |
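The table above is easier to cross-check once it is in a structured form. The sketch below encodes a condensed version of it in Python and recomputes the vendor counts quoted earlier in this post. Per-model qualifiers such as “Yes (3PAR), No (XP7)” are folded into a single yes/no per vendor and N/A is recorded as no, which is a simplification; the field names are my own and are not HCL terminology.

```python
from typing import NamedTuple

class Vendor(NamedTuple):
    name: str
    vsphere_65: bool      # listed with vSphere 6.5 for at least one model
    vasa_ha: bool         # certified active-active/active-passive VASA Provider HA
    multi_vcenter: bool   # multiple vCenter Servers supported
    replication: bool     # array based replication of VVols

# Condensed from the table above ("Yes" for any model -> True, "N/A" -> False).
VENDORS = [
    Vendor("Atlantis Computing",         False, False, False, False),
    Vendor("DataCore Software",          False, False, False, False),
    Vendor("Dell",                       False, False, False, False),
    Vendor("EMC",                        True,  False, False, False),
    Vendor("Fujitsu",                    True,  False, True,  False),
    Vendor("Hewlett Packard Enterprise", True,  False, True,  True),
    Vendor("Hitachi Data Systems",       True,  False, False, False),
    Vendor("Huawei Technologies",        True,  False, False, False),
    Vendor("IBM",                        True,  True,  True,  False),
    Vendor("NEC",                        True,  False, False, False),
    Vendor("NetApp",                     False, False, False, False),
    Vendor("NexGen Storage/Pivot3",      False, True,  False, False),
    Vendor("Nimble Storage",             True,  False, True,  True),
    Vendor("Primary Data",               False, False, False, False),
    Vendor("SANBlaze Technology",        False, True,  True,  False),
    Vendor("SolidFire",                  False, False, False, False),
    Vendor("Tintri",                     True,  False, False, False),
]

def names(flag: str) -> list[str]:
    """Return the vendor names for which the given boolean field is True."""
    return [v.name for v in VENDORS if getattr(v, flag)]

print(len(VENDORS), "vendors in total")
print(len(names("vsphere_65")), "listed with vSphere 6.5")
print("VASA Provider HA:", ", ".join(names("vasa_ha")))
print(len(names("multi_vcenter")), "support multiple vCenter Servers")
print("Array based replication:", ", ".join(names("replication")))
```

Note that being listed with vSphere 6.5 and supporting replication are separate fields here, which mirrors the point made above that 6.5 certification does not by itself imply VASA 3.0 replication support.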

Mar 07 2017

The Top 100 VMware/virtualization people you MUST follow on Twitter – 2017 edition

John Troyer posted a list today of the Top 50 Overall VMware Influencers which is an algorithmic list that he created based on who is followed the most on Twitter by other “insiders.” I’m honored to be a part of that list and it reminded me that I had not updated my old list that I started in 2009 of my Top 100 VMware/virtualization people that you must follow on Twitter. The last time I updated that list was in 2014 so I went through it again, removed some people that don’t tweet that much anymore or whose tweets aren’t VMware related and also added some new people that I thought were worthy of being on the list.

Putting together these types of lists is always difficult. I try to research a bit to see who is fairly active on Twitter and tweets about VMware & virtualization stuff a good amount of the time. While John’s list is based more on analytics, mine is purely based on people I know and deal with. It is entirely possible I missed some people (I’m sure I did) that should be on the list. It wasn’t easy to limit it to 100 as there are tons of great people that tweet about VMware & virtualization, but I did the best I could. So I apologize in advance if I missed someone that I probably should have included.

So without further ado, click the image below to see my Twitter list of the Top 100 VMware/virtualization people you MUST follow on Twitter.

Also here are some additional lists on the 1st 25 people that I followed on Twitter and also the Top 25 & 50 vBloggers. Enjoy!

- Top 25 vBloggers from the vLaunchPad

- Top 50 vBloggers from the vLaunchPad

Mar 06 2017

Top vBlog 2017 starting soon, make sure your site is included

I’ll be kicking off Top vBlog 2017 very soon and my vLaunchPad website is the source for the blogs included in the Top vBlog voting each year, so please take a moment and make sure your blog is listed. Every year I get emails from bloggers after the voting starts wanting to be added, but once it starts it’s too late as it messes up the ballot. I’ve also archived a bunch of blogs that have not blogged in over a year in a special section. Those archived blogs still have good content so I haven’t removed them, but since they are not active they will not be on the Top vBlog ballot.

Once again this year blogs must have had at least 10 posts last year to be included on the ballot. Some additional changes this year include new instruments that will contribute to the voting scoring, including Google PageSpeed score, # of posts and private committee judging of your blog. Read more about these changes in this post.

So if you’re not listed on the vLaunchpad, here’s your last chance to get listed. Please use this form and give me your name, blog name, blog URL, twitter handle & RSS URL. I do have a number of listings from people that already filled out the form that I need to get added, the site should be updated in the next 2 weeks to reflect any additions or changes. I’ll post again once that is complete so you can verify that your site is listed. So hurry on up so the voting can begin, the nominations for voting categories will be opening up very soon.

And thank you once again to Turbonomic for sponsoring Top vBlog 2017, stay tuned!

Feb 23 2017

One more vendor now supports VVol replication

A few weeks ago I wrote on how to find out which storage vendors support the new VVols replication capability in vSphere 6.5. At that time there was only one vendor listed on the VMware VVols HCL as supporting it, Nimble Storage. There is now one more vendor that supports it: HPE, with their 3PAR StoreServ arrays and their upcoming 3PAR OS 3.3.1 release. You can read more about it in this post that I wrote, and you can also expect to see them listed in the HCL very soon. Which vendor will be next? If I had to guess I would say NetApp, as they are a VVol design partner as well and have historically been pretty quick with delivering VVol support.