There’s been a lot of confusion lately surrounding the UNMAP command, which is used to reclaim deleted space on storage arrays that use thin provisioning. The source of the confusion is VMware’s support for it, which has changed since the feature was first introduced in vSphere 5.0. As a result, I wanted to sum up everything about UNMAP to clear up any misconceptions.

So what exactly is UNMAP?

UNMAP is a SCSI command (not a vSphere 5 feature) that is used with thin provisioned storage arrays to reclaim space from disk blocks that were written to, after the data on those blocks has been deleted by an application or operating system. UNMAP is the mechanism used by the Space Reclamation feature in vSphere 5 to reclaim space left by deleted data. With thin provisioning, once data has been deleted the space is still allocated by the storage array, because the array is not aware that the data has been deleted, which results in inefficient space usage. UNMAP allows an application or OS to tell the storage array that the disk blocks contain deleted data so the array can un-allocate them, which reduces the amount of space allocated/in use on the array. This allows thin provisioning to clean up after itself and greatly increases the value and effectiveness of thin provisioning.

So why is UNMAP important?

In vSphere there are several storage operations that will result in data being deleted on a storage array. These operations include:

- Storage vMotion, when a VM is moved from one datastore to another

- VM snapshot deletion

- Virtual machine deletion

Of these operations, Storage vMotion has the biggest impact on the efficiency of thin provisioning because entire virtual disks are moved between datastores, which leaves behind a lot of wasted space on the source datastore that cannot be reclaimed without additional user intervention. The impact of Storage vMotion on thin provisioning prior to vSphere 5 was not significant because Storage vMotion was not a commonly used feature and was mainly used for planned maintenance on storage arrays. However, with the introduction of Storage DRS in vSphere 5, Storage vMotion will be a common occurrence as VMs can now be dynamically moved between datastores based on latency and capacity thresholds. This will have a big impact on thin provisioning because reclaiming the space consumed by VMs that have been moved becomes critical in order to maintain efficient allocation of thin provisioned storage capacity. Therefore UNMAP is important as it allows vSphere to inform the storage array when a Storage vMotion occurs or a VM or snapshot is deleted, so those blocks can quickly be reclaimed and thin provisioned LUNs can stay as thin as possible.

How does UNMAP work in vSphere?

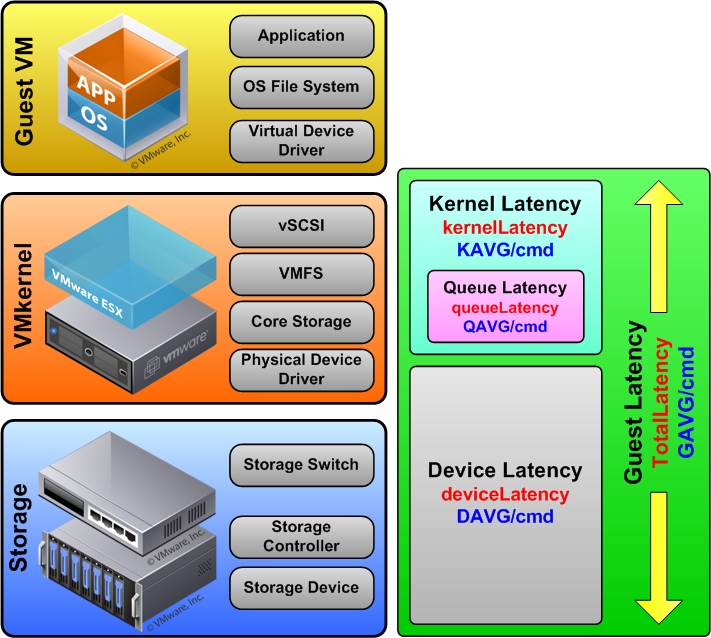

Prior to vSphere 5 and UNMAP when an operation occurred that deleted VM data from a VMFS volume, vSphere just looked up the inode on the VMFS file system and deleted the inode pointer which mapped to the blocks on disk. This shows the space as free on the file system but the disk blocks that were in use on the storage array are not touched and the array is not aware that they contain deleted data.

In vSphere 5 with UNMAP, the process is more elaborate from a storage integration point of view when those operations occur. The inodes are still looked up like before, but instead of just removing the inode pointers, a list of the logical block addresses (LBAs) must be obtained. The LBA list contains the locations of all the disk blocks on the storage array that the inode pointers map to. Once vSphere has the LBA list, it can start sending SCSI UNMAP commands for each range of disk blocks to free up the space on the storage array. Once the array acknowledges an UNMAP, the process repeats as it loops through the whole range of LBAs to UNMAP.
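To make the "range of disk blocks" idea concrete, here is a minimal sketch that collapses a sorted list of block addresses into contiguous ranges, each expressed as a starting LBA and a block count (the same two fields an UNMAP block descriptor carries). The function name `lba_ranges` and the sample addresses are made up for illustration; this is not how ESXi implements it internally, just a way to picture the loop.

```shell
# Collapse a sorted list of block numbers into "start count" ranges,
# one range per line. Each printed range stands in for one UNMAP request.
# (Hypothetical helper for illustration only.)
lba_ranges() {
    start=""
    prev=""
    for lba in "$@"; do
        if [ -z "$start" ]; then
            # First block seen: open a new range
            start=$lba
        elif [ "$lba" -ne $((prev + 1)) ]; then
            # Gap found: emit the finished range and open a new one
            echo "$start $((prev - start + 1))"
            start=$lba
        fi
        prev=$lba
    done
    # Emit the last open range, if any
    if [ -n "$start" ]; then
        echo "$start $((prev - start + 1))"
    fi
}

# Example: six freed blocks become three ranges
lba_ranges 100 101 102 200 201 500
```

The fewer and larger the ranges, the fewer round trips to the array, which matters given that each request has to be acknowledged before the loop moves on.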

So what is the issue with UNMAP in vSphere?

ESXi 5.0 issues UNMAP commands for space reclamation in critical regions during several operations with the expectation that the operation would complete quickly. Due to varied UNMAP command completion response times from the storage devices, the UNMAP operation can result in poor performance of the system which is why VMware is recommending that it be disabled on ESXi 5.0 hosts. The implementation and response times for the UNMAP operation may vary significantly among storage arrays. The delay in response time to the UNMAP operation can interfere with operations such as Storage vMotion and Virtual Machine Snapshot consolidation and can cause those operations to experience timeouts as they are forced to wait for the storage array to respond.

How can UNMAP be disabled?

In vSphere 5.0, support for UNMAP is enabled by default but can be disabled via the command line interface; there is currently no way to disable it using the vSphere Client. Esxcli is a multi-purpose command that can be run from ESXi Tech Support Mode, the vSphere Management Assistant (vMA) or the vSphere CLI. Setting the EnableBlockDelete parameter to 0 disables UNMAP functionality; setting it to 1 (the default) enables it. The syntax for disabling UNMAP functionality is shown below. Note the reference to VMFS3: this is just the parameter category and is the same regardless of whether you are using VMFS3 or VMFS5.

- esxcli system settings advanced set --int-value 0 --option /VMFS3/EnableBlockDelete

or you can use:

- esxcfg-advcfg -s 0 /VMFS3/EnableBlockDelete

This command must be run individually on each ESXi host to disable UNMAP support. You can check the status of this parameter on a host by using the following command.

- esxcfg-advcfg -g /VMFS3/EnableBlockDelete
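If you want to use that check inside a script, a small sketch like the following could turn the command output into a simple state. It assumes the command prints a line of the form "Value of EnableBlockDelete is 1"; the helper names `block_delete_value` and `unmap_state` are made up for this example.

```shell
# Extract the trailing number from a line such as
# "Value of EnableBlockDelete is 1" (assumed output format).
block_delete_value() {
    awk '{ print $NF }'
}

# Translate the parameter value into a readable state.
unmap_state() {
    case "$1" in
        0) echo "disabled" ;;
        1) echo "enabled" ;;
        *) echo "unknown" ;;
    esac
}

# On an ESXi host this could be used as:
#   unmap_state "$(esxcfg-advcfg -g /VMFS3/EnableBlockDelete | block_delete_value)"
```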

It is possible to use a small shell script to automate the process of disabling UNMAP support to make it easier to update many hosts. A pre-built script to do this can be downloaded from here. Note changing this parameter takes effect immediately; there is no need to reboot the host for it to take effect. Without disabling the UNMAP feature, you may experience timeouts with operations such as Storage vMotion and VM snapshot deletion.
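As a rough idea of what such a script might look like (this is a sketch, not the pre-built script mentioned above), the following loops over a list of hosts and runs the disable command on each one over SSH. It assumes SSH access is enabled on the hosts and that you log in as root; the function name `disable_unmap`, the `DRY_RUN` variable and the host names are hypothetical. By default it only prints the commands so you can review them first.

```shell
# Hypothetical helper: disable UNMAP on every host passed as an argument.
# Defaults to a dry run that only prints the commands; set DRY_RUN=0 to
# actually execute them (assumes SSH is enabled on each ESXi host).
disable_unmap() {
    for host in "$@"; do
        cmd="ssh root@$host esxcfg-advcfg -s 0 /VMFS3/EnableBlockDelete"
        if [ "${DRY_RUN:-1}" = "0" ]; then
            # Run the command and report, but do not stop, on failure
            $cmd || echo "failed on $host" >&2
        else
            echo "$cmd"
        fi
    done
}

# Example (host names are placeholders):
#   disable_unmap esx01 esx02 esx03
#   DRY_RUN=0 disable_unmap esx01 esx02 esx03
```

Printing first and executing second is a deliberate choice here, since the change takes effect immediately on every host it touches.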

How did UNMAP change in vSphere 5.0 Update 1?

Soon after vSphere 5.0 was released, VMware recalled the UNMAP feature and issued a KB article stating it was not supported and should be disabled via the advanced setting. With vSphere 5.0 Update 1 they disabled UNMAP by default, but they went one step further: if you try to enable it via the advanced setting, it will still remain disabled. So there is no way to do automatic space reclamation in vSphere at the moment. What VMware did instead was introduce a manual reclamation method by adding a parameter to the vmkfstools CLI command. The syntax for this is as follows:

- vmkfstools -y <percent of free space>

The parameter is the percentage of the datastore’s free space to reclaim, and the command is run against an individual VMFS volume. It works by creating a virtual disk of that size, so if a datastore has 800GB of free space left and you specify 50, it will create a virtual disk 400GB in size, then delete it and UNMAP the space. This manual method of UNMAP has a few issues though. First, it’s not very efficient: it creates a virtual disk without any regard for whether the blocks are already reclaimed or unused. Second, it’s a very I/O intensive process and it is not recommended to run it during peak workload times. Third, it’s a manual process that you can’t really schedule within vCenter Server; there are some scripting methods to get it to run, but it’s a bit of a chore. Finally, since it’s a CLI command, there is no way to really monitor it or log what occurs.
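Before running the command it can help to work out how large the temporary virtual disk will be, since the datastore needs to tolerate losing that much free space while the reclaim runs. A minimal sketch of the arithmetic, using a made-up helper name `reclaim_size_gb` and integer math:

```shell
# Size in GB of the temporary virtual disk for a given amount of free
# space (GB) and the percentage passed to vmkfstools -y.
# (Hypothetical helper; integer arithmetic, so results are rounded down.)
reclaim_size_gb() {
    free_gb=$1
    percent=$2
    echo $(( free_gb * percent / 100 ))
}

# The example from the text: 800GB free, 50 percent
reclaim_size_gb 800 50

# The reclaim itself is typically run from inside the datastore directory,
# for example (the datastore name is a placeholder):
#   cd /vmfs/volumes/<datastore> && vmkfstools -y 50
```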

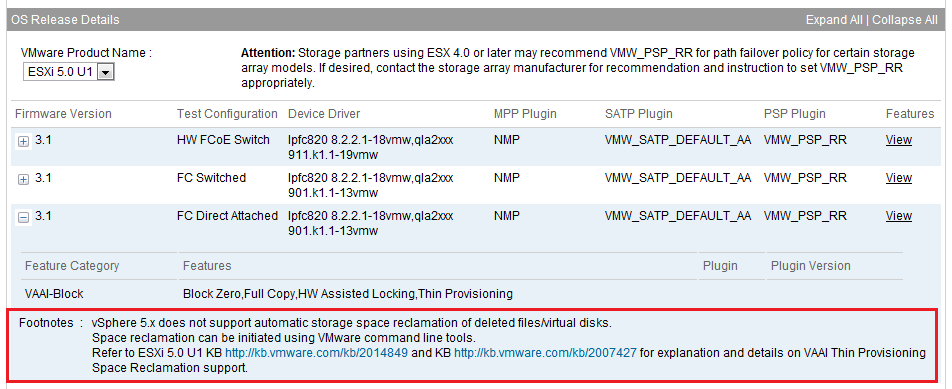

Another thing that VMware did in vSphere 5.0 Update 1 was invalidate all of the testing that was done with UNMAP for the Hardware Compatibility Guide. They required vendors to re-test with the manual CLI method and added a footnote to the HCG that said automatic reclamation is not supported and space can be reclaimed via command line tools as shown below.

The manual CLI method of using UNMAP is not ideal. It works, but it’s a bit of a kludge, and until VMware introduces automatic support again there is not a lot of value in using it because of the limitations.

When will VMware support automatic reclamation again?

Only time will tell, hopefully the next major release will enable it again. This was quite a setback for VMware and there was no easy fix so it may take a while before VMware has perfected it. Once it is back it will be a great feature to utilize as it really makes thin provisioning at the storage array level very efficient.

Where can I find out more about UNMAP?

Below are some useful links about UNMAP:

- Disabling VAAI Thin Provisioning Block Space Reclamation (UNMAP) in ESXi 5.0 (VMware KB)

- vStorage APIs for Array Integration FAQ (VMware KB)

- Using vmkfstools to reclaim VMFS deleted blocks on thin-provisioned LUNs (VMware KB)

- VAAI Thin Provisioning Block Reclaim/UNMAP Issue (vSphere Blog)

- VAAI Thin Provisioning Block Reclaim/UNMAP is back in 5.0U1 (vSphere Blog)

- VAAI Thin Provisioning Block Reclaim/UNMAP In Action (vSphere Blog)