Infinio has just released version 3.0 of its Accelerator product, which uses server-side host caching to accelerate storage operations. This is a milestone release for Infinio because it now leverages the vSphere APIs for I/O Filtering (VAIO), which were introduced in vSphere 6. VAIO is to storage I/O much as the VMsafe APIs introduced years ago were to host networking traffic: it allows 3rd party products to sit directly inline with storage I/O via VMkernel interfaces instead of trying to intercept I/O through bolt-on external interfaces. Infinio Accelerator 3.0 also has some other great new features, but let’s first start with a deep dive on VAIO so you can better understand why this is a big deal.

The vSphere APIs for I/O Filtering were announced at VMworld in 2014 and later quietly introduced in vSphere 6.0 Update 1. I say quietly because you probably didn’t hear a lot of noise from VMware about it; it’s more of an under-the-covers enabler for 3rd party vendors than a flashy new vSphere feature. Don’t take that quiet introduction as an indicator of its importance, though: VAIO is a big deal and a powerful enabler for any product that interacts with storage I/O in vSphere.

To give you a better understanding of what VAIO is all about, I’m going to summarize a post that VMware published last year that does a good job of explaining it. As I mentioned, VAIO is not really a feature; it’s an API framework built into vSphere, similar to other storage APIs such as VAAI, VASA and VADP, that allows 3rd party applications to interact with the storage I/O stream at the VM level in a certified and integrated manner. Prior to VAIO, vendors had to get creative with how they tapped into storage I/O, for example by placing a virtual appliance inline in the storage I/O path. Because VAIO is integrated into vSphere, filters can also be managed and applied via the vSphere Storage Policy Based Management (SPBM) engine that is used with VSAN and VVols.

The benefits are more efficient interaction with I/O, a simpler and standardized way for vendors to interact with I/O, and easier overall management. The real advantage of VAIO is for applications that interact with I/O close to the host and its VMs, which includes use cases such as host-based caching and replication applications that need to work as close as possible to the source of the I/O. For applications and hardware that operate near the far end of the I/O stream, essentially SAN and NAS devices, there is less of an advantage and few use cases, as they are much more distant from where the filtering occurs.

Additional use cases include any type of security application, such as malware scanners that need to scan I/O in real time. An application that needs to encrypt data would be another good use case for VAIO. Essentially, think of it like this: any application that needs to stop and look at each I/O as it leaves or enters a VM and then do something with that I/O (encrypt, replicate, scan, cache) is a good use case for VAIO. It’s important to note, though, that with the first release of VAIO (ESXi 6.0 U1) only caching and replication use cases are officially supported. VMware will probably certify other use cases in future vSphere releases.

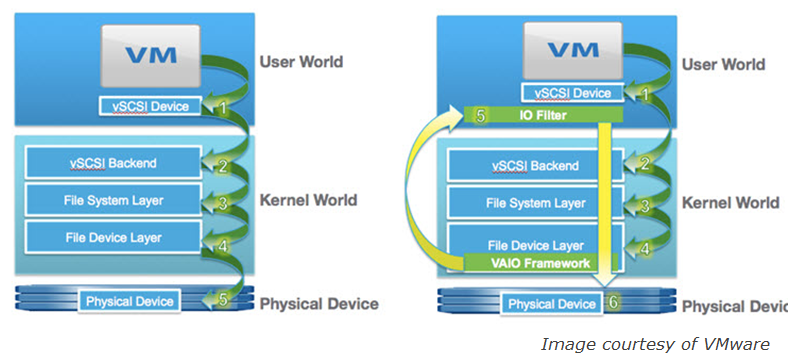

So let’s now take a closer look at where the I/O filtering occurs with VAIO. Normally, storage I/O initiates at the VM’s virtual SCSI device (User World) and then makes its way through the VMkernel before heading on to the physical I/O adapter and the physical storage device. With VAIO, the filtering is done close to the VM in the User World, with the rest of the VAIO framework residing in the VMkernel. In the figure above, the normal I/O path without VAIO is on the left and the path with VAIO is on the right.

When an I/O goes through the filter, an application can take one of several actions on it: fail, pass, complete or defer. The action taken depends on the application’s use case. A replication application may defer I/O to another device, while a caching application that already has a read request cached would complete the request itself instead of sending it on to the storage device.
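To make the four per-I/O outcomes concrete, here is a minimal Python sketch of how a read-caching filter might choose among them. It is a toy model under stated assumptions, not the VAIO SDK: `FilterAction`, `IORequest`, `ToyCacheFilter`, `on_request` and `on_completion` are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Dict


class FilterAction(Enum):
    """The four per-I/O outcomes named in the text (hypothetical Python names)."""
    PASS = auto()      # hand the I/O on toward the storage device
    COMPLETE = auto()  # satisfy the I/O inside the filter (e.g. a cache hit)
    DEFER = auto()     # hold the I/O and finish it later (e.g. a replication filter)
    FAIL = auto()      # reject the I/O with an error


@dataclass
class IORequest:
    op: str          # "read" or "write"
    block: int       # logical block address
    data: bytes = b""


class ToyCacheFilter:
    """Hypothetical read cache; illustrates the decision, not a real VAIO filter."""

    def __init__(self) -> None:
        self.cache: Dict[int, bytes] = {}

    def on_request(self, io: IORequest) -> FilterAction:
        if io.op not in ("read", "write"):
            return FilterAction.FAIL          # unsupported operation
        if io.op == "read" and io.block in self.cache:
            io.data = self.cache[io.block]
            return FilterAction.COMPLETE      # cache hit: answered locally
        return FilterAction.PASS              # read miss or write: send on to storage

    def on_completion(self, io: IORequest) -> None:
        # Assumed hook, called once storage has finished the I/O:
        # remember the block's data so the next read can be completed locally.
        self.cache[io.block] = io.data
```

A first read of a block returns `PASS`; after `on_completion` records its data, a second read of the same block returns `COMPLETE` with the cached bytes. This toy cache never uses `DEFER`, which in the text belongs to the replication use case.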


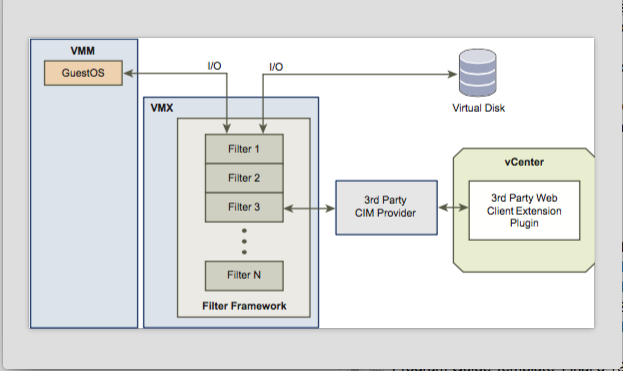

There are currently two classes of filters (caching and replication), and more than one VAIO filter can be active simultaneously. Filters are executed in class order, so an I/O may hit a replication filter before it hits a cache filter; once an I/O has made it through all the filters, it continues on to its destination, be it the VM or a storage device. The diagram above from VMware illustrates the overall VAIO architecture and I/O path.
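The class-ordered chain can be sketched as a small Python function. The rank table is an assumption: it follows the text's example of a replication filter being hit before a cache filter, but the exact VAIO ordering rules are not spelled out here. `CLASS_ORDER`, `run_chain` and the filter names are hypothetical.

```python
from typing import Callable, List, Tuple

# Assumed class ranks (lower runs first), following the text's example that an
# I/O may hit a replication filter before it hits a cache filter.
CLASS_ORDER = {"replication": 0, "cache": 1}

# (filter name, filter class, handler returning "pass" or "complete")
Filter = Tuple[str, str, Callable[[str], str]]


def run_chain(filters: List[Filter], io: str) -> Tuple[List[str], bool]:
    """Send one I/O through the active filters in class order.

    Returns the names of the filters it visited and whether it went on to
    the storage device (False if some filter completed it first).
    """
    visited: List[str] = []
    for name, klass, handler in sorted(filters, key=lambda f: CLASS_ORDER[f[1]]):
        visited.append(name)
        if handler(io) == "complete":
            return visited, False  # completed here; storage is never touched
    return visited, True           # passed every filter: continue to storage


chain: List[Filter] = [
    ("cache-filter", "cache", lambda io: "complete" if io == "hot-read" else "pass"),
    ("replication-filter", "replication", lambda io: "pass"),
]
```

Even though the cache filter is listed first, sorting by class sends both I/Os through the replication filter first; only the `"cold-read"` continues on to storage.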

VAIO is a perfect match for applications like Infinio Accelerator that are host-based and need to see every I/O to perform caching. The important role Infinio Accelerator plays is to prevent I/O from having to travel to a destination storage device, which takes time, especially if it’s a remote storage device such as a SAN or NAS. A single I/O can take 5-10ms to make that journey, or even longer if the device is very busy, so being able to cancel that journey and serve the I/O from local cache instead can greatly improve performance. With VAIO, Infinio Accelerator’s job is much easier: everything is integrated into the hypervisor, and I/O can be filtered faster because the filtering occurs even closer to the VM.
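A quick way to see why skipping that 5-10ms journey matters is the usual weighted-average latency model: with hit ratio h, average latency = h × cache latency + (1 − h) × storage latency. The sketch below is a simplification that ignores queuing and miss-handling overhead. The 80% hit ratio and 0.08 ms cache latency are illustrative assumptions, and 7.5 ms is simply the midpoint of the range above.

```python
def effective_latency_ms(hit_ratio: float, cache_ms: float, storage_ms: float) -> float:
    """Average I/O latency when a fraction `hit_ratio` is served from cache."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * storage_ms


# Assumed inputs: 80% of I/Os hit a 0.08 ms cache; the rest pay 7.5 ms at the array.
avg = effective_latency_ms(0.8, 0.08, 7.5)
print(f"average latency: {avg:.3f} ms")
```

Under these assumptions the average drops from 7.5 ms to about 1.56 ms. The uncached remainder dominates, so each extra point of hit ratio counts for far more than shaving the cache's own latency.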


The difference that VAIO makes is dramatic: in Infinio’s own testing, Infinio Accelerator with VAIO integration achieved 1,000,000 IOPS with 20GB/sec throughput and 80 μs response time. Infinio Accelerator can improve overall storage performance and has key use cases with I/O-intensive workloads such as enterprise database applications and virtual desktops (VDI).
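As a sanity check on those numbers, two bits of arithmetic follow directly from them. This assumes all three figures describe the same test run and that "GB" means decimal gigabytes; neither is stated. Throughput divided by IOPS gives the average I/O size, and Little's law (in-flight I/Os = IOPS × response time) gives the implied concurrency.

```python
IOPS = 1_000_000
THROUGHPUT_BYTES_PER_S = 20 * 10**9  # "20GB/sec", assumed to mean decimal GB
RESPONSE_TIME_US = 80                # "80 μs"

# Average transfer size per I/O: bytes per second divided by I/Os per second.
avg_io_size_bytes = THROUGHPUT_BYTES_PER_S / IOPS

# Little's law: average outstanding I/Os = arrival rate x time in system.
in_flight_ios = IOPS * RESPONSE_TIME_US / 1_000_000

print(f"average I/O size: {avg_io_size_bytes:,.0f} bytes")
print(f"average I/Os in flight: {in_flight_ios:.0f}")
```

That works out to roughly 20 KB per I/O with about 80 I/Os outstanding at any moment. This is a useful reference point when comparing the result against your own workload's block sizes and queue depths.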

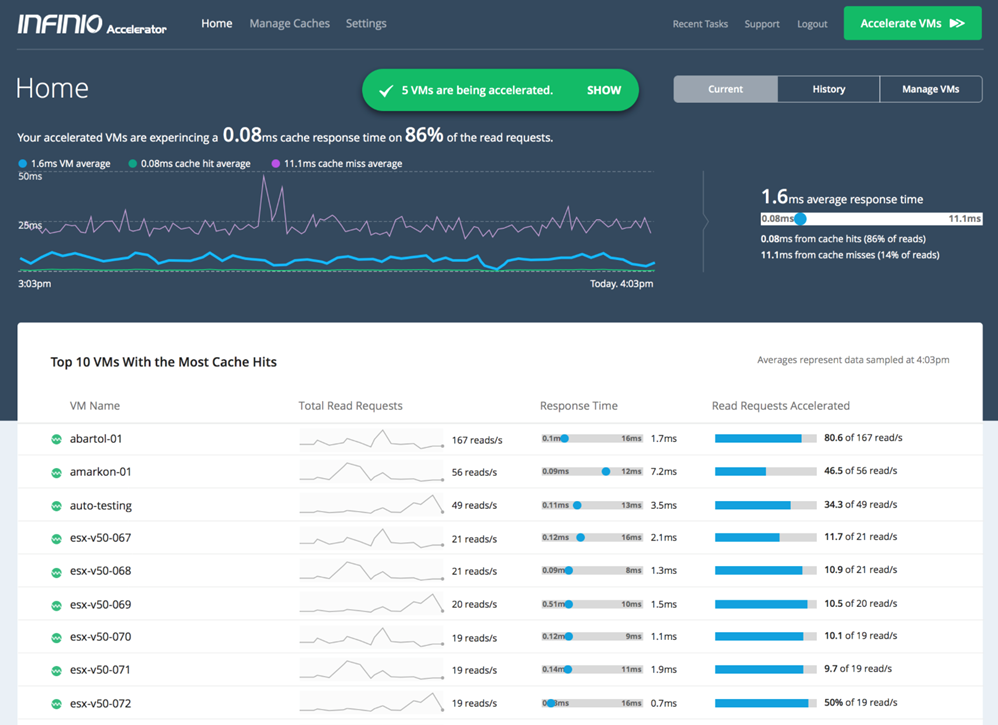

In addition to VAIO support, Infinio Accelerator 3.0 introduces support for SSD and flash devices, which add a caching tier for colder cached data. Infinio Accelerator’s strength has always been its use of lightning-fast host RAM as a caching tier; support for SSD and flash devices lets it extend caching even further, for greater efficiency, by moving colder data to persistent storage rather than expiring it. Infinio Accelerator supports the latest vSphere release (6.0 U2), is certified through the VMware Ready program, and supports any storage that vSphere supports, including SAN, NAS, DAS, VVols and vSAN. The screenshot above shows Infinio Accelerator in action.
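The idea of demoting colder data instead of expiring it can be illustrated with a toy two-tier LRU cache in Python. This is an illustrative assumption, not Infinio's actual placement algorithm. `TwoTierCache` is a hypothetical class, capacities are counted in blocks, and each block lives in exactly one tier at a time.

```python
from collections import OrderedDict
from typing import Optional


class TwoTierCache:
    """Toy RAM + SSD cache: RAM evictions are demoted to SSD instead of dropped.

    Both tiers use plain LRU ordering (oldest entry first); this policy is an
    assumption made for illustration.
    """

    def __init__(self, ram_blocks: int, ssd_blocks: int) -> None:
        self.ram: "OrderedDict[int, bytes]" = OrderedDict()
        self.ssd: "OrderedDict[int, bytes]" = OrderedDict()
        self.ram_blocks = ram_blocks
        self.ssd_blocks = ssd_blocks

    def get(self, block: int) -> Optional[bytes]:
        if block in self.ram:
            self.ram.move_to_end(block)  # refresh recency in the RAM tier
            return self.ram[block]
        if block in self.ssd:
            data = self.ssd.pop(block)   # warm again: promote back to RAM
            self.put(block, data)
            return data
        return None                      # miss in both tiers: go to storage

    def put(self, block: int, data: bytes) -> None:
        self.ssd.pop(block, None)        # keep a single copy of each block
        self.ram[block] = data
        self.ram.move_to_end(block)
        while len(self.ram) > self.ram_blocks:
            cold_block, cold_data = self.ram.popitem(last=False)  # LRU in RAM
            self.ssd[cold_block] = cold_data                      # demote, don't expire
        while len(self.ssd) > self.ssd_blocks:
            self.ssd.popitem(last=False)  # only now does data leave the cache
```

With room for two blocks in each tier, writing blocks 1, 2 and 3 leaves 2 and 3 in RAM and demotes 1 to SSD. A later read of block 1 is still a hit, served from the SSD tier and promoted back to RAM.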

If you haven’t seen what Infinio can do for you, I encourage you to give it a try: they offer a free, fully functional 30-day trial that installs quickly without any disruption to your current environment. They also have a recorded product demo in which you can see the product in action. Below are some links for more information on both VAIO and Infinio Accelerator.


Infinio Accelerator links

- Running vSphere 6? Why you should care about VAIO – blog post

- Infinio Accelerator 3.0 brings exceptional performance to VMware-based environments – press release

- VAIO Primer: Part I “What is VAIO?” – blog post

- CEO Reflections on our Latest Release: The more things change, the more they stay the same – blog post

- Infinio Accelerator – datasheet

- Infinio Accelerator Product Overview – white paper

VAIO links

- vSphere APIs for IO Filtering – Virtual Blocks blog post

- vSphere APIs for I/O Filtering (VAIO) Program – VMware Developer Center

- vSphere 6.0 Storage – documentation