Author's posts

Feb 28 2010

The importance of the Hardware Compatibility List

VMware publishes a list of all server hardware that is supported with vSphere, which includes servers, I/O adapters, and storage and SAN devices. This list is continually updated and is most commonly referred to as the Hardware Compatibility List (HCL), although VMware renamed it a while back to the VMware Compatibility Guide. The guide used to be published only as PDF files that you had to read through to see if your hardware was listed, but it is now available as an interactive web page that is searchable and filterable. The guide lists hardware that is supported by vSphere, but if hardware is not listed it does not mean it will not work with vSphere. In many cases the hardware will still work, but because it is not listed VMware may not provide support if you have problems with it. Servers and storage devices are the two areas where hardware most commonly works with vSphere despite not being listed in the guide. I/O devices like network adapters and storage controllers, though, are less likely to work if they are not listed because they rely on drivers loaded in the VMkernel to work properly. If the driver for your device is not one of the limited set loaded in the VMkernel, then your device is not going to work.

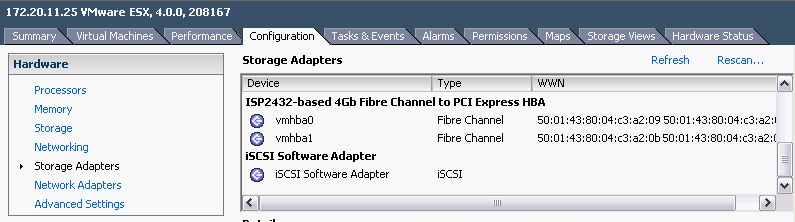

I don’t go through the guide that often, as I assume that new mainstream hardware from large vendors like HP will always work and be supported. However, last week that assumption bit me. We had just received some new hardware from HP, which included a DL385 G6 server and an MSA G2 iSCSI storage array. I wanted to use hardware iSCSI initiators with TCP/IP Offload Engines (TOE) due to the high CPU and I/O demands of the applications running on that server, so I initially ordered the only TOE card HP had available, the NC380T. After we placed the order we were informed that the card was no longer available and had been replaced by the newer NC382T. After receiving everything, assembling it and loading ESX on it, I found that the NC382T was not listed under Storage Adapters in the vSphere Client, which is where TOE cards should appear, since vSphere does not treat them as network adapters. Only my SATA adapter, the P410 Smart Array controller for local storage, the Fibre Channel adapters and the iSCSI software initiator were showing up under Storage Adapters.

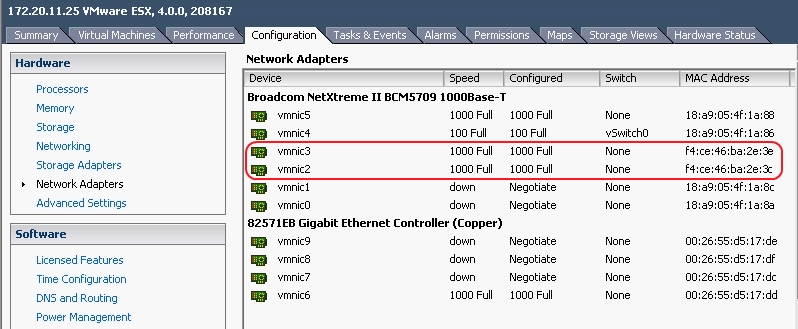

The NC382T was showing up under Network Adapters instead and could not be used as a hardware initiator.

So this new TOE card from HP that we bought to use as a hardware initiator could not be used for that purpose. After discovering this I checked VMware’s hardware guide to see which HP iSCSI adapters were listed. Much to my surprise there was only one HP model listed, and after looking up that adapter on HP’s website I found that it was for blade systems only and was therefore of no use for my server.

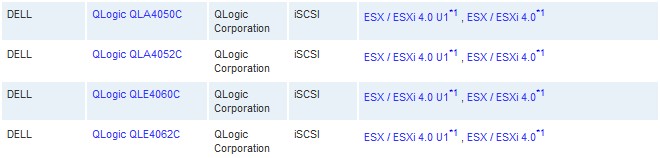

Now, HP OEMs many of its storage adapters, many of which are made by QLogic and Emulex. Most of the other vendors listed in the guide for iSCSI adapters had many QLogic adapters re-branded under their name, but not HP.

After confirming with HP that the blade adapter was the only one that they OEM’d, I was forced to get the QLogic-branded adapter instead. The card that seemed to be the most popular was the QLE4062C, so we now have one of those on order and may end up just using the NC382T as a regular NIC.

So the moral of this story is to always check the Compatibility Guide, especially when ordering I/O adapters, so you don’t end up with hardware that you can’t use with vSphere. If you want to know more about the guide, such as how hardware gets added to or removed from it and VMware’s support policy, check out this blog post that I did a while ago on it.
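A check like this is easy to script before an order goes out. Below is a minimal Python sketch, assuming you keep a hand-maintained set of (category, model) pairs copied from a Compatibility Guide search. The function name, the category labels and the sample entries are all hypothetical and only mirror the adapters from this story; this is not an export of the guide or a VMware tool.

```python
# Sketch: flag ordered parts that are missing from a locally kept copy of
# Compatibility Guide search results. All names and data are illustrative.

# Categories where an unlisted part is unlikely to work, because the device
# depends on a driver loaded in the VMkernel.
DRIVER_DEPENDENT = {"iscsi_adapter", "network_adapter", "storage_controller"}


def check_order(order, listed):
    """Return (model, severity) for every ordered part not found in `listed`.

    order  -- list of (category, model) tuples you plan to buy
    listed -- set of (category, model) tuples copied from the guide
    """
    findings = []
    for category, model in order:
        if (category, model) in listed:
            continue
        # Unlisted servers and storage often still work but may be
        # unsupported; unlisted I/O adapters probably will not work at all.
        if category in DRIVER_DEPENDENT:
            severity = "likely unusable"
        else:
            severity = "may be unsupported"
        findings.append((model, severity))
    return findings


if __name__ == "__main__":
    # Assumed sample data based on the story above.
    listed = {("iscsi_adapter", "QLE4062C")}
    order = [("iscsi_adapter", "NC382T"), ("iscsi_adapter", "QLE4062C")]
    for model, severity in check_order(order, listed):
        print(f"{model}: not listed ({severity})")
```

Keeping the listed set per category matters here, because a card can be listed as a network adapter and still be missing from the iSCSI adapter list.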

Feb 17 2010

Need vSphere training? There’s an app for that…

I received a copy of Train Signal’s new vSphere Pro Series Vol. 1, which includes 3 modules on VMware View/ThinApp, Cisco Nexus 1000V and PowerCLI. The new Pro series is aimed at users who want more than the basics and want to learn about some of the advanced features in vSphere. I particularly like this first volume because it covers 3 areas I’m not very strong in and would like to learn more about. David Davis is the instructor for the View & ThinApp module, Rick Scherer for the Cisco Nexus 1000V module and Hal Rottenberg for the PowerCLI module. All three are experienced and knowledgeable vSphere veterans and very qualified to teach their subjects. So head on over to Train Signal’s website and check it out. It’s regularly $599 but is available for a limited time for half price ($297), and you can also check out a demo of it here.

Feb 16 2010

Interesting blog

Thought I would share a blog that I’ve been reading for quite some time now that has some great information in it. I found it by accident while searching for information once. It’s VMware’s Technical Account Manager (TAM) blog, where they publish newsletters roughly every week with lots of information for VMware’s many TAMs. If you’re a blogger it’s not unusual to find links to some of your posts in these newsletters, as VMware uses them to help keep their TAMs informed of all the latest tips and tricks. So head on over there and give it a read.

Feb 16 2010

All good things must come to an end

Just a reminder that VMware will remove older versions of VI3 from their download site and only allow you to download the very latest versions beginning in May. So if you don’t already have them downloaded, get them while you can. I have links to all the download locations for the older versions here. Sometimes it’s nice to have older versions to play around with.

Also, VI3 will switch to Extended Support in May of this year, just shy of its 4-year anniversary. Here’s VMware’s definition of it:

New hardware platforms are no longer supported, new guest OS updates may or may not be applied, and bug fixes are limited to critical issues. Critical bugs are deviations from specified product functionality that cause data corruption, data loss, system crash, or significant customer application down time and there is no work-around that can be implemented.

VI3 has been rock solid since its release and the switch to Extended Support is no big deal for those still using it. Extended Support lasts for another 3 years, and you can read the details here.

Here’s the official announcement:

Announcement: End of availability for VI

Effective May 2010, VMware will remove all but the most recent versions of our Virtual Infrastructure product binaries from our download web site. As of this date, these Virtual Infrastructure products will have reached end of general support according to the published support policy. The downloadable products removed will include both ESX and Virtual Center releases. For reference, the VMware VI3 support policy can be viewed at this location: www.vmware.com/support/policies/lifecycle/vi.

By removing older releases, VMware is establishing a long-term sustainable product maintenance line for older ESX product releases which have transitioned into the Extended Support life cycle phase. This enables us to baseline all patches and critical fixes against these baselines. This translates to faster customer turn-around and greater product stability during the extended support phase.

Virtual Infrastructure products being removed by May 2010:

- ESX 3.5 versions 3.5 GA, Update 1, Update 2, Update 3 and Update 4

- ESX 3.0 versions 3.0 GA, 3.0.1, 3.0.2 and 3.0.3

- ESX 2.x versions 2.5.0 GA, 2.5.1, 2.5.2, 2.1.3, 2.5.3, 2.0.2, 2.1.2 and 2.5.4

- Virtual Center 2.5 GA, 2.5 Update 1, 2.5 Update 2, 2.5 Update 3, 2.5 Update 4 and 2.5 Update 5

- Virtual Center 2.0

Virtual Infrastructure products remaining for Extended Support:

These versions will be the baseline for ongoing support during the Extended Support phase. All subsequent patches issued will be based solely upon the releases below.

- ESX 3.5 Update 5 will remain throughout the duration of Extended Support

- ESX 3.0.3 Update 1 will remain throughout the duration of Extended Support

- Virtual Center 2.5 Update 6 expected in early 2010

Customers may stay at a prior version, however VMware’s patch release program during Extended Support will be continued with the condition that all subsequent patches will be based on the latest baseline. In some cases where there are release dependencies, prior update content may be included with patches.

http://www.vmware.com/support/policies/lifecycle/vi/faq.html

Feb 10 2010

New storage toys and new storage woes

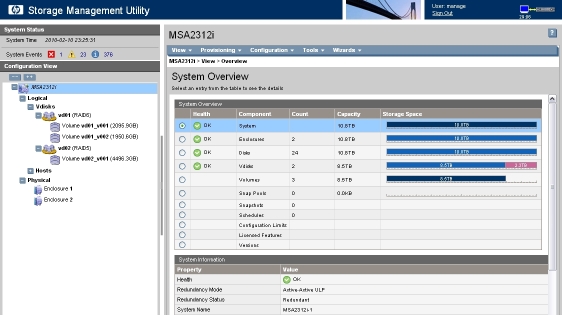

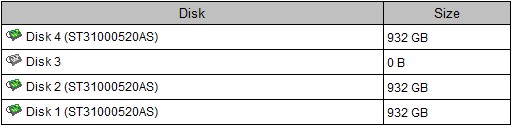

In the last week I’ve gotten some new storage devices, both at work and at home. Unfortunately I’ve experienced problems with both and it’s not been as fun a week as I would have liked. The new work storage device is an HP MSA-2312i, which is the iSCSI version of their Modular Storage Array line.

The new home storage device is an Iomega ix4-200d 4TB, which is a relatively low-cost network storage device that supports iSCSI, NFS and much more.

The MSA problems have all been firmware related; basically it kept getting stuck in a firmware upgrade loop. If you own one or plan on buying one, I would say don’t upgrade the firmware unless you have a reason to, and if you do, make sure you schedule downtime. I’ll be sharing some tips for upgrading the firmware on that unit later on.

The Iomega problems are presumably from a flaky hard drive. Not long after I plugged the unit in and started configuring it, I received a message that drive 3 was missing. After talking to support and rebuilding the RAID group, the problem briefly went away and then came right back. They graciously waived the $25 replacement fee (it’s brand new, they better!) but refused to expedite the shipping unless I paid $40 (again, it’s brand new, you would think they would want to make a new customer happy). Having a flaky drive in a brand new unit doesn’t exactly inspire confidence in storing critical data on the device, so I’ll have to see how it goes once the drive is replaced.

So look forward to some upcoming posts on using and configuring both devices. The MSA will be used as part of a Domino virtualization project and I’ll be doing performance testing on it in various configurations. The Iomega I’ll be using with VMware Workstation 7 on my home computer as both iSCSI & NFS datastores.

Feb 01 2010

Virtualizing Domino – Pt.1 – The Journey Begins

I started doing Lotus Domino server administration back in 1994 on version 3.0. About 5 years ago the company I work for decided to outsource it to a hosting provider. While it was outsourced I was still pretty involved with the management and administration of the environment. We recently decided to bring it back in-house for cost savings and because we have a DR site that we can leverage for business continuity. I was the project lead for transitioning the environment to the provider and I am again the project lead for bringing it back. That means I have to architect our environment, build it, plan and perform the migration and make sure everything works smoothly. Since we virtualized most of our environment several years ago, it’s only natural to also virtualize the new Domino environment. This series of blog posts will cover the many steps of the project and will focus on the virtualization aspect of it. The project will likely take about 3-4 months to complete and right now I’m at the very beginning stages. So I’ll begin with a recap of where the project is right now.

Planning & architecting

This is the most important phase and one you can’t afford to screw up. I chose to virtualize Domino, so it was necessary to make sure I had a virtual environment that could handle the taxing resource demands of Domino servers. Our environment has about 1,500 total users, which puts it somewhere between small and medium. We have many access methods, including LAN, WAN and internet, and a mix of Notes Client, web-based, Blackberry and other smartphone users. I planned for 2 clustered servers at our corporate HQ, 1 server in the DMZ for Notes Client passthru, Lotus Traveler and SMTP, 1 Blackberry Enterprise Server and 1 server at our DR warm site. Web access to email from the public internet was a requirement, so the challenge was finding a secure solution for that. I focused on 2 options: a hardware reverse proxy device in the DMZ, or a server running reverse proxy software. I looked at hardware solutions from F5 Networks, who make very nice hardware that is very customizable and integrates well with Domino, but unfortunately we didn’t have the budget for it. So the solution I chose was IBM WebSphere Secure Proxy Server running on a Windows server in the DMZ.

Next it was time to find hardware to use. I needed one new virtual host at corporate HQ, one at the DR site and more storage. We have a Hitachi AMS-500 SAN (2Gb Fibre Channel) that we could add storage to as an option, but this was expensive and didn’t get us much storage for our budget. Instead I looked at lower-cost iSCSI solutions, in particular the MSA line from HP, which is very affordable, easy to use and performs very well. My biggest concern, though, was making sure it had enough performance for the very intensive disk I/O demands of Domino. So I did some research, looking at IOPS numbers for the MSA and comparing them to Iometer tests that I ran inside a VM running on the AMS-500. I found that the MSA had equal or better performance compared to the AMS-500, so I chose that instead. We were able to obtain an iSCSI MSA with dual controllers and an extra shelf loaded with 450GB drives, for a total of about 10TB of raw storage, and were able to stay within our budget. For servers we went with HP DL385 G6 servers with AMD 6-core processors.

Now with Domino there are a couple of important hardware considerations that you should be aware of. First, Domino loves memory; it will use everything you give it, as it does a lot of caching. So having an adequate amount of memory is a must. Next is CPUs: Domino takes full advantage of the AMD RVI and Intel EPT technology in newer processors, so having this is a must. In fact this can make a huge difference in performance compared to processors without it. Finally, when it comes to virtualization, using vSphere was a must, as there have been numerous performance improvements in vSphere that are very beneficial to Domino. IBM has published some tech notes in the past about high CPU usage on virtualized Domino servers compared to physical ones with identical workloads. They point out that you should make sure you have suitable hardware and the proper architecture, but also seem to place the blame on the overhead of the virtualization layer. They go as far as to not recommend virtualizing larger Domino workloads. Well, the combination of newer CPU technologies and vSphere now makes that possible and allows almost any Domino workload to be successfully virtualized. So to summarize:

- Only use CPUs with AMD RVI or Intel EPT

- Have plenty of memory, don’t plan on using overcommitment

- Make sure your storage can keep up with Domino

- Make sure you are using vSphere and not VI3
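The memory point in particular is easy to sanity-check with a little arithmetic before you build anything. Here is a minimal Python sketch, assuming you know each VM's configured memory; the function name, the VM names, the memory figures and the hypervisor reserve are hypothetical numbers for illustration, not measurements from this project.

```python
# Sketch: verify that a host's VMs fit in physical memory without relying
# on overcommitment. Figures and names below are hypothetical.

def memory_headroom_gb(host_memory_gb, vm_memory_gb, reserve_gb=2.0):
    """Return the GB left after every VM gets its full configured memory.

    reserve_gb is an assumed allowance for the hypervisor itself. A
    negative result means the host would need memory overcommitment.
    """
    return host_memory_gb - reserve_gb - sum(vm_memory_gb.values())


if __name__ == "__main__":
    vms = {"domino-1": 16, "domino-2": 16, "bes": 4}  # configured GB per VM
    headroom = memory_headroom_gb(64, vms)
    if headroom < 0:
        print(f"Overcommitted by {-headroom:.1f} GB")
    else:
        print(f"{headroom:.1f} GB of headroom")
```

If the result comes out negative, either add memory to the host or move a VM elsewhere rather than counting on overcommitment to cover the gap.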

Next up is building the hardware, including the MSA and vSphere servers, and configuring the iSCSI storage and networking. Some additional bonuses of using vSphere are the many improvements to iSCSI, including support for Jumbo Frames, faster initiators and support for multi-pathing. So stay tuned for part 2…

Jan 28 2010

Lessons learned in a power outage

Having experienced several complete data center power outages, I’ve learned some important lessons over the years when it comes to virtualizing your infrastructure. Today I experienced another power outage, and my previous experiences ensured that I was ready for this one, so I thought I would share some tips:

- DNS is the most critical component in your environment; for almost anything to work properly you need a DNS server up first. If you have all your DNS servers virtualized and they are on shared storage, it’s going to be very difficult to bring them up, because everything else that needs to come up first usually relies on DNS. Therefore you should make sure you have at least one DNS server on the local storage of one of your hosts. That way you can get it up early on and not have to wait for your shared storage to come up. If you want to go a step further, keep one as a physical server as well, so you can quickly and easily get DNS up right away.

- Active Directory, DHCP & any other authentication servers are also very important. Having a workstation up and running is very handy so you can centrally connect to hosts and power things back on. If you’re using DHCP for your workstations and servers, you will again want a DHCP server up as soon as possible so you can get them on the network and get going. Not having an Active Directory server available can make Windows servers take a very long time to boot. So again, keep an AD & DHCP server on local storage or on a physical server so you can get them up as soon as possible. AD, DNS & DHCP are critical to a Windows environment, and without them available you’ll find that the rest of your environment is mostly useless.

- Know your ESX command line. If your vCenter Server and other workstations are not available, you’ll need to start VMs using the command line. Even if your DNS server is a VM on local storage, you won’t be able to start it without the vSphere Client. Therefore you’ll have to log into the ESX console and start it manually, and if you don’t know the command to do this, that could be a problem. Keep a cheat sheet by your hosts with the basic commands that you’ll need, like vmware-cmd, to get things up and running using the console.

- Know your host IP addresses. If DNS is not up yet, you won’t be able to connect to your hosts by host name using PuTTY or the vSphere Client. You probably won’t know their IP addresses, and without a DNS server you can’t look them up. Therefore keep a list of your host IP addresses so you can use that to connect to them.

- Know how to rescan storage. Your hosts may come up before your shared storage, and once your shared storage is up you’ll need to rescan from your hosts so they will see it and you can restart the VMs on it. You can do this using the vSphere Client by clicking on Configuration, Storage Adapters, selecting your HBA, clicking the Rescan button and then selecting the option to search for new devices. You can also do this from the command line using the esxcfg-rescan utility.

- Make sure you know where your datacenter keys are if you use an electronic card scanner to open your doors. Most of these systems are located in the datacenter, and if the power goes out your doors are not going to work. There is nothing worse than running around trying to find keys in a crisis to get into the datacenter. And make sure you don’t keep the keys in the datacenter or you’ll have to break down the door to get in. (thanks Tony DiMaggio for reminding me about this one)
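The cheat sheet idea from the tips above can be generated ahead of time rather than written by hand. Below is a minimal Python sketch, assuming the classic ESX service console where `vmware-cmd <path to .vmx> start` powers on a VM and `esxcfg-rescan <adapter>` rescans an HBA. The VM roles, .vmx paths and the adapter name are hypothetical examples, and the ordering rule (local storage first, DNS before AD and DHCP) is just one reading of the tips, so adjust it to your own environment.

```python
# Sketch: build a printable power-on cheat sheet for an ESX service console.
# VM roles, .vmx paths and HBA names are hypothetical examples.

# Lower number = start earlier. DNS first, then AD and DHCP, then the rest.
ROLE_PRIORITY = {"dns": 0, "ad": 1, "dhcp": 1}


def start_commands(vms):
    """Return vmware-cmd start commands, infrastructure roles first."""
    ordered = sorted(vms, key=lambda vm: ROLE_PRIORITY.get(vm["role"], 9))
    return [f'vmware-cmd "{vm["vmx"]}" start' for vm in ordered]


def cheat_sheet(vms, hbas):
    """Return the commands to run, in order, after a full power outage.

    vms  -- list of dicts with keys 'vmx', 'role' and 'local'
            ('local' is True if the VM lives on the host's local storage)
    hbas -- adapter names to rescan once shared storage is back up
    """
    local = [vm for vm in vms if vm["local"]]
    shared = [vm for vm in vms if not vm["local"]]
    lines = start_commands(local)                       # no shared storage needed
    lines += [f"esxcfg-rescan {hba}" for hba in hbas]   # pick up shared LUNs
    lines += start_commands(shared)                     # everything else
    return lines


if __name__ == "__main__":
    vms = [
        {"vmx": "/vmfs/volumes/shared1/mail1/mail1.vmx", "role": "app", "local": False},
        {"vmx": "/vmfs/volumes/local1/dc1/dc1.vmx", "role": "ad", "local": True},
        {"vmx": "/vmfs/volumes/local1/dns1/dns1.vmx", "role": "dns", "local": True},
    ]
    for line in cheat_sheet(vms, ["vmhba1"]):
        print(line)
```

Print the output along with your host IP addresses and keep it next to the hosts, since the machine that generated it may not be reachable during an outage.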

Being prepared is critical in a crisis situation to ensure you can react quickly to get things back up and running. Sometimes it takes a crisis to point out any shortcomings that you may have in your environment, but thinking ahead can save you from big headaches later on.

Jan 25 2010

Coming soon: a new vLaunchpad

I’ve been maintaining the vLaunchpad using Dreamweaver, which has been difficult at times, so I thought I would explore other options. I’ve resisted using WordPress as I had for my main site because the page layout is kind of unique and not easily reproduced in WordPress. Well, after much theme hacking I think I have it ported over to WordPress pretty well. I’ve done extensive CSS modifications to make sure I could fit as much information as possible in one area and not have to resort to lots of scrolling or multiple pages. It’s not done yet but it’s getting close; I still need to add all the blog links and do some other work on it. I’ve also moved it to the clouds, which is GoDaddy’s new hosted grid computing offering. So let me know what you think. I’m open to all your suggestions, so tell me what you like, what you don’t like and what you want to see added to it. One thing you’ll notice is that I added all of Hany Michael’s great vDiagrams to the sidebar for easy access.

So go check out the new version of the vLaunchpad and let me know what you think, just remember it’s not done yet and I have lots of changes to make to it. Thanks to the Netherlands gang (Duncan, Eric & Gabe) for their early feedback that helped me get the design right.