VMware publishes a list of all server hardware that is supported with vSphere, including servers, I/O adapters, and storage and SAN devices. This list is continually updated and is most commonly referred to as the Hardware Compatibility List (HCL), although VMware renamed it a while back to the VMware Compatibility Guide. The guide used to be published only as PDF files that you had to read through to see whether your hardware was listed, but it is now available as an interactive web page that you can search and filter. The guide lists hardware that is supported by vSphere, but if hardware is not listed, that does not mean it will not work with vSphere. In many cases unlisted hardware will still work, but because it is not listed, VMware may not support you if you have problems with it. Servers and storage devices are two common areas where hardware works with vSphere despite not being listed in the guide. I/O devices like network adapters and storage controllers, though, are less likely to work if they are not listed, because they rely on drivers loaded in the VMkernel. If your device’s driver is not one of the limited set loaded in the VMkernel, then your device is not going to work.

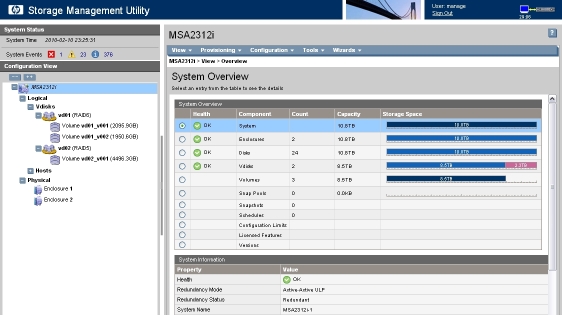

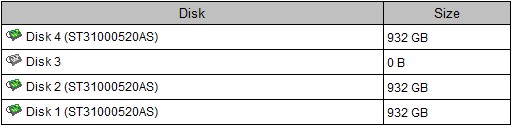

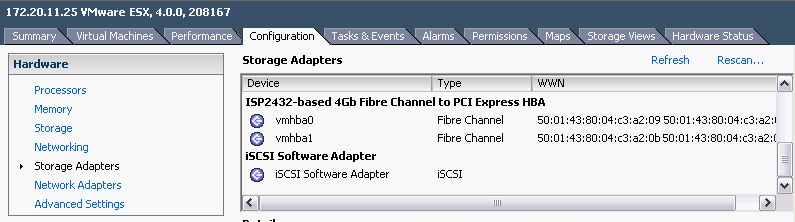

I don’t go through the guide that often, as I assume that new mainstream hardware from large vendors like HP will always work and be supported. Last week, however, that assumption bit me. We had just received some new hardware from HP, including a DL385 G6 server and an MSA G2 iSCSI storage array. Because of the high CPU and I/O demands of the applications running on that server, I wanted to use hardware iSCSI initiators with TCP/IP Offload Engines (TOE), so I initially ordered the NC380T, the only TOE card HP had available. After we placed the order, however, we were informed that the card was no longer available and had been replaced by the newer NC382T. After receiving everything, assembling it, and loading ESX, I found that the NC382T was not listed under Storage Adapters in the vSphere Client, which is where TOE cards should appear, since vSphere does not treat them as network adapters. Only my SATA adapter, the P410 Smart Array controller for local storage, the Fibre Channel adapters, and the iSCSI software initiator were showing up under Storage Adapters.

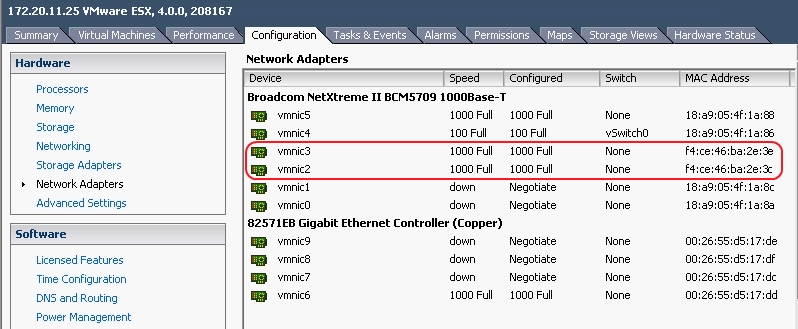

The NC382T was showing up under Network Adapters instead and could not be used as a hardware initiator.

So the new TOE card from HP that we had bought to use as a hardware initiator could not be used for that purpose. After discovering this, I checked VMware’s hardware guide to see which HP iSCSI adapters were listed. Much to my surprise, only one HP model was listed, and after looking up that adapter on HP’s website I found that it was for blade systems only and was therefore of no use for my server.

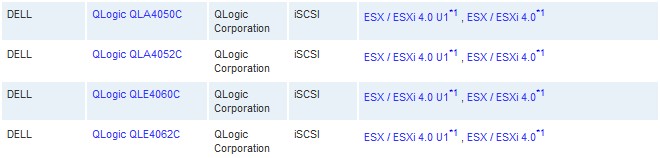

Many of HP’s storage adapters are OEM versions of cards made by QLogic and Emulex. Most of the other vendors listed in the guide for iSCSI adapters had several QLogic adapters rebranded under their own names, but HP did not.

After HP confirmed that the blade adapter was the only iSCSI adapter they OEM’d, I was forced to get the QLogic-branded adapter instead. The most popular card seemed to be the QLE4062C, so we now have one of those on order and may end up just using the NC382T as a regular NIC.

So the moral of this story is to always check the Compatibility Guide, especially when ordering I/O adapters, so you don’t end up with hardware that you can’t use with vSphere. If you want to know more about the guide, such as how hardware gets added to and removed from it and VMware’s support policy, check out this blog post that I wrote on it a while ago.