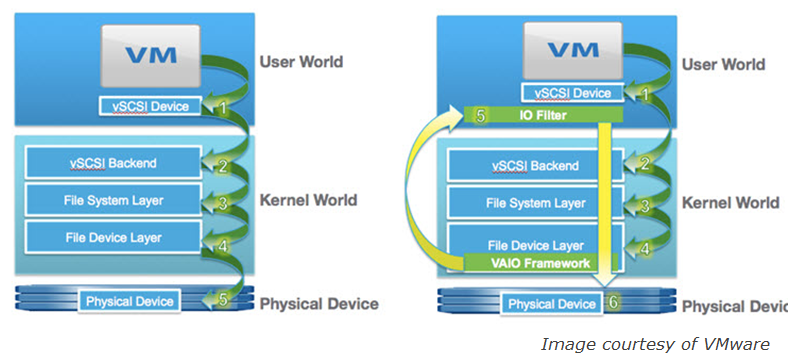

VMware finally introduced native VM-level encryption in vSphere 6.5. It is a welcome addition, but better security always comes at a cost, and with encryption that cost is additional resource overhead that can impact performance. Overall, I think VMware did a very good job integrating encryption into vSphere: they leveraged Storage Policy Based Management (SPBM) and the vSphere APIs for I/O Filtering (VAIO) to integrate encryption seamlessly into the hypervisor. Prior to vSphere 6.5, you didn’t see VAIO used for much more than I/O caching, but encryption is a perfect use case for it because VAIO allows direct integration right in the VM’s I/O stream.

So let’s take a closer look at where the I/O filtering occurs with VAIO. Normally, storage I/O originates at the VM’s virtual SCSI device (User World) and makes its way through the VMkernel before heading to the physical I/O adapter and on to the physical storage device. With VAIO, the filtering is done close to the VM in the User World, while the rest of the VAIO framework resides in the VMkernel. In the figure below, the left side shows the normal I/O path without VAIO and the right side shows the path with VAIO:

When an I/O goes through the filter, an application can take one of several actions on it: fail, pass, complete, or defer. The action taken depends on the application’s use case. A replication application may defer I/O to another device, while a caching application that already has a read request cached would complete the request instead of sending it on to the storage device. With encryption, the filter would presumably defer the I/O to the encryption engine so it can be encrypted before it is written to its final destination storage device.
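
To make the four filter actions concrete, here is a conceptual Python model of how a caching filter and an encryption filter might choose an action per I/O. This is only an illustration under stated assumptions, not VMware’s VAIO interface: `FilterAction`, `IORequest`, `caching_filter`, and `encryption_filter` are hypothetical names, and the encryption filter’s behavior follows the article’s own “presumably defer” reading.

```python
from dataclasses import dataclass
from enum import Enum


class FilterAction(Enum):
    """The per-I/O outcomes the article lists for a VAIO filter (hypothetical model)."""
    FAIL = "fail"          # return an error to the VM
    PASS = "pass"          # send the I/O on down the stack unchanged
    COMPLETE = "complete"  # satisfy the I/O inside the filter itself
    DEFER = "defer"        # hold the I/O for asynchronous work first


@dataclass
class IORequest:
    op: str   # "read" or "write"
    lba: int  # logical block address of the request


def caching_filter(io: IORequest, cache: dict[int, bytes]) -> FilterAction:
    """A read that is already cached is completed without touching storage."""
    if io.op == "read" and io.lba in cache:
        return FilterAction.COMPLETE
    return FilterAction.PASS


def encryption_filter(io: IORequest) -> FilterAction:
    """Writes are deferred so they can be encrypted before reaching disk.

    Reads are passed through here; decrypting the data that comes back
    would happen on the completion path, which this sketch does not model.
    """
    if io.op not in ("read", "write"):
        return FilterAction.FAIL  # unknown operation: reject it
    return FilterAction.DEFER if io.op == "write" else FilterAction.PASS


cache = {42: b"cached block"}
print(caching_filter(IORequest("read", 42), cache))  # FilterAction.COMPLETE
print(encryption_filter(IORequest("write", 42)))     # FilterAction.DEFER
```

The point of the model is that each filter makes its decision per I/O, right in the VM’s I/O stream, which is what lets encryption sit transparently in the path.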

So there are definitely a few more steps before encrypted data is written to disk. How does that impact performance? VMware did some testing and published a paper on the performance impact of using VM encryption. Encryption is mostly CPU intensive because of the complex math involved in encrypting data. The type of storage the I/O is written to plays a role as well, but not in the way you might think: conventional spinning disk actually shows less performance impact from encryption than faster disk types like SSDs and NVMe. The reason is that faster storage moves more data per second, so the CPU has to work harder to keep up with the higher I/O throughput.
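
A rough back-of-the-envelope model shows why faster storage means more CPU work. The sketch below assumes a fixed CPU cost per byte encrypted, so the cores kept busy by encryption scale linearly with throughput. The per-core encryption rate and the device throughputs are hypothetical round numbers chosen for illustration, not figures from VMware’s paper, and `encryption_cores` is a hypothetical helper.

```python
def encryption_cores(throughput_mb_s: float, per_core_mb_s: float) -> float:
    """Estimate how many CPU cores encryption alone would keep busy.

    Assumes a fixed CPU cost per byte encrypted, so the work scales
    linearly with the bytes per second the storage can move.
    """
    if per_core_mb_s <= 0:
        raise ValueError("per_core_mb_s must be positive")
    return throughput_mb_s / per_core_mb_s


# Hypothetical figures for illustration only, not measurements from the paper.
PER_CORE_MB_S = 1000.0  # assumed encryption rate of a single core, in MB/s
devices = {"spinning disk": 200.0, "SSD": 500.0, "NVMe": 3000.0}  # assumed MB/s

for name, mb_s in devices.items():
    print(f"{name}: {encryption_cores(mb_s, PER_CORE_MB_S):.1f} cores")
```

Under these assumptions the spinning disk needs a fraction of a core while the NVMe device keeps three cores busy. Halving `PER_CORE_MB_S` (slower CPUs) doubles every estimate, which is the same trade-off the conclusion describes.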

The configuration VMware tested ran on Dell PowerEdge R720 servers with two 8-core CPUs and 128GB of memory, using both Intel SSD (36K IOPS write/75K IOPS read) and Samsung NVMe (120K IOPS write/750K IOPS read) storage. Testing was done with Iometer using both sequential and random workloads. Below is a summary of the results:

- 512KB sequential write results for SSD – little impact on storage throughput and latency, significant impact on CPU

- 512KB sequential read results for SSD – little impact on storage throughput and latency, significant impact on CPU

- 4KB random write results for SSD – little impact on storage throughput and latency, medium impact on CPU

- 4KB random read results for SSD – little impact on storage throughput and latency, medium impact on CPU

- 512KB sequential write results for NVMe – significant impact on storage throughput and latency, significant impact on CPU

- 512KB sequential read results for NVMe – significant impact on storage throughput and latency, significant impact on CPU

- 4KB random write results for NVMe – significant impact on storage throughput and latency, medium impact on CPU

- 4KB random read results for NVMe – significant impact on storage throughput and latency, medium impact on CPU

As you can see, there isn’t much impact on SSD throughput and latency, but with the more typical 4KB workloads the CPU overhead is moderate (30-40%), with slightly more overhead on reads than on writes. With NVMe storage there is a large impact on storage throughput and latency overall (60-70%) and a moderate CPU overhead (50%) with 4KB random workloads. The results varied a little based on the number of workers (vCPUs) used.
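
Converting the drives’ rated IOPS into bytes per second helps explain why NVMe is hit so much harder. A minimal Python sketch, assuming 4KB means 4,096 bytes and MB means 10^6 bytes; the IOPS values are the ratings quoted for the test configuration, not throughput measured under encryption, and `iops_to_mb_s` is a hypothetical helper name.

```python
def iops_to_mb_s(iops: int, io_size_bytes: int) -> float:
    """Convert an IOPS rating into throughput in MB/s (1 MB = 10**6 bytes)."""
    return iops * io_size_bytes / 1_000_000


IO_SIZE = 4096  # assumed size of a 4KB I/O, in bytes

# Rated IOPS from the test configuration (vendor ratings, not measurements).
ratings = {
    "Intel SSD write": 36_000,
    "Intel SSD read": 75_000,
    "Samsung NVMe write": 120_000,
    "Samsung NVMe read": 750_000,
}

for device, iops in ratings.items():
    print(f"{device}: {iops_to_mb_s(iops, IO_SIZE):,.0f} MB/s")
```

At 4KB, the NVMe read rating works out to ten times as many bytes per second as the SSD read rating, and every one of those bytes has to pass through the encryption engine.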

They also tested with vSAN using a hybrid configuration consisting of one SSD and four 10K RPM drives. Below is a summary of those results:

- 512KB sequential read results for vSAN – slight-small impact on storage throughput and latency, small-medium impact on CPU

- 512KB sequential write results for vSAN – slight-small impact on storage throughput and latency, small-medium impact on CPU

- 4KB sequential read results for vSAN – slight-small impact on storage throughput and latency, slight impact on CPU

- 4KB sequential write results for vSAN – slight-small impact on storage throughput and latency, small-medium impact on CPU

The vSAN results varied somewhat based on the number of workers used: with fewer workers (one) there was only a slight impact, and as more workers were added there was more impact on throughput, latency, and CPU overhead. Overall, though, the impact was a more reasonable 10-20%.
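
The percentages in these results are easiest to read as relative changes against an unencrypted baseline, although the summary does not say so explicitly (CPU overhead could also be expressed in absolute percentage points). Assuming the relative reading, the sketch below shows the calculation; the baseline and encrypted values are hypothetical and do not come from VMware’s paper, and `percent_change` is a hypothetical helper.

```python
def percent_change(baseline: float, encrypted: float) -> float:
    """Relative change from the unencrypted baseline, in percent.

    Negative means the encrypted run was lower (e.g. lost throughput);
    positive means it was higher (e.g. more CPU used).
    """
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (encrypted - baseline) / baseline * 100.0


# Hypothetical measurements for illustration only.
throughput = percent_change(baseline=1000.0, encrypted=850.0)  # MB/s
cpu = percent_change(baseline=40.0, encrypted=46.0)            # % utilization

print(f"throughput change: {throughput:+.0f}%")
print(f"CPU change: {cpu:+.0f}%")
```

Reporting the relative change keeps results comparable across tests whose absolute baselines differ, such as the SSD, NVMe, and vSAN configurations.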

Your mileage will of course vary based on many factors, such as your workload characteristics and hardware configuration, but overall, the faster your storage and the slower and fewer your CPUs, the larger the performance penalty you can expect to encounter. Also remember that you don’t have to encrypt your entire environment: you can pick and choose which VMs to encrypt using storage policies, which should lessen the impact of encryption. If you need encryption and the extra security it provides, the overhead is simply the price you pay; how you use it is up to you. With whole VMs capable of slipping out of your data center over a wire or in someone’s pocket, encryption is invaluable protection for your sensitive data. Below are some resources for more information on VM encryption in vSphere 6.5:

- What’s New in vSphere 6.5: Security (VMware vSphere Blog)

- vSpeaking Podcast Episode 29: vSphere 6.5 Security (VMware Virtually Speaking Podcast)

- VMworld 2016 USA INF8856 vSphere Encryption Deep Dive Technology Preview (VMworld TV)

- VMworld 2016 USA INF8850 vSphere Platform Security (VMworld TV)

- The difference between VM Encryption in vSphere 6.5 and vSAN encryption (Yellow Bricks)