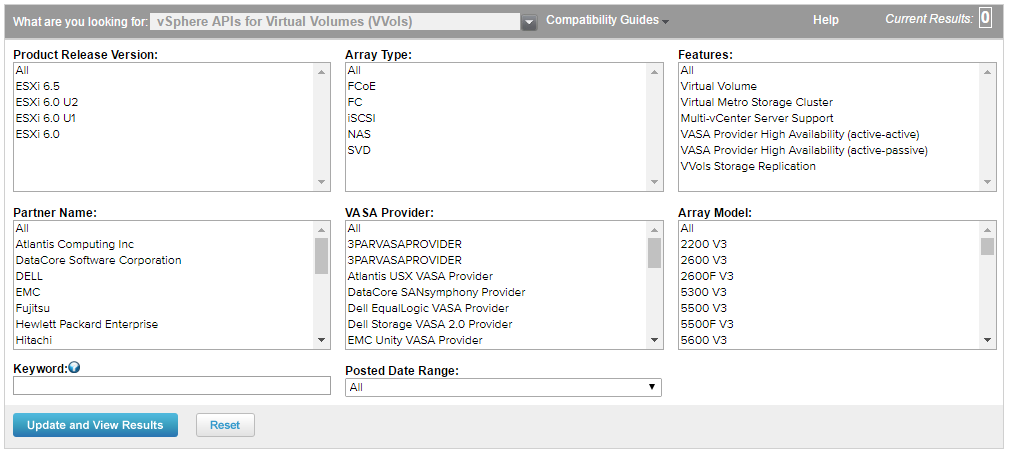

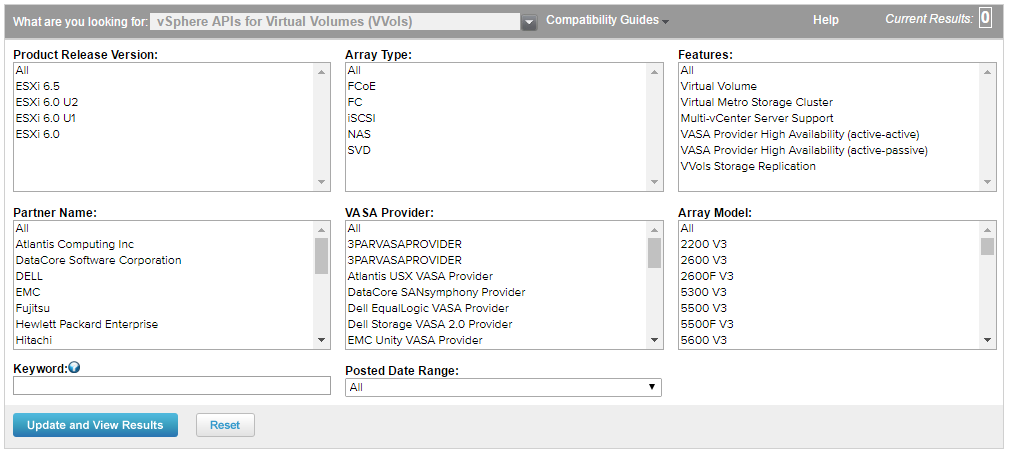

VMware’s new external storage architecture, Virtual Volumes (VVols), turned 2 years old this month; it launched as part of vSphere 6.0. VMware spent over 6 years developing the new VASA specification for VVols, and it’s up to storage vendors to set their own development pace for supporting it. In this post I’ll provide an update on how each storage vendor is positioned to support VVols. This is based entirely on what is published in the VVols HCL (see the table below) and some research I have done. It will not cover VVol capabilities or scalability, such as what each array can do with VVols and how many VVols it can support; those are up to each vendor to define.

Let’s first take a look in detail at the categories listed in the table below and explain them a bit further:

Vendor

On day 1 of the vSphere 6.0 launch there were only 4 vendors that supported VVols. Today 17 vendors support them: almost all of the bigger vendors, like IBM, HDS, HPE, EMC and NetApp, as well as a number of smaller vendors such as SANBlaze, Primary Data, Fujitsu, NEC and Atlantis. Noticeably absent is Pure Storage, which is odd as they demoed VVols at Tech Field Day back in 2014. You might also note that NAS vendors tend to be less interested in developing support for VVols. Because VMs on NAS are written as files rather than stored inside LUNs, a NAS array can already see individual VMs and interact with them using vendor-developed tools. For these vendors VVols deliver roughly the same outcome for a lot of extra development work, which makes them less appealing.

Array Model

Each array family must be certified for VVols, and it’s up to each vendor to decide whether and how to do that. EMC, for example, chose to do it through Unity instead of directly within the storage array. Some vendors, such as DataCore, SANBlaze and Primary Data, sell storage virtualization products that sit in front of primary storage arrays of any brand and present aggregated storage to ESXi hosts. These vendors build VVols into their software, so they can support VVols even if the back-end storage array does not. The VVol HCL lists exactly which array models each vendor supports and the firmware required for VVols support.

Array Type (Protocols)

Again, it is up to each vendor to decide which protocols they will support with VVols on each array model. VVols itself is protocol agnostic and works with FC, FCoE, iSCSI, NFS and SVD. Some vendors support only block protocols, others only NAS, and some support all protocols. The VVol HCL lists which protocols each vendor supports on its VVols-capable arrays. You’ll see SVD listed as an array type; that stands for “Storage Virtualization Device”. The only 2 vendors listed for that array type are IBM (SVC) and NetApp (ONTAP).

vSphere Version

This lists the versions of vSphere on which a storage array is supported with VVols; the options include all the update versions of 6.0 (U1-U3) and 6.5. The 6.0 versions are basically similar to each other, and all of them use the VASA 2.0 specification. vSphere 6.5 introduces VASA 3.0, the new VASA specification, which adds support for VVol replication. However, an array can be certified on vSphere 6.5 and still support only VASA 2.0. Supporting 6.5 does not automatically indicate VVol replication support; that support is specifically noted in the Features field in the HCL. Right now only 9 vendors provide VVol support on vSphere 6.5.
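To make that distinction concrete, here is a minimal Python sketch. The `HclEntry` class, the example vendor names and the `"Replication"` feature label are all hypothetical stand-ins for an HCL row, not VMware’s actual HCL schema; the point is only that a 6.5 certification and replication support have to be checked separately.

```python
from dataclasses import dataclass, field


@dataclass
class HclEntry:
    """Hypothetical, simplified model of one VVol HCL row (not VMware's schema)."""
    vendor: str
    vsphere_versions: set[str]
    # Hypothetical label set standing in for the HCL "Features" field.
    features: set[str] = field(default_factory=set)


def supports_vvol_replication(entry: HclEntry) -> bool:
    # Replication needs VASA 3.0, which arrives with vSphere 6.5, but a 6.5
    # certification alone is not enough: the array may still only support
    # VASA 2.0, so the Features field must list replication explicitly.
    return "6.5" in entry.vsphere_versions and "Replication" in entry.features


certified_only = HclEntry("Example Vendor A", {"6.0", "6.5"})
with_replication = HclEntry("Example Vendor B", {"6.0", "6.5"}, {"Replication"})

print(supports_vvol_replication(certified_only))    # False
print(supports_vvol_replication(with_replication))  # True
```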

VASA Provider Type

This one isn’t explicitly listed in the HCL; I had to do my own research to determine it. The VASA Provider component can be deployed either internally, embedded in the array controllers/firmware, or externally, as a pre-built VM appliance or as software installed on a VM or physical server. It is up to each vendor to decide how to do this. The internal method has its advantages: it is easier to deploy and manage, and it is highly available. The external method, however, tends to be easier for vendors to develop, as they don’t have to modify array firmware or consume array resources and can simply bolt it on as an external component. The only hardware (not VSA/SVD) storage vendors that deploy it internally are HPE, SolidFire, NexGen and Nimble Storage. Some of the storage virtualization vendors, like EMC (Unity) and Primary Data, build it into their software, so it’s considered internal.

VASA Provider HA

The VASA Provider is a critical component in the VVols architecture; if it becomes unavailable you can’t perform certain VVol operations, such as powering on a VM. As a result you want to ensure that it is highly available. With internal VASA Providers this matters less, as the array itself would have to go down to take the VASA Provider with it, and if that happens you have bigger problems. External VASA Providers are more critical to protect, as they are vulnerable to things like a host crash, network issues or someone powering them off. There are different ways to provide HA for the VASA Provider, and it’s up to each vendor to decide how to do it: you can rely on vSphere HA to restart it in the event of a host crash, or a vendor can build its own solution to protect it.

The VASA Provider HA feature in the HCL only indicates whether a vendor has created and certified an active-active or active-passive HA solution using multiple VASA Providers, which only 3 vendors have done (NexGen, SANBlaze and IBM). Other vendors may provide HA for the VASA Provider in different ways, so check with your vendor to see how they implement it and understand what the risks are.

Multi vCenter Servers

Support for multiple vCenter Servers registering and connecting to a storage array is indicated in the Features column of the VVol HCL. Right now only 5 vendors are listed with that support. Again, check with your vendor to see where they are with this.

Array Based Replication

Support for array-based replication of VVols is new in vSphere 6.5 as part of the VASA 3.0 specification. On day 1 of the vSphere 6.5 launch not one vendor supported it. A bit later Nimble Storage became the first to support it, and HPE recently announced support for 3PAR, which should show up on the HCL any day now. Having seen first-hand the amount of engineering effort it takes to support VVols replication, I can understand why so few vendors support it today, but I expect you will see more show up over time.

| Vendor | Array Models | Protocols | vSphere Version | VASA Provider Type | VASA Provider HA | Multi vCenter Servers | Array Based Replication |
|---|---|---|---|---|---|---|---|
| Atlantis Computing | USX | NAS | 6.0 | Internal | No | No | No |
| DataCore Software | SANsymphony | FC, iSCSI | 6.0 | External (physical or VM) | No | No | No |
| Dell | EqualLogic | iSCSI | 6.0 | External (VSM VM appliance) | No | No | No |
| EMC | VMAX, Unity | FC (VMAX, Unity), iSCSI (Unity), NAS (Unity) | 6.0 (VMAX, Unity), 6.5 (Unity) | Internal (Unity), External (VMAX) (VM appliance) | No | No | No |
| Fujitsu | Eternus | FC, FCoE, iSCSI | 6.0, 6.5 | External (physical or VM) | No | Yes | No |
| Hewlett Packard Enterprise | 3PAR StoreServ, XP7 | FC, iSCSI | 6.0, 6.5 | Internal (3PAR), External (XP7) | N/A (3PAR), No (XP7) | Yes (3PAR), No (XP7) | Yes (3PAR), No (XP7) |
| Hitachi Data Systems | VSP | FC, iSCSI, NAS | 6.0, 6.5 | External (VM appliance) | No | No | No |
| Huawei Technologies | OceanStor | FC, iSCSI | 6.0, 6.5 | External (VM appliance) | No | No | No |
| IBM | XIV, SVC | FC, iSCSI, SVD | 6.0 (XIV, SVC), 6.5 (XIV) | External (IBM Storage Integration Server) | Yes (active-passive) | Yes | No |
| NEC | iStorage | FC, iSCSI | 6.0, 6.5 | External (Windows app) | No | No | No |
| NetApp | FAS | FC, iSCSI, NAS | 6.0 | External (VM appliance) | No | No | No |
| NexGen Storage/Pivot3 | N5 | iSCSI | 6.0 | Internal | Yes (active-active) | No | No |
| Nimble Storage | CS, AF | FC, iSCSI | 6.0, 6.5 | Internal | N/A | Yes | Yes |
| Primary Data | DataSphere | NAS | 6.0 | Internal | No | No | No |
| SANBlaze Technology | VirtuaLUN | FC, iSCSI | 6.0 | External (Linux app) | Yes (active-active) | Yes | No |
| SolidFire | SF | iSCSI | 6.0 | Internal | No | No | No |
| Tintri | T | NAS | 6.0, 6.5 | External | No | No | No |
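Because the table is really a small dataset, it can also be queried directly. The Python sketch below is only an illustration: `VendorRow`, `row` and `vendors_where` are hypothetical names, and the data is my transcription of the table rather than a live HCL feed, so re-check the HCL itself before relying on it. Per-model differences are collapsed, so a vendor counts as “Yes” if any of its listed models does. It recomputes the counts quoted earlier in this post.

```python
from typing import NamedTuple


class VendorRow(NamedTuple):
    vendor: str
    vsphere: frozenset   # vSphere versions supported by any listed model
    provider_ha: bool    # certified multi-provider VASA Provider HA
    multi_vcenter: bool  # multiple vCenter Servers supported
    replication: bool    # array-based VVol replication


def row(vendor, versions, ha=False, multi_vc=False, repl=False):
    """Build a VendorRow; unspecified features default to "No"."""
    return VendorRow(vendor, frozenset(versions), ha, multi_vc, repl)


# Transcribed from the table above. A vendor counts as "Yes" if any of its
# listed models is "Yes"; "N/A" is treated as "No".
HCL_SUMMARY = [
    row("Atlantis Computing", {"6.0"}),
    row("DataCore Software", {"6.0"}),
    row("Dell", {"6.0"}),
    row("EMC", {"6.0", "6.5"}),
    row("Fujitsu", {"6.0", "6.5"}, multi_vc=True),
    row("Hewlett Packard Enterprise", {"6.0", "6.5"}, multi_vc=True, repl=True),
    row("Hitachi Data Systems", {"6.0", "6.5"}),
    row("Huawei Technologies", {"6.0", "6.5"}),
    row("IBM", {"6.0", "6.5"}, ha=True, multi_vc=True),
    row("NEC", {"6.0", "6.5"}),
    row("NetApp", {"6.0"}),
    row("NexGen Storage/Pivot3", {"6.0"}, ha=True),
    row("Nimble Storage", {"6.0", "6.5"}, multi_vc=True, repl=True),
    row("Primary Data", {"6.0"}),
    row("SANBlaze Technology", {"6.0"}, ha=True, multi_vc=True),
    row("SolidFire", {"6.0"}),
    row("Tintri", {"6.0", "6.5"}),
]


def vendors_where(predicate):
    """Return the names of vendors whose row satisfies predicate, in table order."""
    return [r.vendor for r in HCL_SUMMARY if predicate(r)]


print(len(HCL_SUMMARY))                                   # 17
print(len(vendors_where(lambda r: "6.5" in r.vsphere)))  # 9
print(len(vendors_where(lambda r: r.provider_ha)))       # 3
print(len(vendors_where(lambda r: r.multi_vcenter)))     # 5
print(vendors_where(lambda r: r.replication))
# ['Hewlett Packard Enterprise', 'Nimble Storage']
```

Keeping the rows as plain immutable tuples and the questions as predicates means a new HCL column only needs one extra field, and any question about the table becomes a one-line lambda.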