Just a reminder that beginning in May, VMware will remove older versions of VI3 from its download site and only allow you to download the very latest versions. So if you don’t already have them downloaded, get them while you can. I have links to all the download locations for the older versions here. Sometimes it’s nice to have older versions to play around with.

Also, VI3 will switch to Extended support in May of this year, just shy of its 4-year anniversary. Here’s VMware’s definition of it:

New hardware platforms are no longer supported, new guest OS updates may or may not be applied, and bug fixes are limited to critical issues. Critical bugs are deviations from specified product functionality that cause data corruption, data loss, system crash, or significant customer application down time and there is no work-around that can be implemented.

VI3 has been rock solid since its release, and the switch to extended support is no big deal for those still using it. Extended support lasts for another 3 years; you can read the details here.

Here’s the official announcement:

Announcement: End of availability for VI

Effective May 2010, VMware will remove all but the most recent versions of our Virtual Infrastructure product binaries from our download web site. As of this date, these Virtual Infrastructure products will have reached end of general support according to the published support policy. The downloadable products removed will include both ESX and Virtual Center releases. For reference, the VMware VI3 support policy can be viewed at this location: www.vmware.com/support/policies/lifecycle/vi.

By removing older releases, VMware is establishing a long-term sustainable product maintenance line for older ESX product releases which have transitioned into the Extended Support life cycle phase. This enables us to baseline all patches and critical fixes against these baselines. This translates to faster customer turn-around and greater product stability during the extended support phase.

Virtual Infrastructure products being removed by May 2010:

- ESX 3.5 versions 3.5 GA, Update 1, Update 2, Update 3 and Update 4

- ESX 3.0 versions 3.0 GA, 3.0.1, 3.0.2 and 3.0.3

- ESX 2.x versions 2.5.0 GA, 2.5.1, 2.5.2, 2.1.3, 2.5.3, 2.0.2, 2.1.2 and 2.5.4

- Virtual Center 2.5 GA, 2.5 Update 1, 2.5 Update 2, 2.5 Update 3, 2.5 Update 4 and 2.5 Update 5

- Virtual Center 2.0

Virtual Infrastructure products remaining for Extended Support:

These versions will be the baseline for ongoing support during the Extended Support phase. All subsequent patches issued will be based solely upon the releases below.

- ESX 3.5 Update 5 will remain throughout the duration of Extended Support

- ESX 3.0.3 Update 1 will remain throughout the duration of Extended Support

- Virtual Center 2.5 Update 6 expected in early 2010

Customers may stay at a prior version, however VMware’s patch release program during Extended Support will be continued with the condition that all subsequent patches will be based on the latest baseline. In some cases where there are release dependencies, prior update content may be included with patches.

http://www.vmware.com/support/policies/lifecycle/vi/faq.html


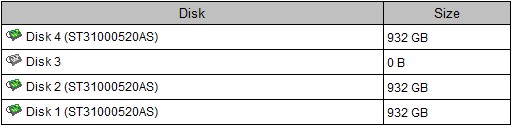

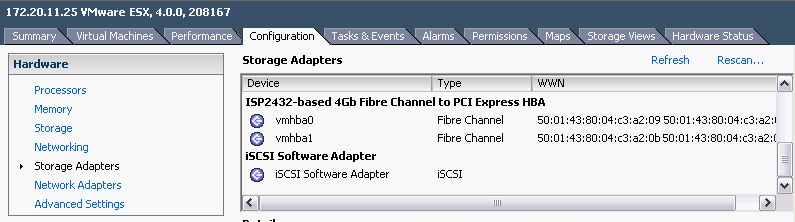

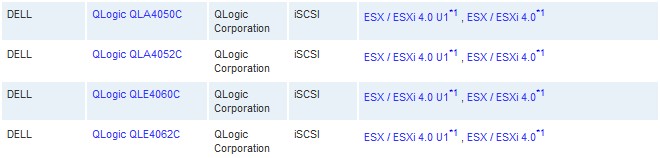

So this new TOE card from HP that we bought to use as a hardware initiator could not be used for that purpose. After discovering this, I checked VMware’s hardware guide to see what HP iSCSI adapters were listed. Much to my surprise there was only

Now, HP OEMs many of its storage adapters, as many of them are made by QLogic and Emulex. Most of the other vendors listed in the guide for iSCSI adapters had many QLogic adapters rebranded under their own names, but not HP.

After confirming with HP that the blade adapter was the only one they OEM’d, I was forced to get the QLogic-branded adapter instead. The card that seemed to be the most popular was the