Storage is the slowest and most complex host resource, and when bottlenecks occur, they can bring your virtual machines (VMs) to a crawl. In a VMware environment, Storage I/O Control provides much-needed control of storage I/O and should be used to ensure that the performance of your critical VMs is not affected by VMs on other hosts when there is contention for I/O resources.

Storage I/O Control was introduced in vSphere 4.1, extending the storage resource controls built into vSphere from individual hosts to shared data stores. In vSphere 5, Storage I/O Control has been enhanced with support for NFS data stores and clusterwide I/O shares.

Prior to vSphere 4.1, storage resource controls could be set on each host at the VM level using shares that provided priority access to storage resources. While this worked for VMs on a single host, it is common for many hosts to share data stores. Because each host controlled VM access to disk resources independently, VMs on one host could limit the disk resources available to VMs on other hosts.

The following example illustrates the problem:

- Host A has a number of noncritical VMs on Data Store 1, with disk shares set to Normal

- Host B runs a critical SQL Server VM that is also located on Data Store 1, with disk shares set to High

- A noncritical VM on Host A starts generating intense disk I/O due to a job that was kicked off; since Host A has no resource contention, the VM is given all the storage I/O resources it needs

- Data Store 1 starts experiencing a lot of demand for I/O resources from the VM on Host A

- Storage performance for the critical SQL VM on Host B starts to suffer as a result
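The scenario above can be put into numbers with a small sketch. It is a simplified model, not VMware's scheduler: it assumes I/O is split in proportion to shares among the VMs that are actively contending, and it uses the standard vSphere preset values of 500, 1000 and 2000 for Low, Normal and High. The VM names are hypothetical.

```python
# Simplified model of share-based I/O allocation (illustrative only).
# Share values are the vSphere presets for Low, Normal and High.
SHARES = {"Low": 500, "Normal": 1000, "High": 2000}


def proportional_split(vms):
    """Return each VM's fraction of I/O, proportional to its share value."""
    total = sum(SHARES[level] for _, level in vms)
    return {name: SHARES[level] / total for name, level in vms}


# Hypothetical VMs that are actively issuing I/O to Data Store 1.
host_a = [("batch-vm", "Normal")]  # noncritical VM running the heavy job
host_b = [("sql-vm", "High")]      # critical SQL Server VM

# Host-level control: each host only compares the VMs it runs itself,
# so each VM looks entitled to everything its host can send.
per_host = {**proportional_split(host_a), **proportional_split(host_b)}

# Data-store-level control: all VMs on the data store are compared together.
datastore_wide = proportional_split(host_a + host_b)

print(per_host)        # both VMs get a fraction of 1.0 on their own host
print(datastore_wide)  # sql-vm is entitled to twice the share of batch-vm
```

In the per-host view, the High setting on the SQL VM never comes into play, because no other VM on Host B competes with it. Only when both VMs are compared against each other does the 2:1 ratio take effect.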

How Storage I/O Control works

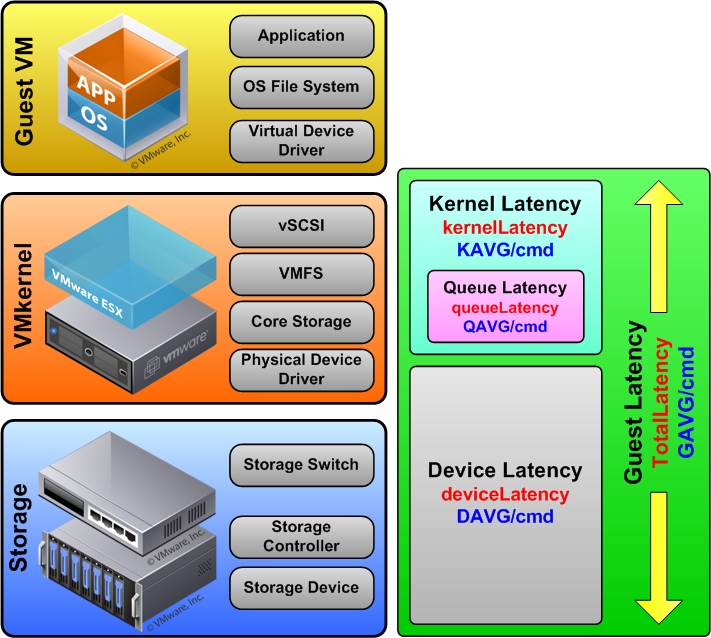

Storage I/O Control solves this problem by enforcing storage resource controls at the data store level, so all hosts and VMs in a cluster that access a data store are taken into account when prioritizing VM access to storage resources. As a result, a VM with Low or Normal shares will be throttled if higher-priority VMs on other hosts need more storage resources. Storage I/O Control is enabled per data store. Once enabled, it monitors latency in the storage subsystem and compares it against a congestion threshold. When latency reaches the threshold, Storage I/O Control begins enforcing storage priorities on each host accessing the data store to ensure that VMs with higher priority have the resources they need.
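The threshold behavior described above can be sketched as a small function. This is a minimal illustration under stated assumptions, not the actual Storage I/O Control algorithm: the function name `grant_iops`, the IOPS figures and the 30 ms threshold are all hypothetical values chosen for the example, and the model simply caps each VM at its share-proportional slice without redistributing unused capacity.

```python
def grant_iops(latency_ms, threshold_ms, demand, shares, capacity):
    """Return the IOPS granted to each VM on one data store.

    Below the congestion threshold every VM gets what it asks for.
    At or above it, each VM is capped at its share-proportional slice
    of the data store's capacity (a deliberately simple model).
    """
    if latency_ms < threshold_ms:
        return dict(demand)  # no congestion, so no throttling
    total_shares = sum(shares.values())
    return {
        vm: min(demand[vm], capacity * shares[vm] / total_shares)
        for vm in demand
    }


# Hypothetical numbers for the two VMs in the example.
demand = {"sql-vm": 4000, "batch-vm": 6000}  # IOPS each VM wants
shares = {"sql-vm": 2000, "batch-vm": 1000}  # High vs. Normal
capacity = 6000                              # assumed data store limit

calm = grant_iops(10, 30, demand, shares, capacity)
congested = grant_iops(35, 30, demand, shares, capacity)

print(calm)       # demand is passed through unchanged
print(congested)  # batch-vm is capped, sql-vm keeps its 4000 IOPS
```

With latency under the threshold, shares are ignored entirely. Once latency crosses it, the batch VM is held to one third of the assumed capacity while the SQL VM's demand is met in full.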

Read the full article at searchvirtualstorage.com…