Many virtual environments lack uniformity and are made up of a diverse mix of hardware and software components. This diversity can include hardware from multiple vendors as well as hypervisors from different vendors. Because budgets play a big role in determining the hardware and software used in a virtual environment, there is often a variety of equipment from different vendors. In other cases, customers want best-of-breed hardware and software, which typically means going to different vendors to get the configuration they desire.

For hardware, it’s not uncommon to see different vendors used across servers, storage and networking, and sometimes different vendors within the same resource group (e.g., storage). For hypervisors, the most commonly used platform is VMware vSphere, but it’s not uncommon to see Microsoft Hyper-V in the same data center, because most customers already use Microsoft for their server OS, which gives them access to Hyper-V. Another common mix for client virtualization is running Citrix XenDesktop on top of VMware vSphere. Whatever the reasons for choosing different vendors to build out a virtual environment, it’s rare to find a data center that uses the same brand of hardware across servers, storage and networking and runs only a single hypervisor platform.

As a result of this melting pot of hardware and hypervisors, managing heterogeneous virtual environments can become extremely difficult, because management tools tend to be specific to one hardware or hypervisor platform. Having so many management tool silos can add complexity, increase administration overhead and raise costs. To help offset that, we’ll cover 5 tips for managing heterogeneous virtual environments more effectively.

1 – Group similar hardware together for maximum effectiveness

If you are going to use a mix of hardware brands and models, you can’t just throw it all together and expect it to work effectively and efficiently. Every hardware platform has its own quirks and nuances, and because virtualization is very picky about physical hardware, you should group similar hardware together whenever possible. For servers this is usually done at the cluster level, so that features like vMotion and Live Migration, which move running VMs from host to host, can rely on CPU compatibility within the cluster. Because AMD and Intel CPUs implement different instruction-set extensions and virtualization features, you cannot move a running VM between hosts with different processor vendors. There are also limitations within the processor families of a single CPU vendor that you need to be careful of. Some of these can be overcome with features like VMware’s Enhanced vMotion Compatibility (EVC), which presents a common CPU feature baseline across hosts of different generations from the same vendor, but it can still cause administration headaches.

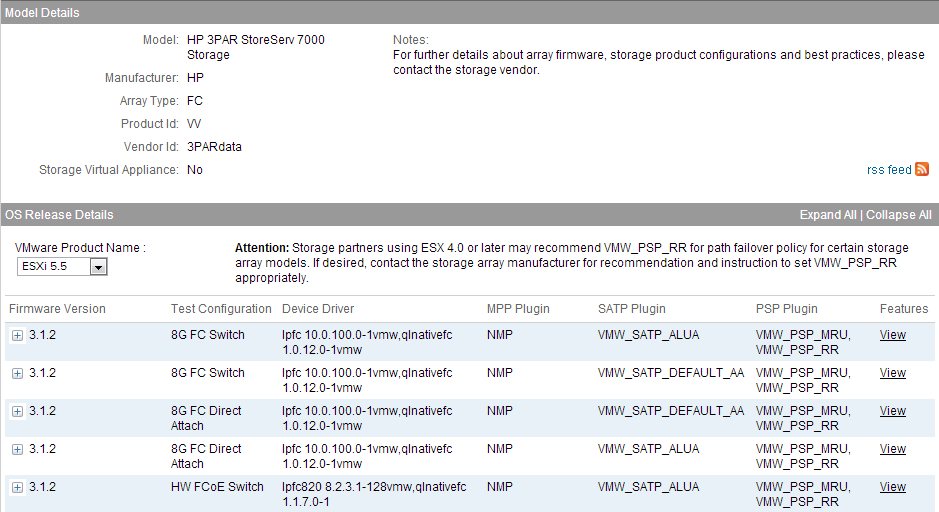

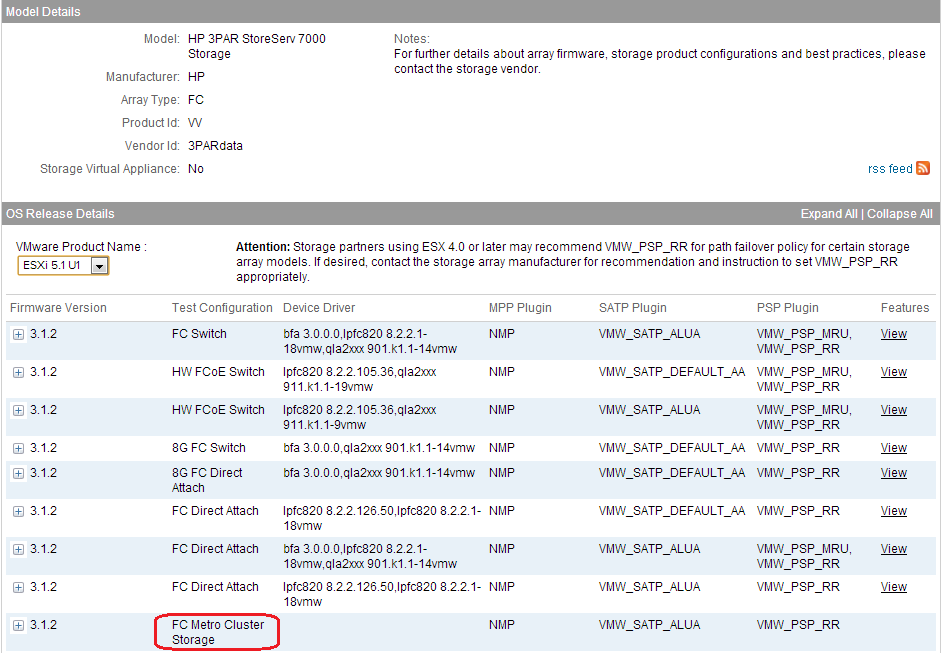
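The grouping rule from this tip can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical host inventory where each host is described only by a CPU vendor and a CPU family label (`Host`, `group_into_clusters` and `can_live_migrate` are illustrative names, not part of any vendor API). It groups hosts into candidate clusters by vendor and family and applies the simplified migration rule described above: never across Intel and AMD, and across families of one vendor only when an EVC-style baseline is in use.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    # Hypothetical inventory record; field names are illustrative only.
    name: str
    cpu_vendor: str   # e.g. "Intel" or "AMD"
    cpu_family: str   # e.g. a processor generation label


def group_into_clusters(hosts):
    """Group hosts that share CPU vendor and family into candidate clusters."""
    clusters = defaultdict(list)
    for host in hosts:
        clusters[(host.cpu_vendor, host.cpu_family)].append(host.name)
    return dict(clusters)


def can_live_migrate(src, dst, evc_baseline=False):
    """Simplified live-migration rule from the text.

    Real hypervisors compare individual CPU feature flags; this sketch only
    models vendor and family. evc_baseline=True stands in (as an assumption)
    for a cluster-wide compatibility baseline such as VMware EVC.
    """
    if src.cpu_vendor != dst.cpu_vendor:
        return False  # running VMs never move between Intel and AMD hosts
    return evc_baseline or src.cpu_family == dst.cpu_family


if __name__ == "__main__":
    inventory = [
        Host("esx01", "Intel", "Gen-A"),
        Host("esx02", "Intel", "Gen-A"),
        Host("esx03", "Intel", "Gen-B"),
        Host("esx04", "AMD", "Gen-X"),
    ]
    for key, members in group_into_clusters(inventory).items():
        print(key, members)
    print(can_live_migrate(inventory[0], inventory[1]))
    print(can_live_migrate(inventory[0], inventory[2], evc_baseline=True))
    print(can_live_migrate(inventory[0], inventory[3], evc_baseline=True))
```

Keying clusters on the (vendor, family) pair mirrors the advice to keep like hardware together; in practice you would pull these attributes from your hypervisor's inventory rather than hard-coding them.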

Shared storage is easier to intermix, as many storage features built into the hypervisor, like thin provisioning and Storage vMotion, work independently of the underlying physical storage hardware. You can generally use arrays from different vendors side by side in a virtual environment, but you should be aware of the differences and limitations between file-based (NAS) and block-based (SAN) arrays. You can use file and block arrays together, but because of protocol and architecture differences you may run into issues with feature support and integration across them. Therefore, you should also try to group similar storage arrays together so you can take advantage of features and integration that may only work within a storage array product family and within a storage protocol. By doing some planning up front and grouping similar hardware together, you can increase efficiency, simplify management and avoid incompatibility problems.

2 – Try to stick to one hypervisor platform for your production environment

I’ve seen surveys that say more than 65% of companies that have deployed virtualization are using multiple hypervisors. That number seems pretty high, and what I question is how those companies are deploying multiple hypervisors. A survey respondent with 100 vSphere hosts and 1 Hyper-V host can say they are running multiple hypervisors, but in reality they are using one primary hypervisor. When you start mixing hypervisors for whatever reason, you run into all sorts of issues that can have a big impact on your virtual environment. The biggest issues tend to be in these areas:

- Training – You have to make sure your staff is continually trained on 2 different hypervisor platforms

- Support – You need to have support contracts in place for 2 different hypervisor platforms

- Interoperability – Conversion tools provide some cross-hypervisor interoperability, but because each hypervisor uses its own virtual disk format (VMDK for vSphere, VHD/VHDX for Hyper-V), moving VMs between them can be cumbersome

- Management – Both VMware & Microsoft have tried to implement management of the other platform into their native management tools but it is very limited and not all that usable

- Costs – You are doubling your costs for training, support, management tools, etc.

As a result, it makes sense to use one hypervisor as the primary platform for most of your production environment. It’s OK to have one-offs and small pockets here and there running a secondary hypervisor, but try to limit them.

3 – Make strategic use of a secondary hypervisor platform

Sometimes it may make sense financially to intermix hypervisor platforms in your data center for specific use cases. Using alternative lower cost or free hypervisor platforms can help increase your use of virtualization while keeping costs down. If you do utilize a mix of hypervisors in your data center do it strategically to avoid complications and some of the issues I mentioned in the previous tip. Here are some suggestions for strategically using multiple hypervisor platforms together:

- Use one primary hypervisor platform for production and a secondary one for your development and test environments. That said, you may want to run the same hypervisor for production and test, so you can catch issues that occur when an application runs on a specific hypervisor before it goes into production.

- Create tiers based on application type and how critical it is. This can be further defined by the level of support that you have for each hypervisor. If you have 24×7 support on one and 9×5 support on another you’ll want to make sure you have all your critical apps running on the hypervisor platform with the best support contract.

- If you have remote offices you might consider having a primary hypervisor platform at your main site and using an alternate hypervisor at your remote sites.

These are just some of the logical ways to divide and conquer with a mix of hypervisors; you may find other approaches that work better for you and give you the benefits of both platforms without the headaches.
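The tiering suggestion above, putting critical apps on the platform with the best support contract, can be expressed as a simple placement rule. The sketch below is purely illustrative: `Platform`, `place_workload`, the platform names and the two-tier split are hypothetical, and support coverage is reduced to hours per week (24×7 = 168, 9×5 = 45). Sending non-critical workloads to the less-supported platform is an added assumption that reflects the primary/secondary split described in this tip, not a rule from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    # Hypothetical description of a hypervisor platform and its support contract.
    name: str
    support_hours_per_week: int  # 24x7 = 168, 9x5 = 45


def place_workload(criticality, platforms):
    """Choose a platform for a workload using a simple two-tier rule.

    Critical workloads go to the platform with the most support coverage.
    As an assumption, everything else goes to the platform with the least
    coverage, keeping the best-supported platform for critical apps.
    """
    ranked = sorted(platforms, key=lambda p: p.support_hours_per_week)
    return ranked[-1].name if criticality == "critical" else ranked[0].name


if __name__ == "__main__":
    platforms = [Platform("Platform A", 168), Platform("Platform B", 45)]
    print(place_workload("critical", platforms))
    print(place_workload("standard", platforms))
```

A real policy would likely weigh more than support hours (licensing, application certification, staff skills), but keeping the rule explicit makes it easy to review and apply consistently.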

4 – Understand the differences and limitations of each platform

Whether you’re using different hardware or different hypervisor platforms, you need to know the capabilities and limitations of each platform, because they can affect availability, performance and interoperability. Each hypervisor platform tends to have its own disk format, so you cannot easily move VMs across hypervisor platforms if needed. Features also tend to come with their own requirements, and even when they are similar across platforms, they are usually proprietary to each hypervisor.

For hardware, CPU compatibility among hosts is a big one, because moving a running VM from one host to another using vMotion or Live Migration requires both hosts to have CPUs from the same manufacturer (Intel or AMD) and, unless a compatibility feature such as EVC is in use, the same architecture (CPU family). For hypervisors, some features are unique to a particular platform, like the power-saving features built into vSphere. Knowing the capabilities and limitations of the hardware and hypervisors you use can help you plan how to use them together more efficiently and avoid compatibility problems.

5 – Leverage tools that can manage your environment as a whole

Managing heterogeneous environments can be quite challenging, as you have to switch between many different management tools that are specific to hardware, applications or a hypervisor platform. This can greatly increase administration overhead, decrease efficiency and limit the effectiveness of monitoring and reporting. When you have management silos in a data center, you lack visibility across the environment as a whole, which creates unique challenges because virtual environments demand unified management. Compounding the problem, native hypervisor tools are designed to manage only their own hypervisor, so you need separate management tools for each platform. Some hypervisor vendors have tried to extend management to other hypervisor platforms, but these efforts are often very limited and designed more to help you migrate from a competing platform. The end result is a management mess that can cause big headaches and fuels the need for management tools that operate at a higher level and bridge the gap between management silos.

SolarWinds delivers management tools that stretch from apps to bare metal and cover every area of your virtual environment. This gives you one management tool that can manage multiple hypervisor platforms and provide end-to-end visibility, from the apps running in VMs to the physical hardware they reside on. Tools like SolarWinds Virtualization Manager deliver integrated VMware and Microsoft Hyper-V capacity planning, performance monitoring, VM sprawl control, configuration management and chargeback automation, all in one affordable product that’s easy to download, deploy and use. Take it even further by adding SolarWinds Storage Manager and Server and Application Monitor, and you have a complete management solution from a single vendor that covers all your bases and creates a melting pot where your management tools come together under a unified framework.