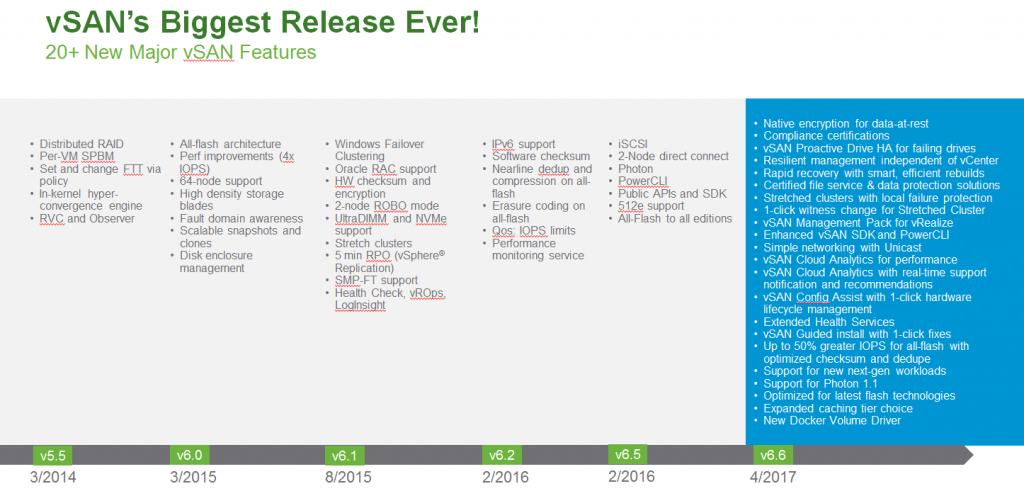

It was back in October that VMware announced vSAN 6.5, and now, just 6 months later, they are announcing the latest version, vSAN 6.6. With this new release vSAN turns 3 years old, and it has come a long way in those 3 years. VMware claims this is the biggest release yet for vSAN, but if you look at what’s new, it’s mainly a combination of a lot of little things rather than big features like those in prior releases (dedupe, compression, iSCSI support, etc.). It is nevertheless an impressive list of enhancements, which should make for an exciting upgrade for customers.

As usual with each new release, VMware has posted customer adoption numbers for vSAN, and as of right now they are claiming 7,000+ customers. Below are their customer counts by release:

- Aug 2015 – vSAN 6.1 – 2,000 customers

- Feb 2016 – vSAN 6.2 – 3,000 customers

- Aug 2016 – vSAN 6.5 – 5,000 customers

- Apr 2017 – vSAN 6.6 – 7,000 customers
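As a rough check on the growth these counts imply, here is a minimal Python sketch that turns the list into customers per day for each interval. The list only gives a month and year, so the sketch assumes the 1st of each month; the names `MILESTONES` and `customers_per_day` are made up for illustration.

```python
from datetime import date

# Counts copied from the list above. Only month and year are given, so the
# 1st of each month is an assumption made for this sketch.
MILESTONES = [
    ("vSAN 6.1", date(2015, 8, 1), 2000),
    ("vSAN 6.2", date(2016, 2, 1), 3000),
    ("vSAN 6.5", date(2016, 8, 1), 5000),
    ("vSAN 6.6", date(2017, 4, 1), 7000),
]


def customers_per_day(start, end):
    """Average number of customers added per day between two milestones."""
    _, start_date, start_count = start
    _, end_date, end_count = end
    return (end_count - start_count) / (end_date - start_date).days


# Rate for each pair of consecutive releases.
for prev, cur in zip(MILESTONES, MILESTONES[1:]):
    rate = customers_per_day(prev, cur)
    print(f"{prev[0]} -> {cur[0]}: {rate:.1f} customers/day")

# The simple "2,000 customers every 6 months" version of the same arithmetic.
print(f"2,000 per half year: {2000 / (365 / 2):.1f} customers/day")
```

The per-interval figures differ because the intervals in the list are not all the same length and the first one only added 1,000 customers, so any single per-day number is best read as an approximation.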

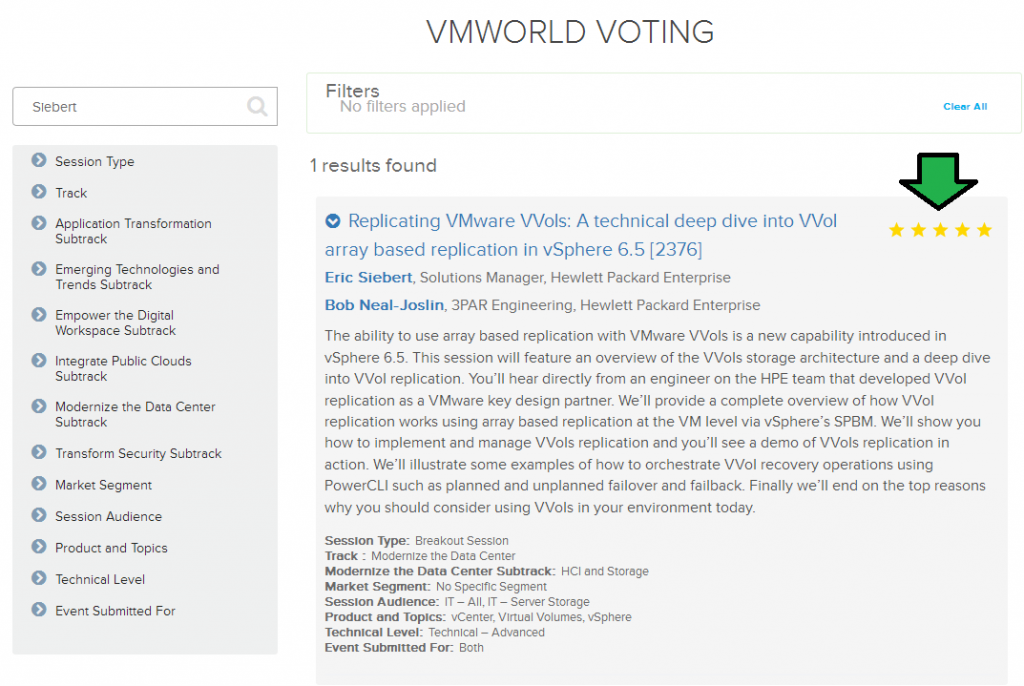

The numbers show that VMware is adding about 2,000 customers every 6 months, or about 11 customers a day, which is an impressive growth rate. Now back to what’s new in this release: the slide below illustrates the impressive list of what’s new compared to prior versions, backing up VMware’s claim of the biggest vSAN release ever.

Now some of these things are fairly minor, and I don’t know if I would call them ‘major’ features. This release seems to polish and enhance a lot of things to make for a more refined and mature product overall. I’m not going to go into a lot of detail on all of these, but I will highlight a few things. First, here’s the complete list in an easier-to-read format:

- Native encryption for data-at-rest

- Compliance certifications

- vSAN Proactive Drive HA for failing drives

- Resilient management independent of vCenter

- Rapid recovery with smart, efficient rebuilds

- Certified file service & data protection solutions

- Stretched clusters with local failure protection

- 1-click witness change for Stretched Cluster

- vSAN Management Pack for vRealize

- Enhanced vSAN SDK and PowerCLI

- Simple networking with Unicast

- vSAN Cloud Analytics for performance

- vSAN Cloud Analytics with real-time support notification and recommendations

- vSAN Config Assist with 1-click hardware lifecycle management

- Extended Health Services

- vSAN Guided install with 1-click fixes

- Up to 50% greater IOPS for all-flash with optimized checksum and dedupe

- Support for new next-gen workloads

- Support for Photon 1.1

- Optimized for latest flash technologies

- Expanded caching tier choice

- New Docker Volume Driver

Support for data-at-rest encryption isn’t really anything new: it was introduced in vSphere 6.5, can be used with any type of storage device, and is applied to individual VMs. With vSAN, encryption can now also be done at the cluster level, so your entire vSAN environment is encrypted if you desire it. Encryption is resource intensive, though, and adds overhead, as VMware has documented, so you may want to implement it at the VM level instead.

—————– Begin update

As Lee points out in the comments, a big difference with encryption in vSAN 6.6 is that storage efficiencies are preserved. This is notable because data is now encrypted after it is deduped and compressed. If you first encrypted it using the standard VM encryption in vSphere 6.5, you wouldn’t really be able to dedupe or compress it effectively. Essentially, what happens in vSAN 6.6 is that writes are broken into 4K blocks, which then get a checksum, then get deduped, then compressed, and finally encrypted (as 512-byte or smaller blocks).

—————– End update
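To see why the order matters, here is a small Python sketch of the write path described in the update: 4K blocks, checksum, dedupe, compress, then encrypt, compared against encrypting first. It is only an illustration under stated assumptions. `toy_encrypt` is a made-up XOR keystream standing in for a real cipher (it is not secure and not what vSAN uses), and mixing the block offset into the keystream is an assumption that models VM-level encryption turning identical blocks into different ciphertext.

```python
import hashlib
import zlib

BLOCK = 4096  # 4K blocks, as described in the update above


def toy_encrypt(data: bytes, key: bytes, nonce: int) -> bytes:
    """XOR data with a SHA-256 based keystream.

    Hypothetical stand-in for a real cipher, for illustration only.
    """
    out = bytearray()
    for i in range(0, len(data), 32):
        pad = hashlib.sha256(
            key + nonce.to_bytes(8, "big") + i.to_bytes(8, "big")
        ).digest()
        out.extend(b ^ p for b, p in zip(data[i:i + 32], pad))
    return bytes(out)


def stored_bytes(data: bytes, key: bytes, encrypt_first: bool) -> int:
    """Return how many payload bytes end up stored for the given ordering."""
    seen = set()       # fingerprints of blocks already stored (dedupe table)
    checksums = []     # one checksum per incoming block
    total = 0
    for off in range(0, len(data), BLOCK):
        block = data[off:off + BLOCK]
        if encrypt_first:
            # VM-level style: the block is already ciphertext on arrival.
            block = toy_encrypt(block, key, nonce=off)
        checksums.append(zlib.crc32(block))           # 1. checksum
        fingerprint = hashlib.sha256(block).digest()  # 2. dedupe
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        payload = zlib.compress(block)                # 3. compress
        if not encrypt_first:
            payload = toy_encrypt(payload, key, nonce=off)  # 4. encrypt last
        total += len(payload)
    return total


# Sample data: 8 distinct, highly compressible blocks, each written 4 times.
blocks = [(f"block-{i} ".encode() * 1024)[:BLOCK] for i in range(8)]
data = b"".join(blocks * 4)
key = b"example-key"

encrypt_last = stored_bytes(data, key, encrypt_first=False)
encrypt_before = stored_bytes(data, key, encrypt_first=True)
print(f"raw data:                {len(data)} bytes")
print(f"dedupe/compress/encrypt: {encrypt_last} bytes")
print(f"encrypt first:           {encrypt_before} bytes")
```

With encryption applied last, duplicate blocks are still recognized and the remaining blocks still compress. With encryption applied first, every block looks unique and random, so neither dedupe nor compression has much to work with.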

VMware has made improvements to stretched clustering in vSAN 6.6, allowing for storage redundancy both within a site AND across sites at the same time. This provides protection against entire site outages, as expected, but also protection against host outages within a site. They also made it easier to configure options that allow you to protect VMs across sites or just within a single site. Finally, they made it easier to change the host location of the witness component, which is essentially the third-party mediator between the 2 sites.

Performance improvements are always welcome, especially around features like dedupe and compression that can tax the host and impact workloads. In vSAN 6.6 VMware spent considerable time optimizing I/O handling and efficiency to help reduce overhead and improve overall performance. To accomplish this they did a number of things, which are detailed below:

- Improved checksum – Checksum read and write paths have been optimized to avoid redundant table lookups and to take a more direct path when fetching checksum data. Checksum reads are the biggest beneficiary.

- Improved deduplication – Destages in log order for more predictable performance, especially for sequential writes. Optimizes multiple I/Os to the same Logical Block Address (LBA). Increases parallelization for dedupe.

- Improved compression – New efficient data structure to compress metadata writes. Metadata compaction helps with improving performance for guest and backend I/O.

- Destaging optimizations – Proactively destages data to avoid metadata build-up that would impact guest IOPS or re-sync IOPS. Can help with large numbers of deletes, which invoke metadata writes. More aggressive destaging can help in write-intensive environments, reducing the times in which flow control needs to throttle. Applies to hybrid and all-flash.

- Object management improvements (LSOM File System) – Reduce compute overhead by using more memory. Optimize destaging by reducing cache/CPU thrashing.

- iSCSI for vSAN – Performance improvements made possible by an upgraded edition of FreeBSD used in vSAN (vSAN 6.5 used FreeBSD 10.1; vSAN 6.6 uses version 10.3) and by general improvements to LSOM.

- More accurate guidance on cache sizing – The earlier guidance was based on 10% of usable capacity, which didn’t represent larger capacity footprints well.

VMware performed testing between vSAN 6.5 and vSAN 6.6 using 70/30 random workloads and found a 53%-63% improvement in performance, which is quite significant. If nothing else, this alone makes for a good reason to upgrade.

To find out even more about vSAN 6.6, check out the links below from VMware:

- What’s New with VMware vSAN 6.6 (Virtual Blocks blog)

- vSAN 6.6 – Native Data-at-Rest Encryption (Virtual Blocks blog)

- vSAN 6.6 Online Health Check and performance improvements (Virtual Blocks blog)

- Goodbye Multicast (Virtual Blocks blog)

- 04/11: What’s New in vSAN 6.6 (VMware webinar)