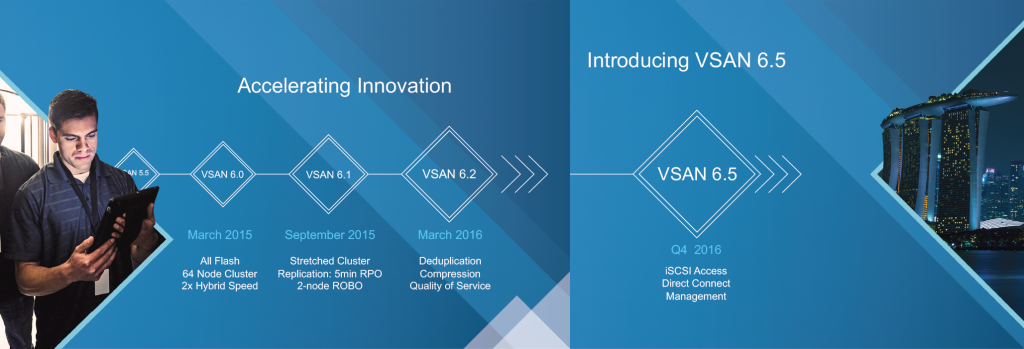

VMware has just announced a new release of VSAN as part of vSphere 6.5, and this post will provide you with an overview of what is new in this release. Before we jump into that, let’s look at a brief history of VSAN so you can see how it has evolved over its fairly short life cycle.

- August 2011 – VMware officially becomes a storage vendor with the release of vSphere Storage Appliance 1.0

- August 2012 – VMware CTO Steve Herrod announces new Virtual SAN initiative as part of his VMworld keynote (47:00 mark of this recording)

- September 2012 – VMware releases version 5.1 of their vSphere Storage Appliance

- August 2013 – VMware unveils VSAN as part of VMworld announcements

- September 2013 – VMware releases VSAN public beta

- March 2014 – GA of VSAN 1.0 as part of vSphere 5.5 Update 1

- April 2014 – VMware announces EOA of vSphere Storage Appliance

- March 2015 – VMware releases version 6.0 of VSAN as part of vSphere 6 which includes the following enhancements: all-flash deployment model, increased scalability to 64 hosts, new on-disk format, JBOD support, new vsanSparse snapshot disk type, improved fault domains and improved health monitoring. Read all about it here.

- September 2015 – VMware releases version 6.1 of VSAN which includes the following enhancements: stretched cluster support, vSMP support, enhanced replication and support for 2-node VSAN clusters. Read all about it here.

- March 2016 – VMware releases version 6.2 of VSAN which includes the following enhancements: deduplication and compression support, erasure coding support (RAID 5/6) and new QoS controls. Read all about it here.

With this 6.5 release VSAN turns 2 1/2 years old, and it’s remarkable how far it has come in that time frame. Note that while VMware has announced VSAN 6.5, it is not yet available. If VMware operates in its traditional manner, I suspect you will see it GA in about 30 days as part of vSphere 6.5. Unlike previous versions, there isn’t a huge list of new things in this release of VSAN, but that doesn’t mean there aren’t some big things in it. Let’s now dive into what’s new in VSAN version 6.5.

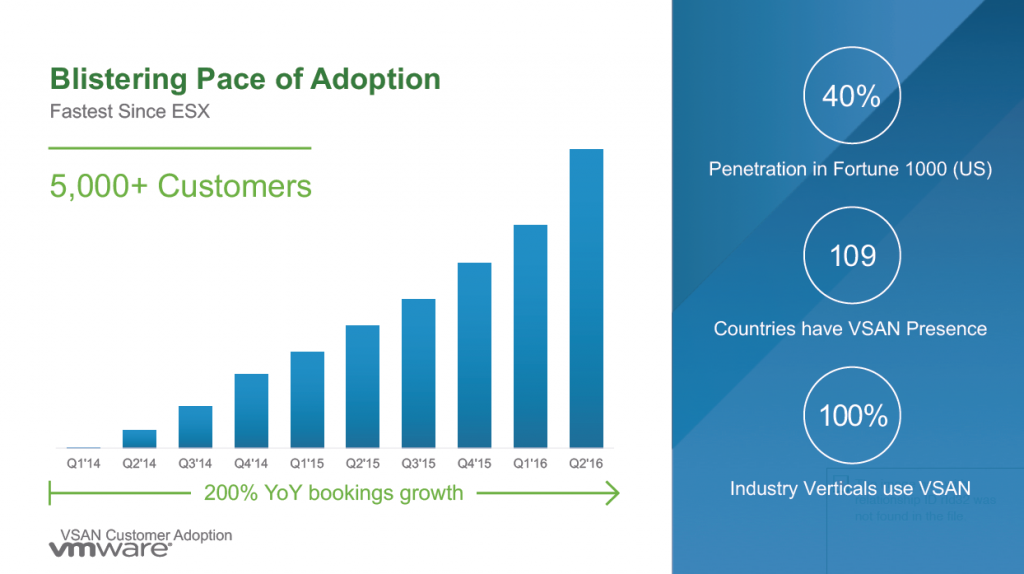

Customer adoption of VSAN continues to increase

With this release VMware is claiming that it has over 5,000 VSAN customers and that it is the #1 hyper-converged vendor. That 5,000 number sounds low to back up the #1 claim, but VMware is basing this on total revenue and not customer counts. VMware had stated that it had an over $100 million revenue run rate and over 20,000 CPU licenses sold with VSAN back when it had over 3,000 customers, which would put the average VSAN deal size around $30,000. The VSAN growth rate over the past two years according to VMware has been as follows:

- Aug 2015 – VSAN 6.1 – 2,000 customers

- Feb 2016 – VSAN 6.2 – 3,000 customers

- Aug 2016 – VSAN 6.5 – 5,000 customers

From these numbers it appears that VMware has added a lot of VSAN customers in the last 6 months which can be attributed to their aggressive sales/marketing and rapid product development life-cycle.
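As a rough check on the figures above, the arithmetic can be sketched in a few lines of Python. The inputs are the numbers VMware quoted; spreading the $100 million run rate evenly across 3,000 customers is a simplifying assumption of this sketch, not something VMware has published.

```python
# Figures quoted by VMware, treated as round lower bounds ("over ...").
REVENUE_RUN_RATE_USD = 100_000_000
CUSTOMERS_AT_RUN_RATE = 3_000

# Customer counts reported at each announcement: (date, version, customers).
CUSTOMER_COUNTS = [
    ("Aug 2015", "6.1", 2_000),
    ("Feb 2016", "6.2", 3_000),
    ("Aug 2016", "6.5", 5_000),
]


def average_deal_size(revenue, customers):
    """Revenue divided evenly across customers (a simplification)."""
    return revenue / customers


def growth_rates(counts):
    """Percentage growth between consecutive announcements."""
    rates = []
    for (_, _, prev), (date, _, cur) in zip(counts, counts[1:]):
        rates.append((date, (cur - prev) / prev * 100))
    return rates


deal = average_deal_size(REVENUE_RUN_RATE_USD, CUSTOMERS_AT_RUN_RATE)
print(f"Average deal size: ${deal:,.0f}")
for date, pct in growth_rates(CUSTOMER_COUNTS):
    print(f"{date}: +{pct:.0f}% customers vs. previous announcement")
```

This works out to roughly $33,000 per customer, with the customer count growing by about 50% and then about 67% over the two six-month periods.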

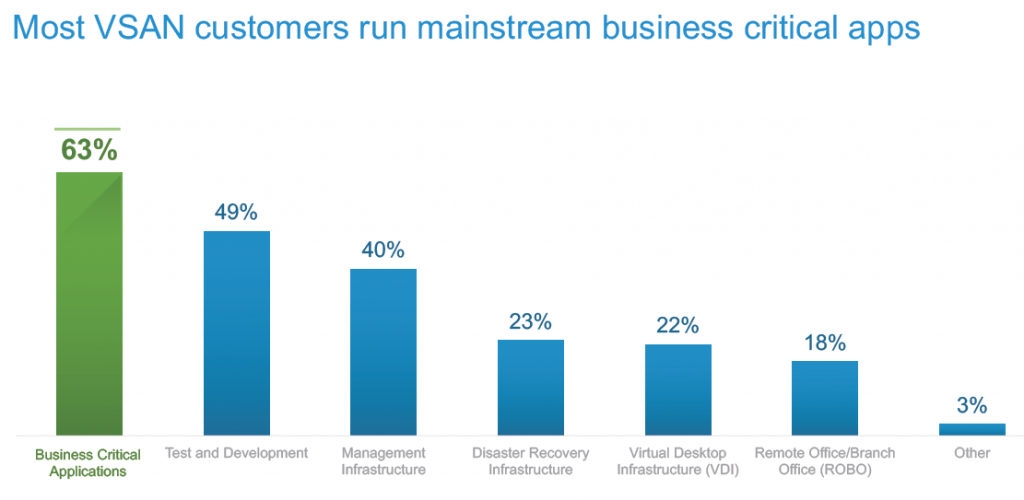

VSAN is going mainstream with the #1 use case being business critical apps

Back when VSAN was first announced, VMware positioned it more for VDI, Tier 2/3, ROBO and dev/test use cases. As VSAN has evolved and gained scalability, resiliency and enterprise features, VMware has been claiming since vSphere 6.0 that it is ready for the enterprise and business critical/Tier-1 apps. Apparently VMware has done some customer research (249 respondents) and is claiming that business critical apps are the #1 VSAN use case by quite a large margin. I don’t really doubt that number, as VSAN is a significant financial investment for customers, and when you are making that big of an investment in storage you are going to maximize your usage of it. Add in all-flash support and enterprise features and I can definitely see that many customers running business critical apps on VSAN, as I suspect VSAN serves as the primary vSphere storage platform for those customers.

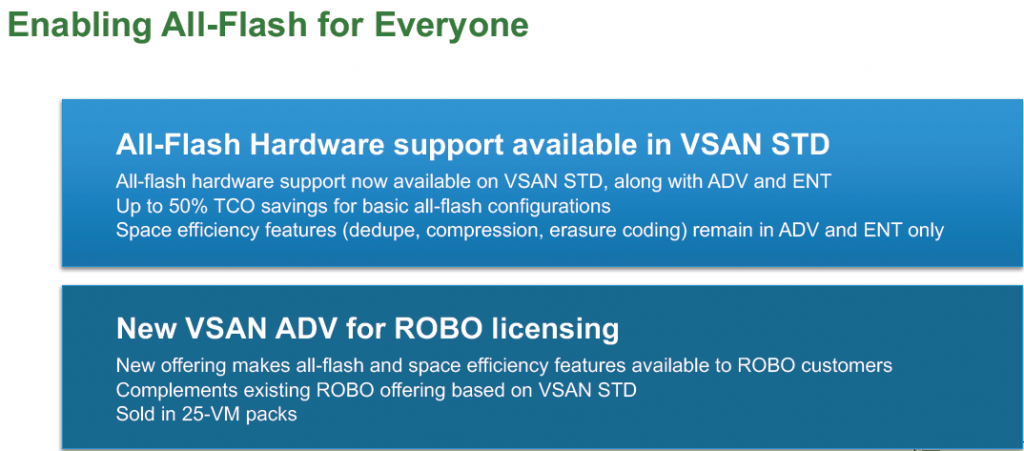

VMware is trying to make All-Flash affordable for everyone

Based on licensing models in prior releases of VSAN, you had to pay an all-flash tax if you wanted to get the maximum performance that VSAN can offer by utilizing all SSD storage. With VSAN 6.2 the Standard license was priced at $2,495/CPU and did not include the ability to use VSAN in an all-flash configuration. To get all-flash support you had to purchase the VSAN Advanced license, which was priced at $3,995/CPU and also included de-dupe, compression and erasure coding. VSAN Enterprise was priced at $5,495/CPU and added support for QoS and stretched clustering.

With VSAN 6.5 that all-flash tax is gone, but with a caveat: you can now deploy VSAN in an all-flash configuration with the Standard license, but you do not get the space efficiency features with it. For those you still have to move up to an Advanced license. In addition, VMware is offering a new VSAN Advanced ROBO license that brings all-flash and space efficiency features to ROBO at a more affordable price point. This new ROBO licensing will be sold in 25-VM packs; if you exceed 25 VMs you have to move up to the regular VSAN license tiers. All in all, with the affordability of all-flash media these days, it looks like VMware is responding to avoid alienating customers that want to use all-flash but can’t afford the higher licensing tiers.
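To make the tiering concrete, here is a minimal Python sketch that picks the lowest regular edition covering a set of required features. The feature names are labels invented for this example, and the sketch assumes that apart from all-flash moving to Standard, the 6.5 tiers match the 6.2 split described above. The ROBO packs are left out.

```python
# Cumulative feature sets per edition, lowest tier first.
# Assumption: only all-flash moved down to Standard in 6.5; everything
# else follows the 6.2 split described in the text.
EDITIONS = [
    ("Standard", {"all_flash"}),
    ("Advanced", {"all_flash", "dedupe", "compression", "erasure_coding"}),
    ("Enterprise", {"all_flash", "dedupe", "compression", "erasure_coding",
                    "qos", "stretched_cluster"}),
]


def lowest_edition(required):
    """Return the first (cheapest) edition whose features cover `required`."""
    required = set(required)
    for name, features in EDITIONS:
        if required <= features:
            return name
    raise ValueError(f"No edition provides: {sorted(required)}")


print(lowest_edition({"all_flash"}))
print(lowest_edition({"all_flash", "dedupe"}))
print(lowest_edition({"all_flash", "stretched_cluster"}))
```

Under those assumptions, all-flash alone lands on Standard, adding de-dupe pushes you to Advanced, and stretched clustering requires Enterprise.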

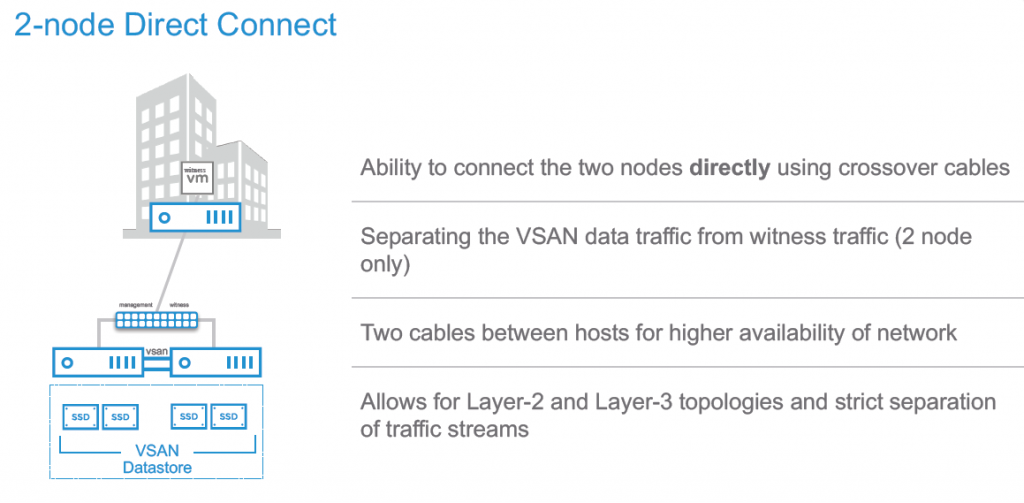

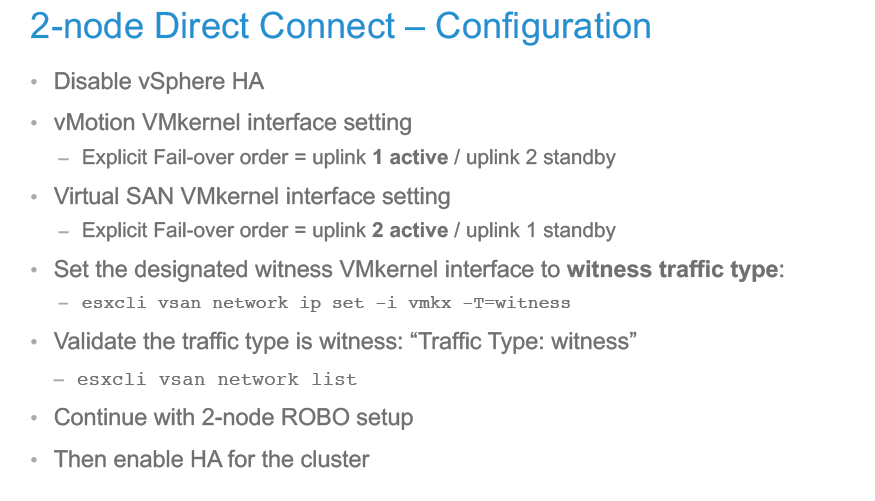

New 2-node Direct Connect deployment mode

Remember back in the day when we used special cables to connect two PCs directly together and then used Laplink to transfer data back and forth between them? Because you had a direct connection between the devices, it was cheaper, easier, faster and more secure to transfer data. The same holds true with storage devices: a direct connection eliminates the need to connect two nodes together via a network switch, an approach that was fairly common with SAS storage devices.

In vSphere 6.5 VMware has come out with a new 2-node direct connect deployment model for those use cases where it might be desirable to connect only 2 VSAN nodes together, such as ROBO or SMB. This can help drive down costs as it eliminates the need for 10Gbps switches. It also reduces deployment complexity and helps customers with compliance concerns, as VSAN traffic will never touch the network. Basically this solution involves just connecting a NIC port from one VSAN node directly to another VSAN node using a special crossover network cable. In prior versions of VSAN this wasn’t possible because VSAN traffic and witness traffic both ran over the same VMkernel port, so if you used a direct connection there was no way to communicate with the witness.

To make this possible, VSAN 6.5 gives you the ability to move witness traffic onto a separate VMkernel port, which essentially de-couples it from the VSAN traffic flow. To use this solution it is recommended to use 2 VMkernel connections in an active/standby configuration. You then have to designate which VMkernel interface will carry the witness traffic by using an esxcli command. You can continue to use vMotion with this type of configuration.
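The tagging step is typically done with `esxcli vsan network ip add` and a traffic type of `witness`. The following is a minimal Python sketch that only builds the command line and prints it; it does not run anything. The interface name `vmk1` is a placeholder, and the exact flags should be checked against the esxcli reference for your ESXi build before use.

```python
def witness_tag_command(vmk_interface):
    """Build the esxcli argv that tags a VMkernel interface for witness traffic.

    Assumption: the 6.5-era syntax `esxcli vsan network ip add -i <vmk> -T=witness`.
    """
    if not vmk_interface.startswith("vmk"):
        raise ValueError(f"Expected a VMkernel interface like 'vmk1', got {vmk_interface!r}")
    return ["esxcli", "vsan", "network", "ip", "add",
            "-i", vmk_interface, "-T=witness"]


# 'vmk1' is a hypothetical interface reserved for witness traffic.
print(" ".join(witness_tag_command("vmk1")))
```

You would run the resulting command on each of the two data nodes, pointing at whichever VMkernel interface can actually reach the witness.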

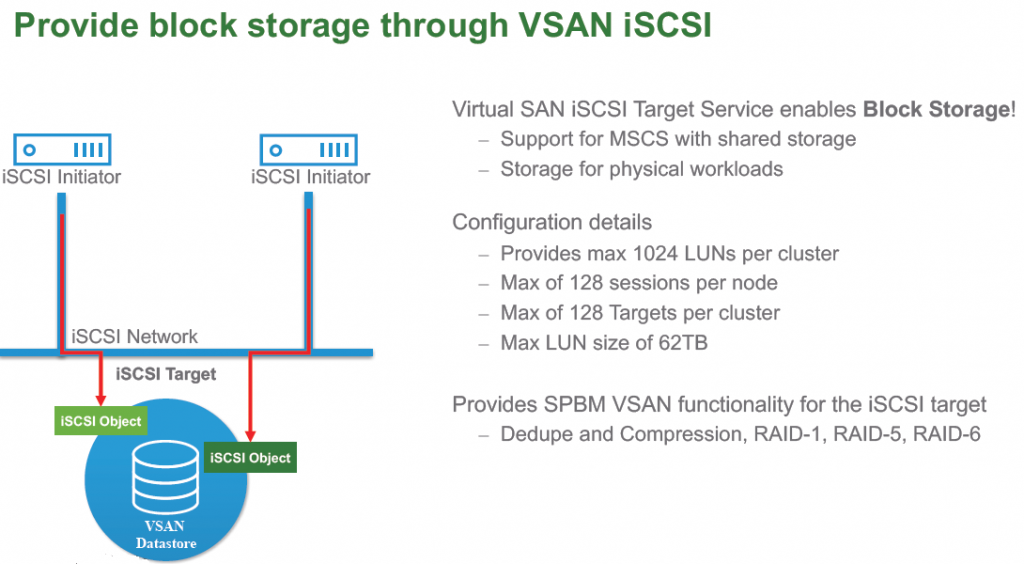

VSAN can now provide storage to more than just vSphere hosts with iSCSI support

This is a big one. In prior releases VSAN presented storage to ESXi hosts and other VSAN nodes via a proprietary communication protocol, which meant that nothing but an ESXi host could use VSAN for shared storage. In VSAN 6.5 the proprietary protocol is still used as the main transport between hosts, but support for an industry standard protocol has been added in the form of iSCSI. What this means is that potentially any device in the data center, be it physical or virtual, could utilize VSAN as primary storage.

Why is this big? It opens the door for VSAN to be used for just about every use case in the data center and eliminates the barrier that may have previously required customers to deploy an additional primary storage array to fulfill their non-vSphere shared storage needs. In other words, VSAN is now positioned to take over your entire data center. However, VMware is currently not targeting this solution at non-VSAN ESXi clusters, though those shouldn’t exist in a perfect VSAN world.

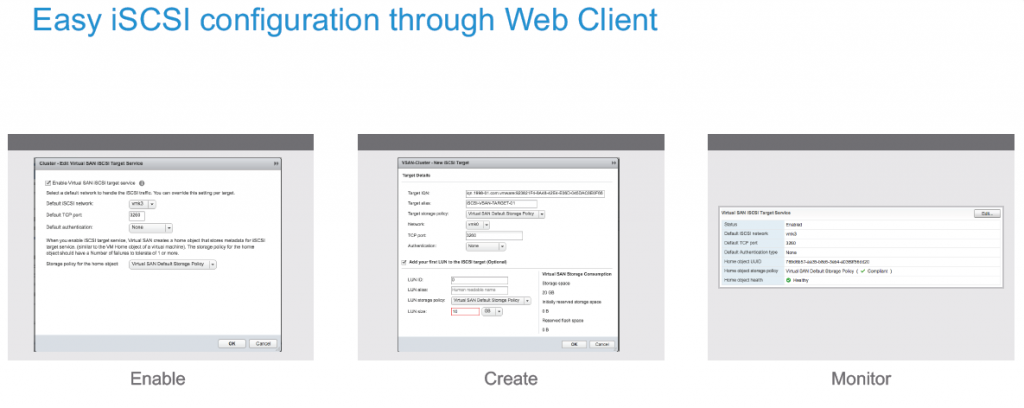

iSCSI support is implemented natively within the VMkernel and does not use any type of virtual appliance. There are some scale limitations, with only 128 targets and 1024 LUNs supported, but the solution is compatible with Storage Policy Based Management so you can extend SPBM to more than just VMs. To use this you must first enable the Virtual SAN iSCSI target service and select a VMkernel port, which is then set automatically across all of the VSAN cluster nodes. You can then select a default SPBM policy and optionally configure iSCSI authentication (CHAP). You then configure LUN information such as the LUN number and size (up to 62TB) and optionally multiple initiators.
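The limits above are easy to check up front when planning a layout. Here is a minimal Python sketch of such a check. It assumes the 128-target and 1024-LUN figures are cluster-wide totals (the announcement does not spell out the scope), and the plan structure is made up for this example.

```python
# Limits quoted for VSAN 6.5 iSCSI; scope assumed to be per cluster.
MAX_TARGETS = 128
MAX_LUNS = 1024
MAX_LUN_SIZE_TB = 62


def validate_iscsi_plan(targets):
    """Check a plan against the quoted limits.

    `targets` maps a target name to a list of LUN sizes in TB.
    Returns a list of human-readable problems (empty if the plan fits).
    """
    problems = []
    if len(targets) > MAX_TARGETS:
        problems.append(f"{len(targets)} targets exceeds the limit of {MAX_TARGETS}")
    total_luns = sum(len(luns) for luns in targets.values())
    if total_luns > MAX_LUNS:
        problems.append(f"{total_luns} LUNs exceeds the limit of {MAX_LUNS}")
    for name, luns in targets.items():
        for size_tb in luns:
            if size_tb > MAX_LUN_SIZE_TB:
                problems.append(f"{name}: {size_tb}TB LUN exceeds {MAX_LUN_SIZE_TB}TB")
    return problems


# Hypothetical plan: two targets, one LUN too large.
plan = {"sql-cluster": [10, 62], "file-server": [80]}
for problem in validate_iscsi_plan(plan):
    print(problem)
```

Returning a list of problems rather than raising on the first one makes it easier to fix a whole plan in one pass.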

Enhanced PowerCLI support

For those that want to automate VSAN operations, VSAN PowerCLI has been enhanced to cover things like Health Check remediation, iSCSI configuration, Capacity and Resync monitoring and more.

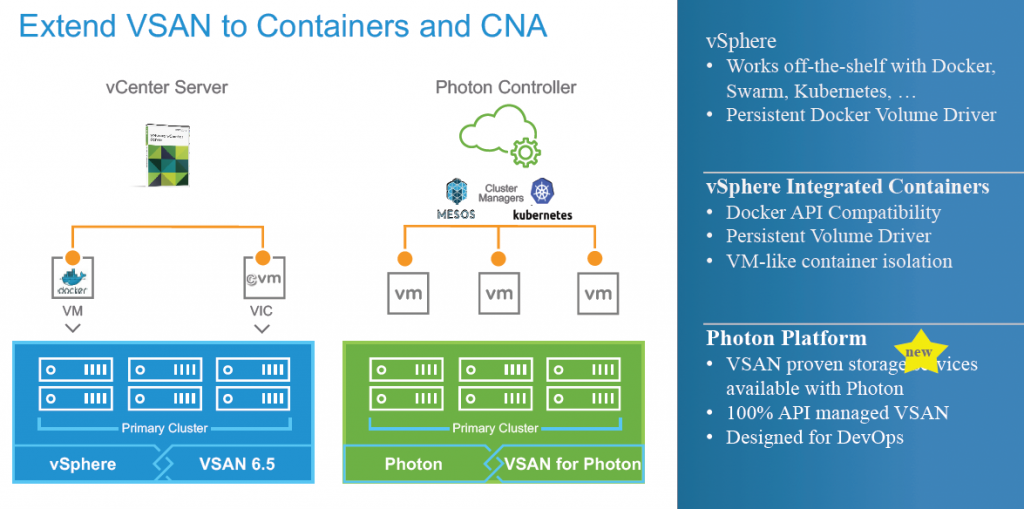

Support for Cloud Native Apps running on the Photon Platform

Finally, VSAN support for VMware Cloud Native Applications has been extended to include the Photon Platform. This positions VSAN to be able to handle any VMware-centric container deployment model.