VMware just announced a new release of VSAN, version 6.2, and this post will provide you with an overview of what is new in this release. Before we jump into that, let’s look at a brief history of VSAN so you can see how it has evolved over its fairly short life cycle.

- August 2011 – VMware officially becomes a storage vendor with the release of vSphere Storage Appliance 1.0

- August 2012 – VMware CTO Steve Herrod announces new Virtual SAN initiative as part of his VMworld keynote (47:00 mark of this recording)

- September 2012 – VMware releases version 5.1 of their vSphere Storage Appliance

- August 2013 – VMware unveils VSAN as part of VMworld announcements

- September 2013 – VMware releases VSAN public beta

- March 2014 – GA of VSAN 1.0 as part of vSphere 5.5 Update 1

- April 2014 – VMware announces EOA of vSphere Storage Appliance

- February 2015 – VMware releases version 6.0 of VSAN as part of vSphere 6 which includes the following enhancements: All-flash deployment model, increased scalability to 64 hosts, new on-disk format, JBOD support, new vsanSparse snapshot disk type, improved fault domains and improved health monitoring. Read all about it here.

- August 2015 – VMware releases version 6.1 of VSAN which includes the following enhancements: stretched cluster support, vSMP support, enhanced replication and support for 2-node VSAN clusters. Read all about it here.

With this 6.2 release VSAN turns 2 years old and it has come a long way in those two years. Note that while VMware has announced VSAN 6.2, it is not yet available. If VMware operates in their traditional manner, I suspect you will see it GA sometime in March as part of vSphere 6.0 Update 2. Let’s now dive into what’s new in VSAN version 6.2. After reading this post you should also check out VMware’s What’s New with VMware Virtual SAN 6.2 white paper for more detailed information.

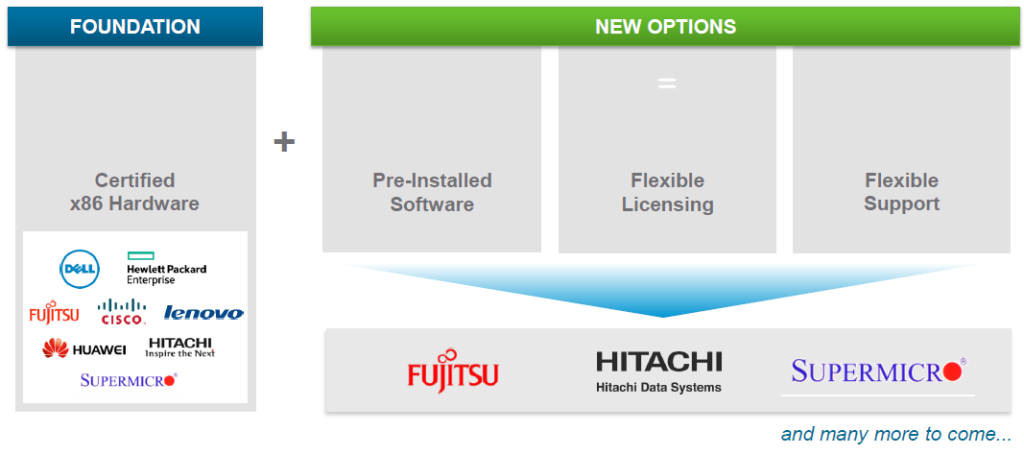

VMware continues to expand the Ecosystem and tweak licensing

VMware is continually trying to expand the market for VSAN and has put a lot of effort into working with hardware partners to expand the ecosystem. You’ll notice a couple of key changes here that address things that have held them back in the past. The first is a more flexible licensing and support model. In addition, VMware is now trying to get VSAN pre-installed on server hardware to make it even easier for customers to deploy it. You’ll see support from Fujitsu, Hitachi and SuperMicro right away on this, and I suspect you’ll also see Dell and Cisco at some point. Don’t hold your breath for HP Enterprise to do this though.

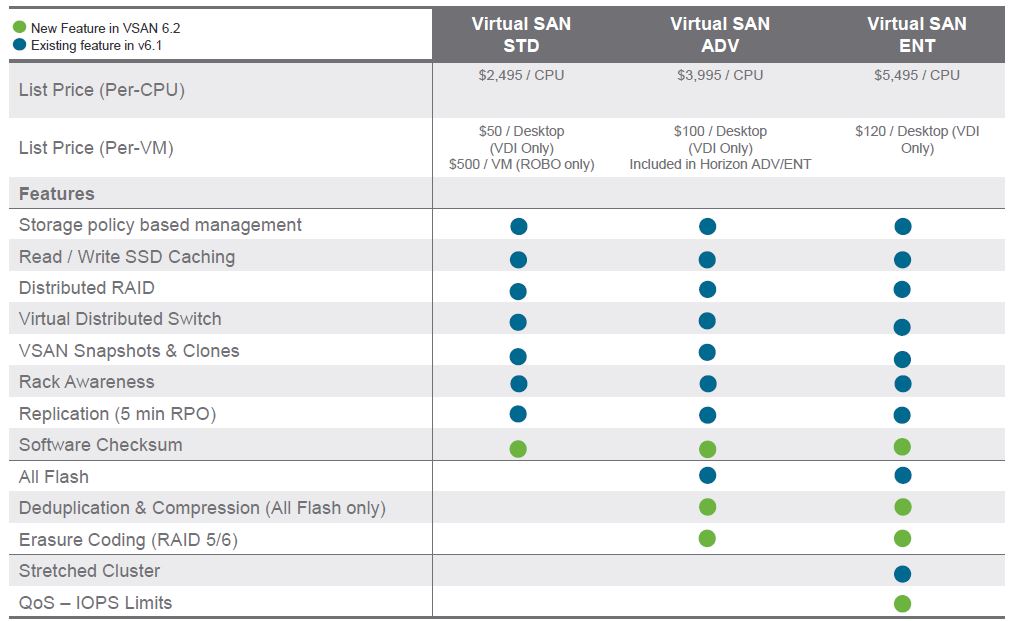

In VSAN 6.1 licensing was split into Standard and Advanced, with the Advanced license getting you the ability to use the All-Flash deployment model. In VSAN 6.2 a new licensing tier is added, Enterprise, which provides the ability to use Stretched Clustering and QoS (IOPS Limits). Note the new de-dupe and compression features in VSAN 6.2 are included in Advanced. Also, current Advanced customers are entitled to get VSAN Enterprise.

There are more VSAN customers than ever

You would sure hope so. VMware is now claiming 3,000 VSAN customers. Back in August with the release of VSAN 6.1 they claimed 2,000 customers, so if you do the math they have added 1,000 new VSAN customers in the past 6 months. That is not bad growth, but I’m sure VMware would like to see that number a lot higher after 2 years of VSAN GA. VMware is also claiming “More Customers Enable HCI with VMware HCS than Competition”. I’m not sure I believe that claim, and I wonder where they got the numbers that prove it.

What’s new: the quick overview

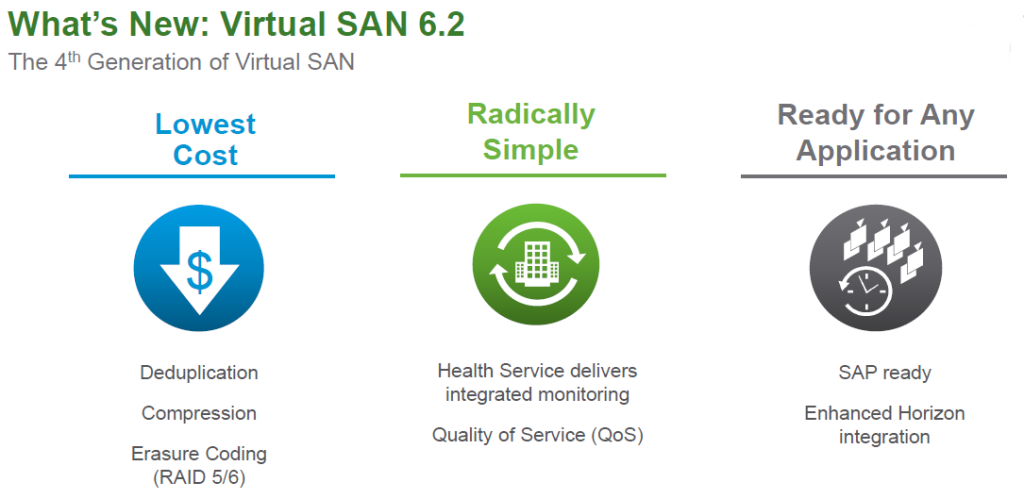

We’ll dive deeper into these areas, but this gives you the quick overview of what’s new in VSAN 6.2 if you want to do the TL;DR thing. The big things are deduplication and compression, QoS and new RAID levels.

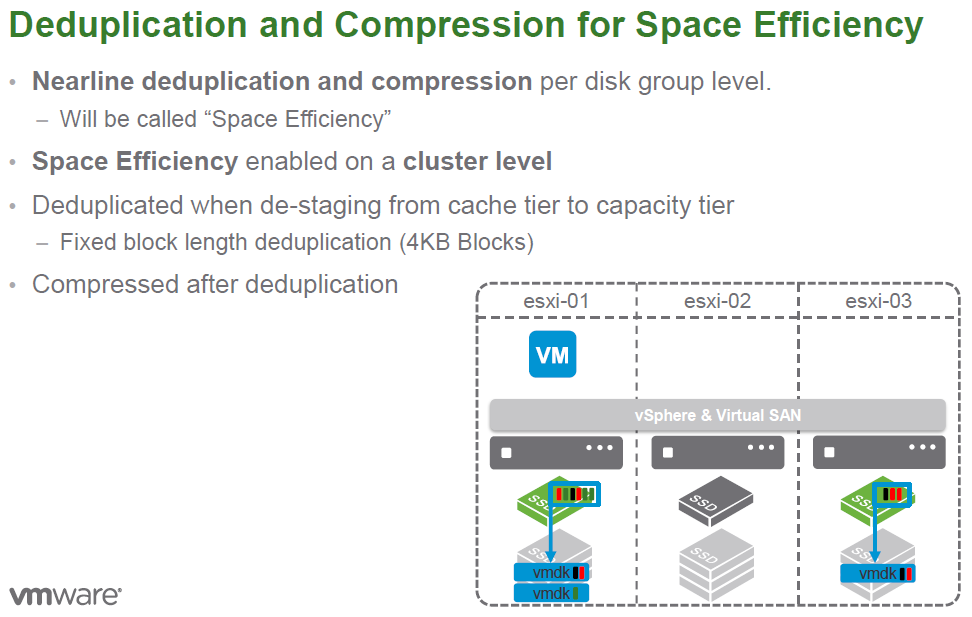

Deduplication and Compression

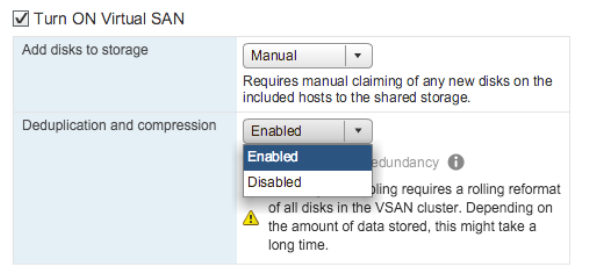

If you’re going to play in the storage big leagues you have to have these key features and VSAN now has them, but only on the All-Flash VSAN deployment model. This is pretty much in line with what you see in the industry as de-dupe and compression and SSDs are a perfect match so you can make more efficient use of the limited capacity of SSD drives. VMware hasn’t provided a lot of detail on how their implementation works under the covers beyond this slide but I suspect you will see a technical white paper on it at some point.

Note this deduplication is enabled at the cluster level, so you can’t pick and choose which VSAN hosts it will be enabled on. While it is inline, the de-dupe operation occurs after data is written to the write cache and before it is moved to the capacity tier, and compression happens right after de-dupe. VMware refers to this method as “nearline”, and it means they don’t waste resources trying to de-dupe “hot” data that is frequently changing. The de-dupe block size is fixed at 4KB. Across the storage industry the block size tends to range from 4KB to 32KB, with many vendors choosing block sizes greater than 4KB. 4KB is definitely a lot more granular, which can result in higher de-dupe ratios.

Deduplication and compression are tied together with VSAN, meaning they work together and you can’t just enable one or the other. Of course enabling deduplication and compression will add resource overhead to your hosts, as they are both CPU intensive operations. VMware claims it is minimal (around 5%) as they are using LZ4 compression, which is designed to be extremely fast with minimal CPU overhead, but I’d like to see comparisons with this enabled and disabled to see how much impact it really has.
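
To make the de-dupe-then-compress ordering a bit more concrete, here is a minimal Python sketch of fixed-size 4KB block deduplication followed by compression of each unique block. This is only an illustration and not VMware’s implementation: the SHA-256 fingerprint, the in-memory dictionary and the function names are my own assumptions, and `zlib` stands in for LZ4 simply because LZ4 is not in the Python standard library.

```python
import hashlib
import zlib

BLOCK_SIZE = 4096  # fixed 4KB de-dupe block size mentioned in the post


def dedupe_and_compress(data: bytes):
    """Split data into 4KB blocks, keep one compressed copy per unique block.

    Returns (store, layout): store maps a block fingerprint to the compressed
    block, layout is the ordered list of fingerprints needed to rebuild data.
    SHA-256 and zlib are stand-ins chosen for this sketch (assumptions).
    """
    store = {}
    layout = []
    for offset in range(0, len(data), BLOCK_SIZE):
        block = data[offset:offset + BLOCK_SIZE]
        fingerprint = hashlib.sha256(block).digest()
        if fingerprint not in store:
            # De-dupe first, then compress only blocks not seen before.
            store[fingerprint] = zlib.compress(block)
        layout.append(fingerprint)
    return store, layout


def restore(store, layout) -> bytes:
    """Rebuild the original data from the de-duplicated, compressed store."""
    return b"".join(zlib.decompress(store[fp]) for fp in layout)


if __name__ == "__main__":
    sample = b"A" * BLOCK_SIZE + b"B" * BLOCK_SIZE + b"A" * BLOCK_SIZE
    store, layout = dedupe_and_compress(sample)
    stored_bytes = sum(len(b) for b in store.values())
    print(f"logical blocks: {len(layout)}, unique blocks: {len(store)}")
    print(f"logical bytes: {len(sample)}, stored bytes: {stored_bytes}")
```

The smaller the block size, the more likely two blocks are to match exactly, which is why a 4KB block can yield higher de-dupe ratios than larger blocks at the cost of tracking more fingerprints.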

New RAID levels

VSAN has never required the use of any hardware RAID configured on the server side. You essentially use RAID-0 (no RAID) when configuring your disks and then VSAN handles redundancy by doing its own RAID at the VM level. Prior to 6.2 there was only one option for this, which was essentially RAID-1 (mirroring), where whole copies of a VM are written to additional hosts for redundancy in case of a host failure. While that worked OK, it consumed a lot of extra disk capacity on hosts as well as more host overhead.

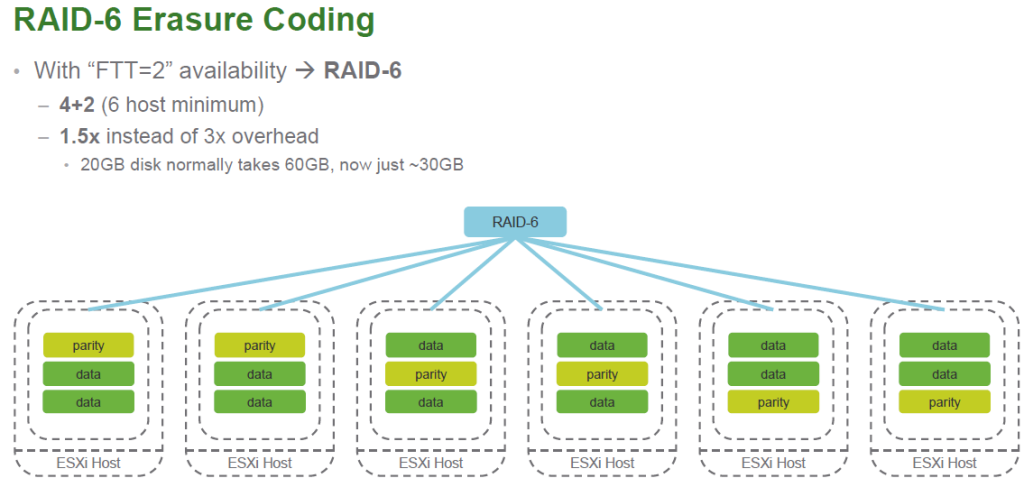

With 6.2 VMware has introduced two new RAID levels, RAID-5 and RAID-6, which improve efficiency and reduce the capacity required. These new RAID levels are only available on the All-Flash deployment model and can be enabled at a per-VM level. They refer to these methods as “Erasure Coding”, which is different from traditional RAID in the way that data is broken up and written. Erasure coding is supposed to be more efficient than RAID when re-constructing data, with the downside that it can be more CPU intensive than RAID. These new RAID levels work much like their equivalent traditional disk RAID levels, except that data and parity are striped across multiple hosts. In 6.2 these new RAID levels do not support stretched clustering but VMware expects to support that later on.

RAID-5 requires a minimum of 4 hosts to enable and is configured as 3+1, where each stripe is made up of 3 data blocks plus 1 parity block spread across 4 hosts. Using RAID-5 the total capacity consumed is only 1.33 times the size of the VM, whereas RAID-1 always consumes double (2x). As a result a VM that is 20GB in size will only consume roughly an additional 7GB on other hosts with RAID-5. With RAID-1 it would consume an additional 20GB as you are writing an entire copy of the VM to another host.

With RAID-6 you are providing additional protection by writing an additional parity block, and as a result there is a 6 host minimum (4+2) and the total capacity consumed is only 1.5 times the size of the VM. This provides better protection by allowing you to survive up to 2 host failures. Using RAID-6 a 20GB VM would only consume an additional 10GB of disk on other hosts. If you did this with RAID-1 it would consume an additional 40GB as you are writing two copies of the entire VM to other hosts.

These RAID levels are tied to the Failures To Tolerate (FTT) setting in the VSAN configuration, which specifies how many failures VSAN can tolerate before data loss occurs. When FTT is set to 1, RAID-5 is utilized and you can tolerate one host failure and not lose any data. When FTT is set to 2, RAID-6 is utilized and you can tolerate two host failures and not lose any data. While there is a minimum number of hosts required to use these RAID levels, once you meet that number any number of hosts is supported with them. If you have fewer than 4 hosts in a VSAN cluster then the old RAID-1 is used.
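
The capacity numbers above are easy to check yourself. Here is a small Python sketch that works through the arithmetic for mirroring versus erasure coding at each FTT value. The function names are mine and purely illustrative; the 3+1 and 4+2 layouts and the 20GB example come from the figures in this post.

```python
from fractions import Fraction

# FTT -> (data fragments, parity fragments) for the erasure coding layouts
# described above: RAID-5 is 3+1, RAID-6 is 4+2.
ERASURE_LAYOUTS = {1: (3, 1), 2: (4, 2)}


def raw_capacity_gb(vm_gb, ftt, erasure_coding):
    """Total capacity consumed across the cluster for a VM of vm_gb."""
    if erasure_coding:
        data, parity = ERASURE_LAYOUTS[ftt]
        return Fraction(vm_gb) * (data + parity) / data
    # RAID-1 mirroring keeps FTT extra full copies of the VM.
    return Fraction(vm_gb) * (ftt + 1)


def additional_capacity_gb(vm_gb, ftt, erasure_coding):
    """Capacity consumed on top of the VM's own size."""
    return raw_capacity_gb(vm_gb, ftt, erasure_coding) - vm_gb


if __name__ == "__main__":
    for ftt in (1, 2):
        mirror = additional_capacity_gb(20, ftt, erasure_coding=False)
        erasure = additional_capacity_gb(20, ftt, erasure_coding=True)
        print(f"FTT={ftt}: RAID-1 adds {float(mirror):.2f}GB, "
              f"erasure coding adds {float(erasure):.2f}GB")
```

For a 20GB VM this gives an extra 20GB (RAID-1) versus about 6.67GB (RAID-5) at FTT=1, and an extra 40GB (RAID-1) versus 10GB (RAID-6) at FTT=2.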

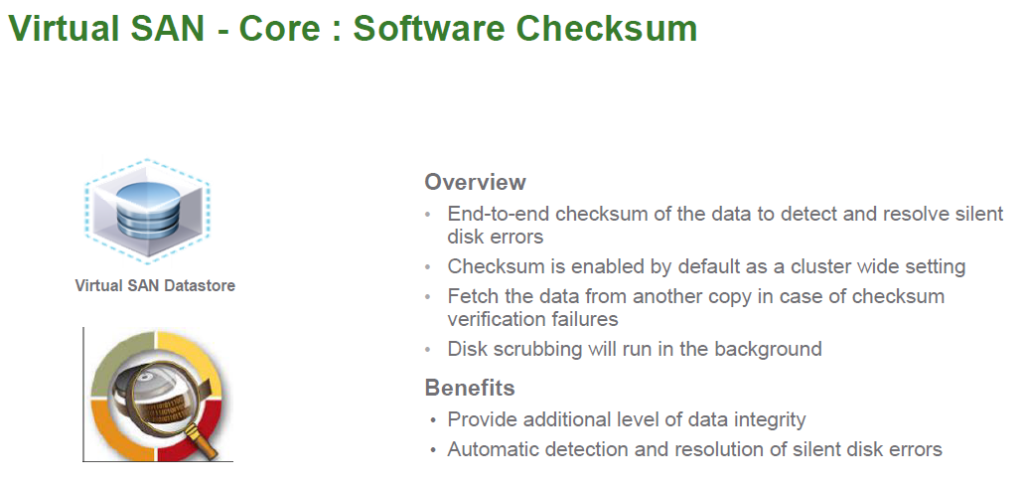

New Software Checksum

A new software checksum has been introduced for increased resiliency that is designed to provide even better data integrity and complement hardware checksums. This will help in case data corruption occurs because of disk errors. A checksum is a calculation using a hash function that essentially takes a block of data and assigns a value to it. A background process will use checksums to validate the integrity of data at rest by looking at disk blocks and comparing the current checksum of that block to its last known value, which is stored in a table. If an error or mismatch occurs it will replace that block with another copy that is rebuilt from the redundant data (mirror copy or parity) stored on other hosts. It is enabled by default at the cluster level and can be disabled on a per-VM basis if needed.
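
As a rough illustration of the scrubbing idea described above, here is a minimal Python sketch that compares each block’s current checksum against a stored table and repairs mismatches from a redundant copy. This is not how VSAN is actually coded: the function names and the simple list-based “replica” are hypothetical, and `zlib.crc32` is just a convenient stand-in checksum from the standard library.

```python
import zlib


def checksum(block: bytes) -> int:
    """Stand-in checksum for this sketch (an assumption, not VSAN's own)."""
    return zlib.crc32(block)


def scrub(blocks, checksum_table, replica):
    """Validate blocks at rest and repair mismatches from a redundant copy.

    blocks         -- list of bytes objects as currently read from disk
    checksum_table -- last known good checksum for each block
    replica        -- hypothetical redundant copy held on another host
    Returns the indexes of the blocks that were repaired.
    """
    repaired = []
    for index, block in enumerate(blocks):
        if checksum(block) == checksum_table[index]:
            continue  # block is intact
        candidate = replica[index]
        # Only trust the redundant copy if it matches the known checksum.
        if checksum(candidate) == checksum_table[index]:
            blocks[index] = candidate
            repaired.append(index)
    return repaired


if __name__ == "__main__":
    good = [b"block-0", b"block-1", b"block-2"]
    table = [checksum(b) for b in good]
    on_disk = list(good)
    on_disk[1] = b"bl0ck-1"  # simulate silent corruption
    print("repaired blocks:", scrub(on_disk, table, good))
```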

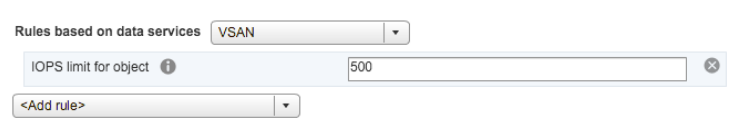

New Quality of Service (QoS) controls

VSAN has some new QoS controls designed to regulate storage performance within a host to protect against noisy neighbors or for anyone looking to set and manage performance SLAs on a per VM basis. The new QoS controls work via vSphere Storage Policies and allow you to set IOPS limits on VMs and virtual disks. These limits will be initially based on a 32KB block size but that will be adjustable as needed. VMware didn’t go into a lot of detail on how this all works but it seems fairly straightforward as you are just capping the amount of IOPS that a VM can consume.
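
To show why the 32KB block size matters for an IOPS limit, here is a small Python sketch of how I/Os of different sizes might be counted against a limit. VMware did not spell out the mechanics in the announcement, so treat the counting rule here as my assumption: any I/O up to 32KB counts as one, and larger I/Os count as multiples of 32KB, rounded up. The function names are hypothetical.

```python
NORMALIZED_IO_BYTES = 32 * 1024  # 32KB base size mentioned in the post


def normalized_io_count(io_bytes: int) -> int:
    """Number of 'normalized' I/Os a single I/O of io_bytes counts as.

    Assumption for this sketch: round up to the next multiple of 32KB,
    with a minimum of one.
    """
    return max(1, -(-io_bytes // NORMALIZED_IO_BYTES))


def exceeds_limit(io_sizes_in_one_second, iops_limit: int) -> bool:
    """True if the I/Os issued in one second go over the configured limit."""
    total = sum(normalized_io_count(size) for size in io_sizes_in_one_second)
    return total > iops_limit


if __name__ == "__main__":
    # 500 I/Os of 64KB each count the same as 1,000 I/Os of 32KB or less.
    workload = [64 * 1024] * 500
    print("normalized IOPS:", sum(normalized_io_count(s) for s in workload))
    print("over a 1000 IOPS limit:", exceeds_limit(workload, 1000))
```

Under that assumption, a VM doing large-block I/O will hit a given IOPS limit sooner than a VM doing small-block I/O, which is worth keeping in mind when picking limits.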

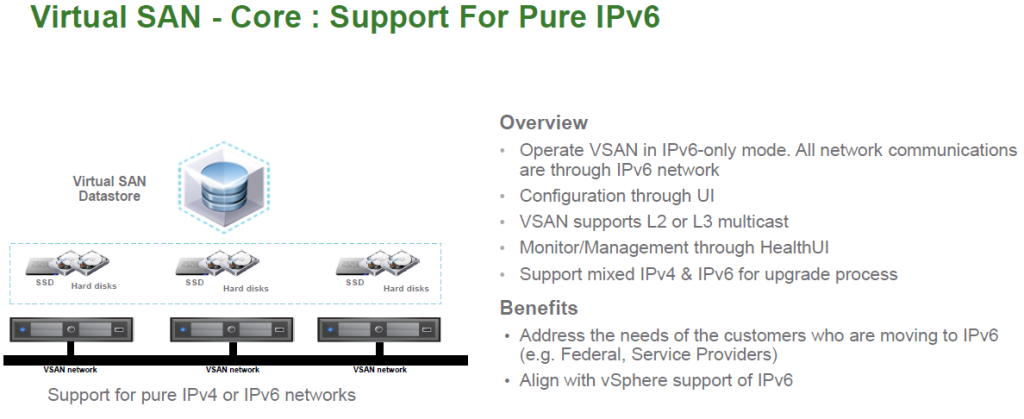

IPv6 Support

This one is pretty straightforward. vSphere has had IPv6 support for years, VMware has had requests for IPv6 support with VSAN, and now they have it. There is support for a mixed IPv4 and IPv6 environment for migration purposes.

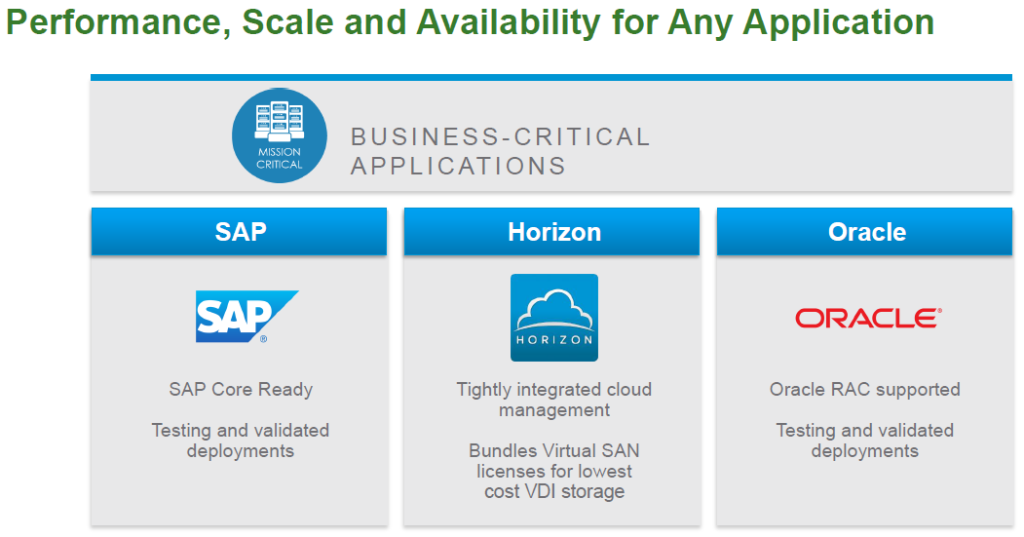

Improved Application Support

VSAN already has pretty good application support with key apps such as Oracle and Exchange, and they have extended that in 6.2 with new support for SAP and tighter integration with Horizon View. VMware is working hard to make VSAN capable of running just about any application workload.

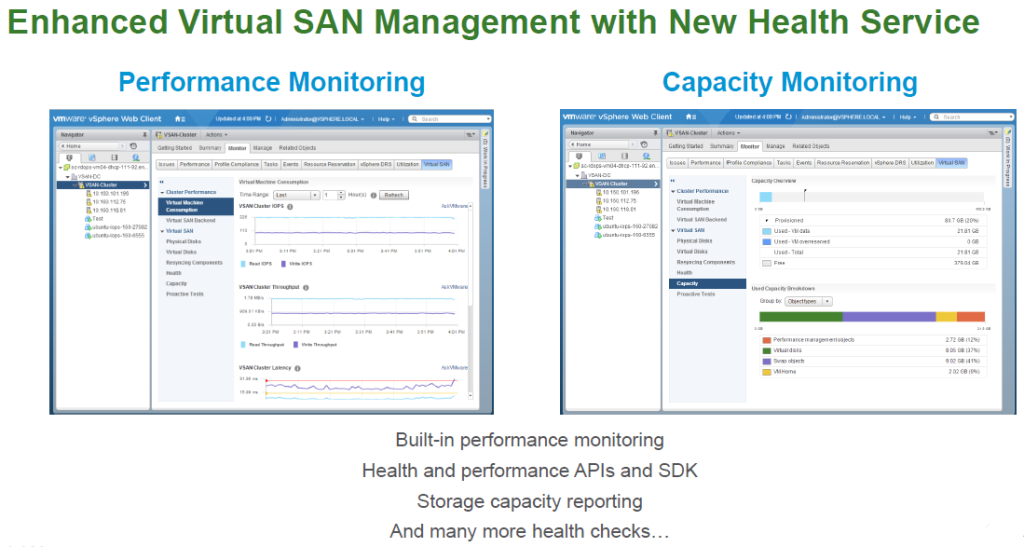

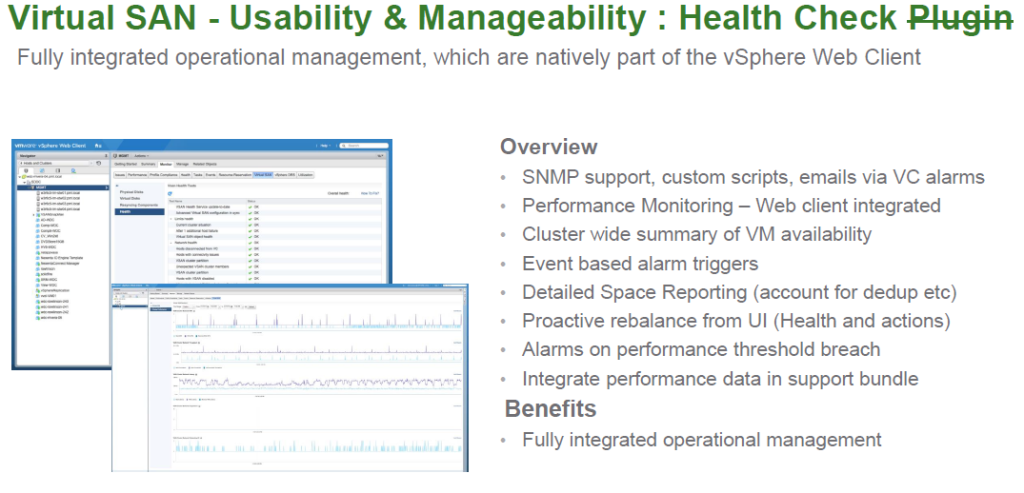

Enhanced Management and Monitoring

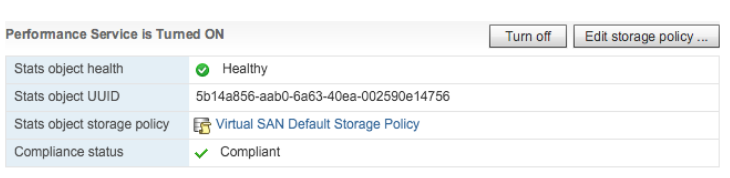

It’s even easier to manage and monitor VSAN in 6.2 from directly within vCenter. Prior to 6.2 you had to leverage external tools such as vSAN Observer or vRealize Operations Manager to get detailed health, capacity and performance metrics. This new performance management capability is built directly into vCenter but it’s separate from the traditional performance metrics that vCenter collects and stores in its database. The new VSAN performance service will have its own separate database contained within the VSAN object store. The size of this database will be around 255GB and you can choose to protect it with either traditional mirroring (RAID-1) or the new erasure coding methods (RAID-5 or RAID-6). By default this is not enabled, to conserve host space, but it can be enabled if needed in the settings for VSAN.

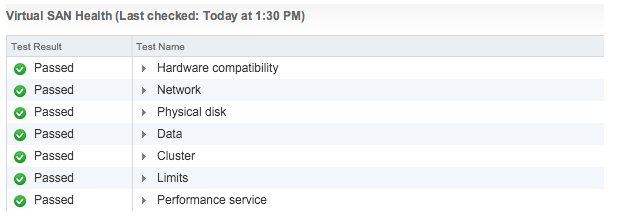

Native Health Check

You no longer need to use a special Health Check Plug-in to monitor the health of VSAN. This allows you to have end to end monitoring of VSAN to quickly recognize problems and issues and resolve them. They have also improved the ability to detect and identify faults to enable better health reporting with VSAN.

Additional Improvements

Finally there are a few minor additional improvements with VSAN in 6.2, and the first one is rather interesting. VMware is introducing a new client (host) cache in VSAN 6.2 that utilizes host memory (RAM) as a dynamic read cache to help improve performance. The size of this cache will be 0.4% of total host memory up to a maximum size of 1GB. This is similar to what 3rd party vendors such as Pernix and Infinio do by leveraging host memory as a cache to speed up storage operations. While this new client cache is currently limited to VSAN, you have to wonder if VMware will open this up in a future release to work with local VMFS datastores or SAN/NAS storage.
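
The client cache sizing rule is simple enough to work out for your own hosts. Here is a quick Python sketch of it; the function name is mine, and I’m assuming binary units (1GB = 1024^3 bytes) for the cap since the announcement doesn’t say which is meant.

```python
GIB = 1024 ** 3
MAX_CLIENT_CACHE_BYTES = 1 * GIB  # 1GB cap from the post (binary units assumed)


def client_cache_bytes(host_memory_bytes: int) -> int:
    """0.4% of host memory, capped at 1GB, per the sizing rule above."""
    proportional = host_memory_bytes * 4 // 1000  # 0.4% using integer math
    return min(proportional, MAX_CLIENT_CACHE_BYTES)


if __name__ == "__main__":
    for host_gib in (64, 128, 256, 512):
        cache_mib = client_cache_bytes(host_gib * GIB) / 1024 ** 2
        print(f"{host_gib}GB host -> {cache_mib:.0f}MB client cache")
```

With this rule any host with roughly 250GB of memory or more hits the 1GB cap, so the cache stays small relative to RAM on large hosts.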

Another new feature is the ability to have your VM memory swap files (.vswp) use the new Sparse disk format that VMware introduced in VSAN 6.0 as a more efficient disk format. As memory over-commitment is not always used by customers this enables you to reclaim a lot of that wasted space used by vswp files that are created automatically when VMs are powered on.

3 comments

The Failure Tolerance Method of RAID-5/6 (Erasure Coding) is actually applied per object, as opposed to per VM. This is consistent with other SPBM policies.

Author

Thanks Jase, yeah when I say VM I’m actually meaning objects as that is how they are stored in the underlying file system. The same is true for VVols which breaks a VM into multiple objects (VVols) when writing them to the Storage Container. I’m going to assume VSAN is using a very similar object write format as VVols does since they are very similar architectures and both integrate with SPBM.

Also, Space Efficiency Technologies include:

Deduplication & Compression (Cluster Level)

RAID-5/6 (Erasure Coding) as a new Failure Tolerance Method (in addition to Mirroring/RAID-1)

Swap Efficiency – Advanced Host Setting to allow for thin virtual swap files, as opposed to reserved space for them.

Just for clarification.