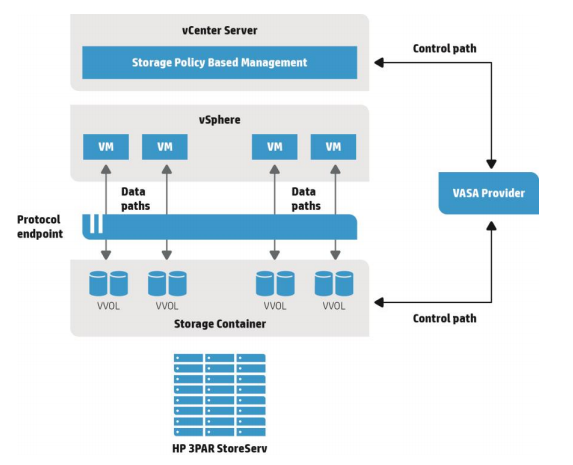

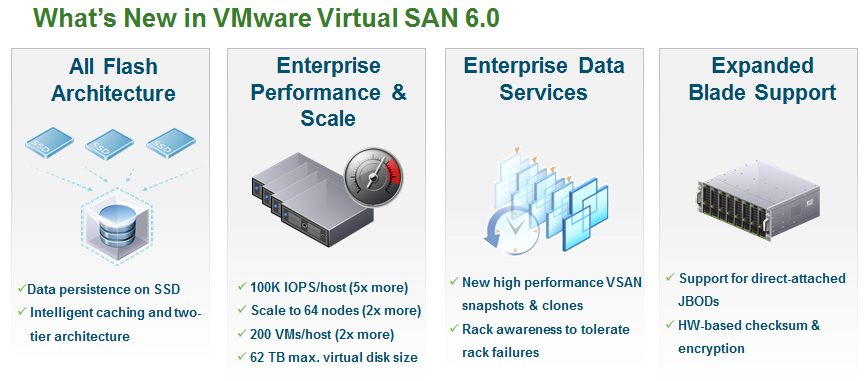

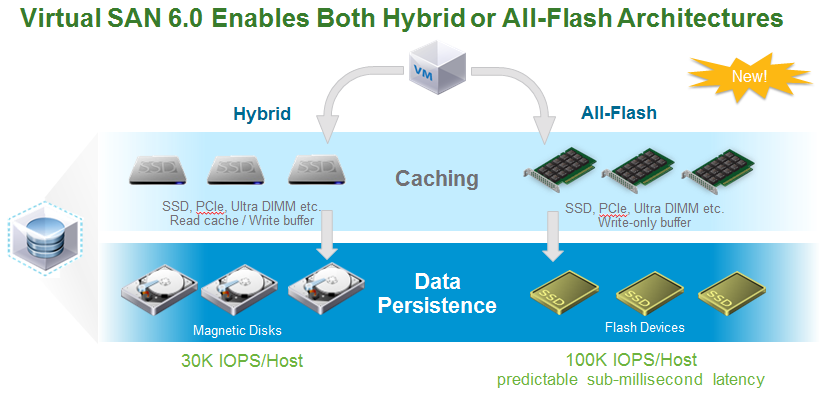

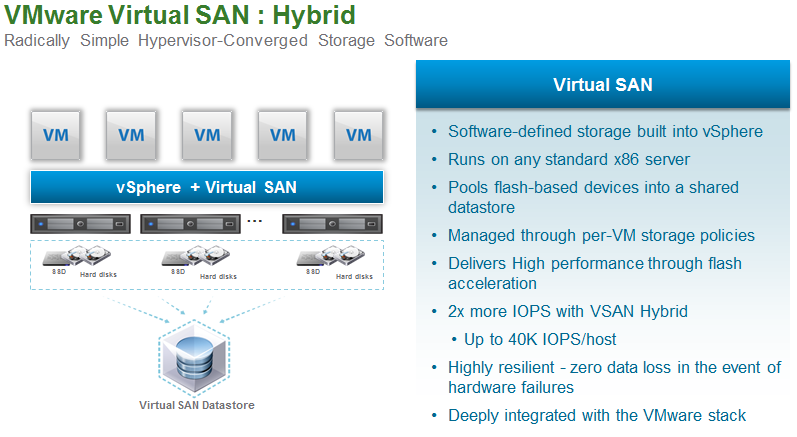

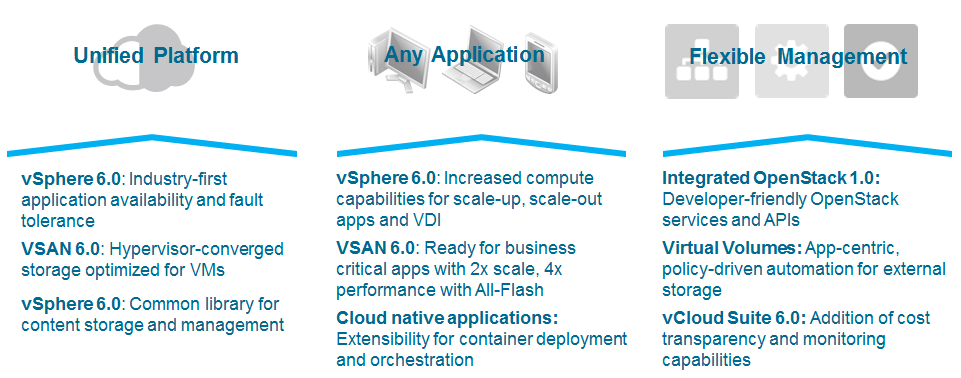

vSphere 6 is the first major release of vSphere since vSphere 5.5, and as with any major release it comes packed with lots of new and enhanced features along with increased scalability. While the vSphere 6 release is very storage-focused, with big improvements to VSAN and the launch of the VVOLs architecture, there are still plenty of other things that make this an exciting release. The following is a summary of some of the big things that are new in vSphere 6; I’ll be doing additional posts that focus specifically on VSAN and VVOLs. Note that while vSphere 6 has now been formally announced, it will not GA and be publicly available until March.

The Monster Host is born

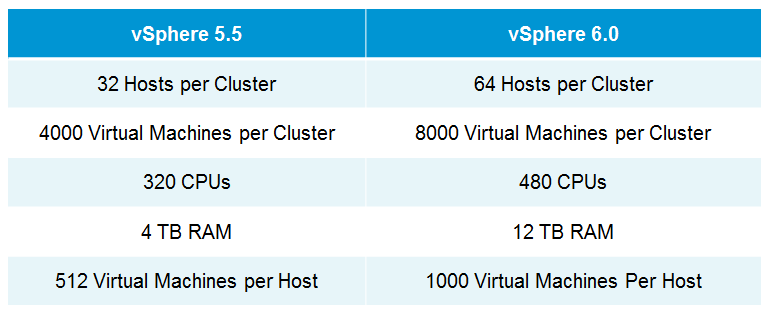

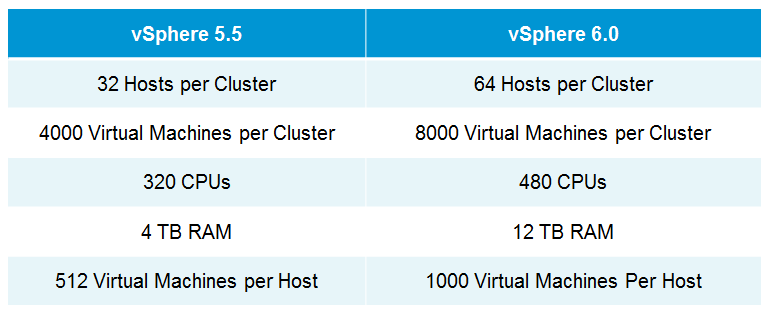

We’ve had monster VMs in the past that could be sized ridiculously large, and now we’re getting monster hosts as well. In vSphere 5.5 the maximum supported host memory was 4TB; in vSphere 6 that jumps to 12TB. In vSphere 5.5 the maximum supported number of logical (physical) CPUs per host was 320; in vSphere 6 that increases to 480. Finally, the maximum number of VMs per host increases from 512 in vSphere 5.5 to 1,000 in vSphere 6. While this is a big increase, I’m not sure many people are brave enough to put that many VMs on a single host; imagine the fun of HA having to handle that many when a host fails.

vSphere clusters get twice as big

It’s not just host maximums that are increasing in vSphere 6; cluster sizes are increasing as well. vSphere 5.5 supported only 32 hosts and 4,000 VMs per cluster, and vSphere 6 doubles that to 64 hosts and 8,000 VMs per cluster. Note that the host maximums don’t line up with the cluster maximums: 64 hosts x 1,000 VMs per host equals 64,000 VMs, so the 8,000-VM cap is a limitation of vCenter Server, not of the ESXi hosts.
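To make that mismatch concrete, here is a small Python sketch that multiplies the per-host VM limit by the cluster host limit and compares the result with the per-cluster cap. The `MAXIMUMS` table and the `effective_cluster_vm_limit` helper are hypothetical names for illustration, and the numbers are simply the ones quoted in this post; check VMware’s Configuration Maximums guide before relying on them.

```python
# Configuration maximums as quoted in this post (hypothetical table for
# illustration; verify against VMware's Configuration Maximums guide).
MAXIMUMS = {
    "5.5": {"vms_per_host": 512, "hosts_per_cluster": 32, "vms_per_cluster": 4000},
    "6.0": {"vms_per_host": 1000, "hosts_per_cluster": 64, "vms_per_cluster": 8000},
}


def effective_cluster_vm_limit(version: str) -> tuple[int, int]:
    """Return (VMs the hosts could carry in total, effective cluster limit)."""
    m = MAXIMUMS[version]
    host_side = m["vms_per_host"] * m["hosts_per_cluster"]
    # Whichever limit is smaller is the one that actually binds.
    return host_side, min(host_side, m["vms_per_cluster"])


for version in MAXIMUMS:
    host_side, effective = effective_cluster_vm_limit(version)
    print(f"vSphere {version}: hosts could carry {host_side}, cluster allows {effective}")
```

For vSphere 6 this gives 64,000 on the host side versus 8,000 effective, and for vSphere 5.5 it gives 16,384 versus 4,000, so in both releases the per-cluster cap is the limit you hit first.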

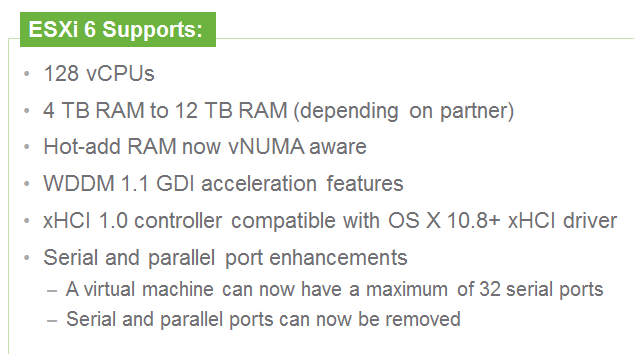

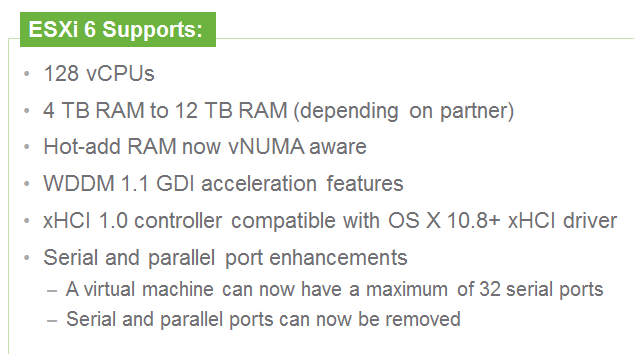

VMs get a little bigger as well

In vSphere 5.5 a VM could be configured with up to 64 vCPUs; in vSphere 6.0 that has doubled to 128 vCPUs. It’s crazy to think a VM would ever need that many, but if you have a super mega-threaded application that can use them, you now have more. I thought serial ports were basically dead these days, but apparently there are VMs that need a lot of them, as VMware also increased the number of serial ports you can assign to a VM from 4 to 32. You can also remove serial and parallel ports from a VM if you don’t want them at all.

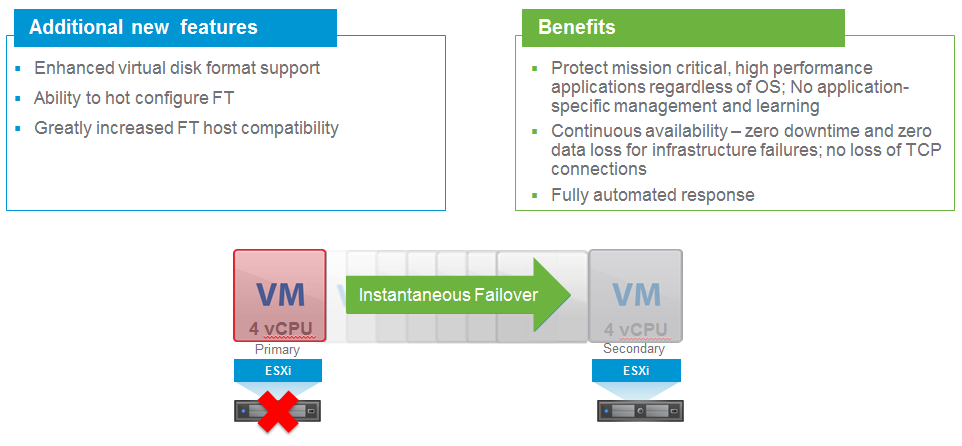

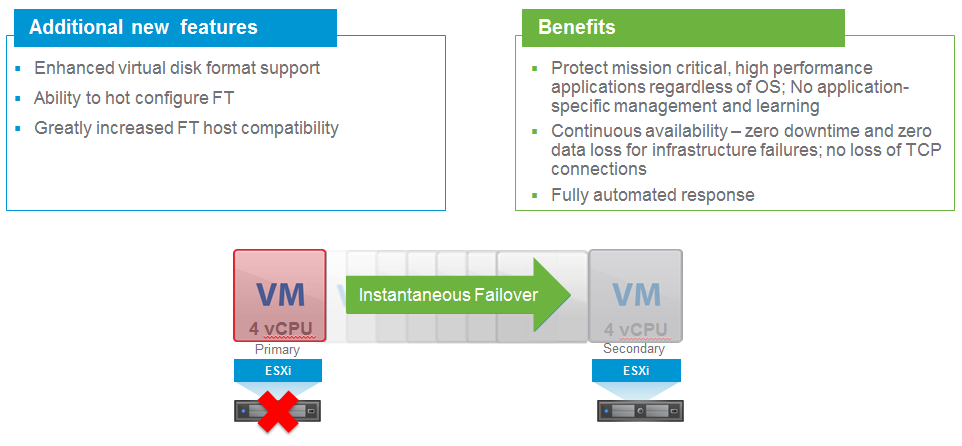

Fault Tolerance is finally ready for prime time

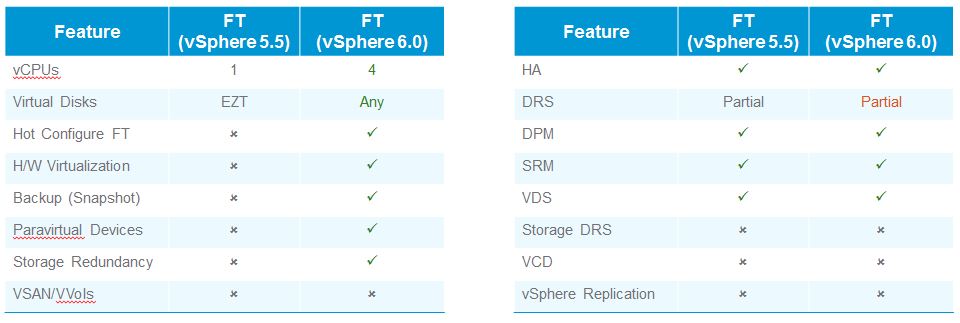

The Fault Tolerance (FT) feature, first introduced in vSphere 4, provides the best protection for VMs by preventing any downtime in case of a host failure, but it has always been limited to VMs with a single vCPU. This prevented anyone with an application that requires multiple vCPUs from using FT. Supporting more than one vCPU is not as simple as you might think, as the 2 VMs running on separate hosts need to be kept in complete sync for FT to work. VMware has spent a lot of time engineering this and has teased multi-vCPU FT support at VMworld sessions over the last few years. Now in vSphere 6 they finally deliver it, with support for up to 4 vCPUs.

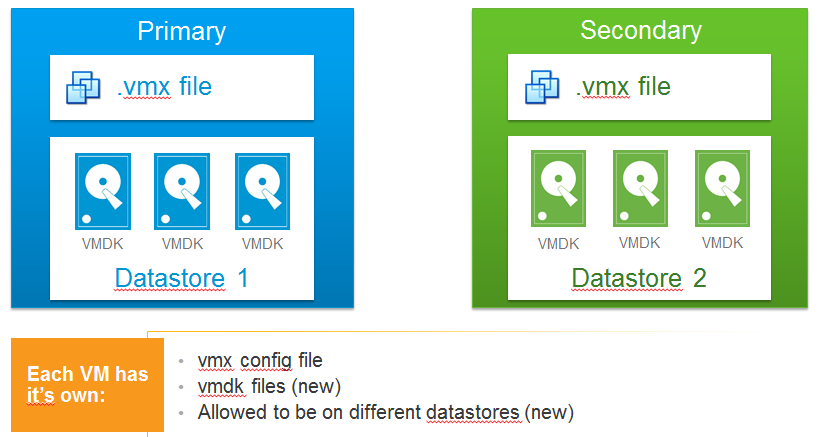

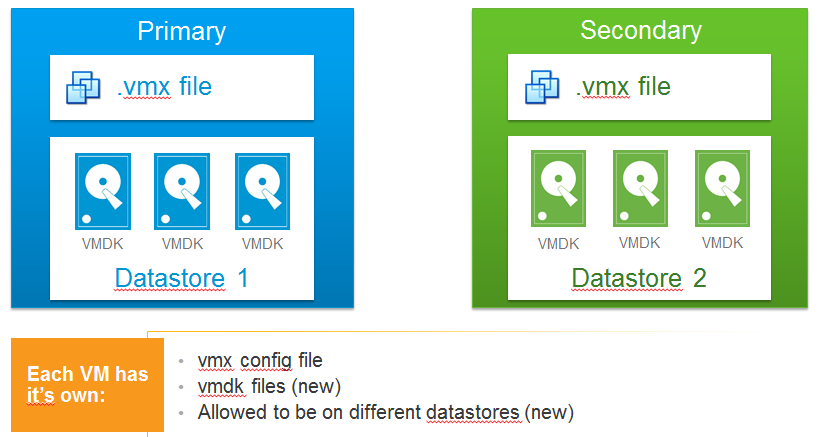

VMware also changed the design of how Fault Tolerance is implemented. Previously, FT worked by having 2 VMs on separate hosts, one primary and one secondary, but both VMs relied on a single virtual disk residing on shared storage. Presumably it was done this way because keeping two virtual disks (VMDKs) in perfect sync could have slowed down the VM and impacted performance. In vSphere 6 this has changed: each VM has its own virtual disk, and the two disks can be located on different datastores. This change probably helped VMware overcome the lack of snapshot support in previous versions.

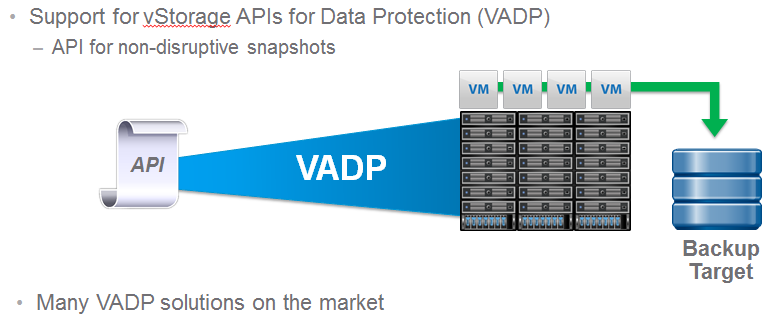

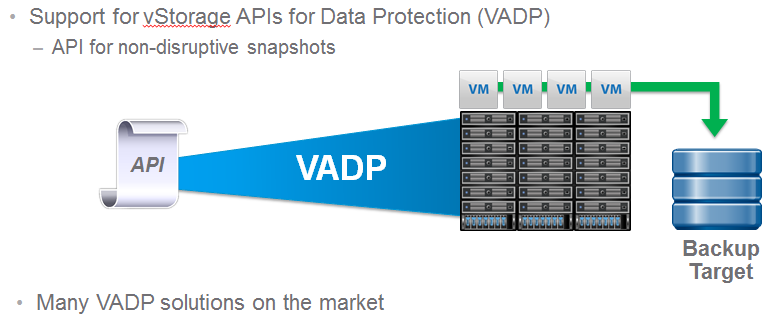

VMware has also addressed some of the limitations FT had. Eager Zeroed Thick (EZT) virtual disks are no longer required; any virtual disk type can now be used. Another big limitation has also been eliminated: previously you could not take snapshots of an FT-enabled VM. While this may not sound like too big a deal, remember that most VM backup solutions take a snapshot before backing up a VM, so that writes are redirected away from the base virtual disk and it can be backed up. Not having snapshot support meant you had to do it the old-fashioned way, using a backup agent running inside the guest OS, something that many modern VM-only backup solutions do not support. So now you can finally back up FT-enabled VMs more easily, and you can also use the vSphere APIs for Data Protection with FT.

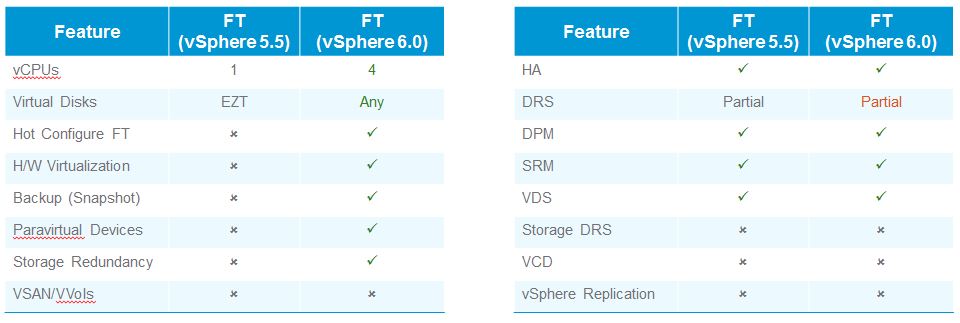

One other thing to note about FT: it only supports VMs running on VMFS; VSAN and VVOLs are not supported. Below is a full comparison of FT feature support in vSphere 5.5 and vSphere 6.

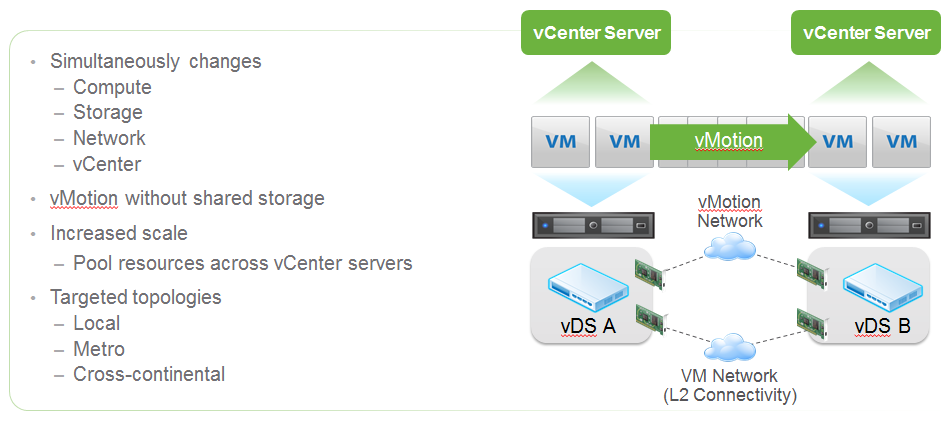

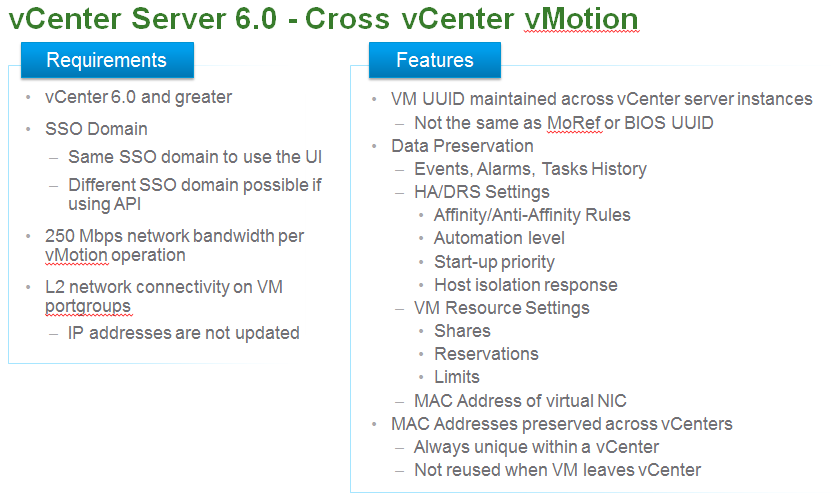
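As a rough pre-flight check, the two vSphere 6 FT limits mentioned in this post (at most 4 vCPUs, VMFS datastores only) can be encoded in a few lines of Python. `VmSpec` and `ft_blockers` are hypothetical helpers, not part of any vSphere API, and real FT has other requirements that this sketch does not model.

```python
from dataclasses import dataclass

# vSphere 6 FT limits as described in this post (hypothetical constants).
FT_MAX_VCPUS = 4
FT_SUPPORTED_STORAGE = {"VMFS"}  # VSAN and VVOLs are not supported


@dataclass
class VmSpec:
    """Simplified, hypothetical VM description (not a vSphere API object)."""
    name: str
    vcpus: int
    datastore_type: str  # e.g. "VMFS", "VSAN", "VVOLs"


def ft_blockers(vm: VmSpec) -> list[str]:
    """Return reasons the VM cannot be FT-protected; empty means none found."""
    reasons = []
    if vm.vcpus > FT_MAX_VCPUS:
        reasons.append(f"{vm.vcpus} vCPUs exceeds the FT limit of {FT_MAX_VCPUS}")
    if vm.datastore_type not in FT_SUPPORTED_STORAGE:
        reasons.append(f"{vm.datastore_type} datastores are not supported by FT")
    return reasons


print(ft_blockers(VmSpec("app01", vcpus=4, datastore_type="VMFS")))  # []
print(ft_blockers(VmSpec("sql01", vcpus=8, datastore_type="VSAN")))
```

The second call reports both problems at once rather than stopping at the first, which is usually more useful when triaging which VMs could be moved to FT.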

vMotion anything, anywhere, anytime

A lot of enhancements have been made to vMotion to greatly increase the range and capabilities of moving a VM around your virtual infrastructure. First off, you can now vMotion a VM between vSwitches, including between different types of virtual switches. So a VM can be moved from a Standard vSwitch to a Distributed vSwitch without changing its IP address and without network disruption.

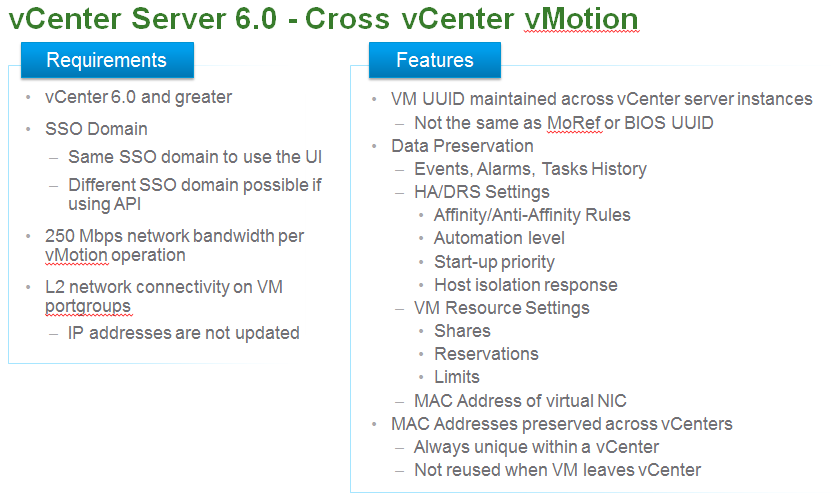

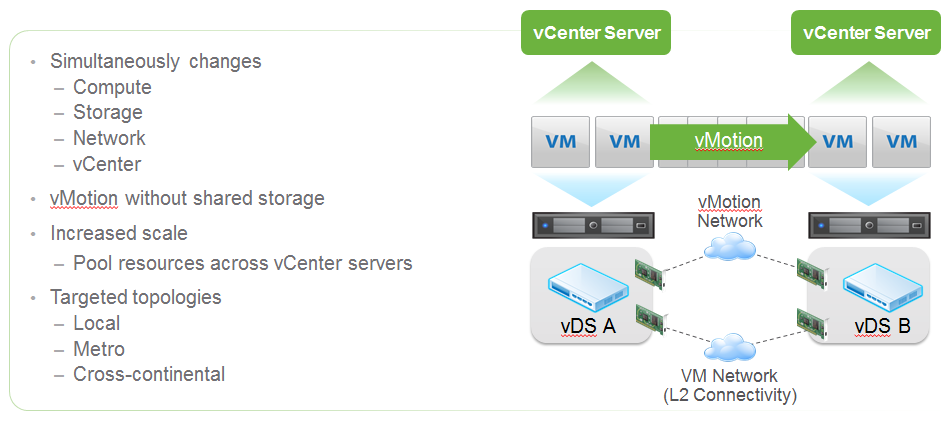

vMotion has always been restricted to moving a VM between hosts managed by the same vCenter Server. With vSphere 6 those walls come tumbling down: you can vMotion a VM from a host on one vCenter Server to a host on a different vCenter Server. This does not require common shared storage between the hosts and vCenter Servers, and it is intended to eliminate the traditional distance boundaries of vMotion, allowing you to move VMs between local data centers, across regional data centers or even across continental data centers.

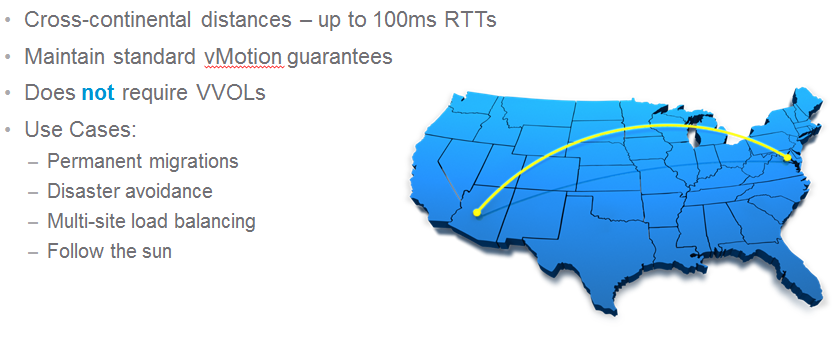

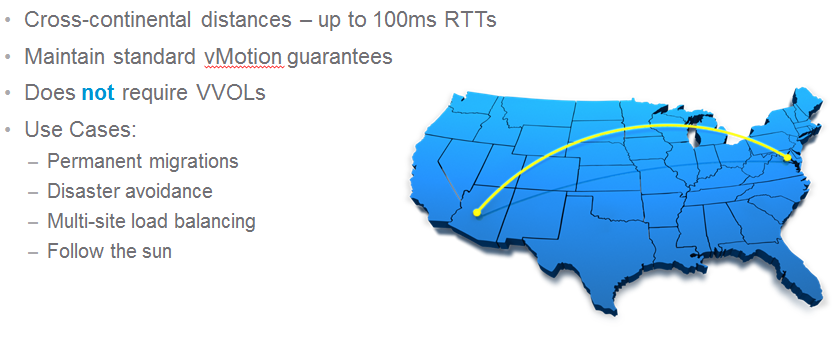

Another vMotion enhancement has to do with latency requirements, which have long dictated how far you can vMotion a VM. In vSphere 5.5 the maximum vMotion latency was 10ms, which is typical of metropolitan distances (<100 miles). In vSphere 6 that goes way up to 100ms, so you can move VMs over much greater distances, more in line with cross-continental distances. Use cases for this include disaster avoidance (e.g. an inbound hurricane), permanent migrations and multi-site load balancing. This really opens the door to new BC/DR possibilities.
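If you want to sanity-check a link before planning a long-distance migration, the limits above reduce to a simple comparison. This Python sketch is illustrative only: `vmotion_latency_ok` is a hypothetical helper, the limits are the figures quoted in this post, and it assumes the limit is compared against a measured round-trip time between the hosts.

```python
# Maximum supported vMotion latency in milliseconds, as quoted in this post.
# Assumption: the limit is compared against a measured round-trip time.
MAX_VMOTION_LATENCY_MS = {"5.5": 10.0, "6.0": 100.0}


def vmotion_latency_ok(measured_rtt_ms: float, version: str) -> bool:
    """Return True if the measured latency is within the supported maximum."""
    if measured_rtt_ms < 0:
        raise ValueError("latency cannot be negative")
    return measured_rtt_ms <= MAX_VMOTION_LATENCY_MS[version]


# Example: a hypothetical inter-region link measuring 65 ms round trip.
for version in ("5.5", "6.0"):
    print(f"vSphere {version}: {vmotion_latency_ok(65.0, version)}")
```

The same 65 ms link fails the vSphere 5.5 check and passes the vSphere 6 one, which is exactly the jump from metro-scale to continental-scale vMotion described above.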

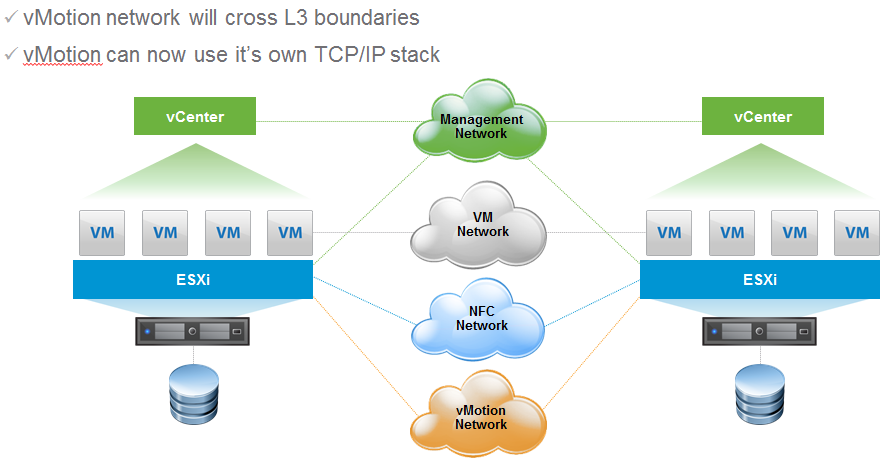

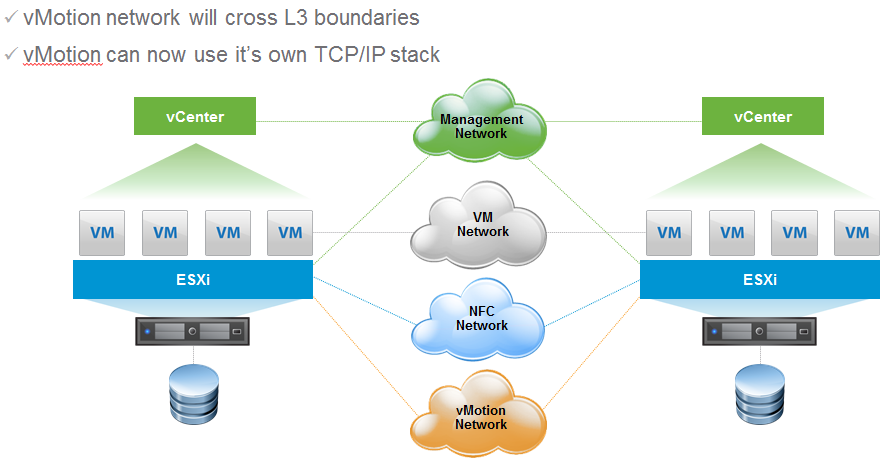

If that wasn’t enough, vMotion also gains network flexibility: it now has its very own TCP/IP stack and can cross layer 3 network boundaries.

Yes your beloved vSphere Client is still here

Your classic Windows-based C# vSphere Client is still around; VMware hasn’t managed to kill it off yet, as much as they want to. Despite still being around, it hasn’t supported any new vSphere features or functionality since vSphere 5.1, so you might only want to use it for nostalgia’s sake. I foresee this as the last major release of vSphere that includes it, so you’d better get used to the vSphere Web Client. In vSphere 6 the vSphere Client can be used for things like direct access to hosts, VUM remediation, or if you’re still pissed at the Web Client. VMware did add read-only support for virtual hardware versions 10 and 11 in vSphere 6.

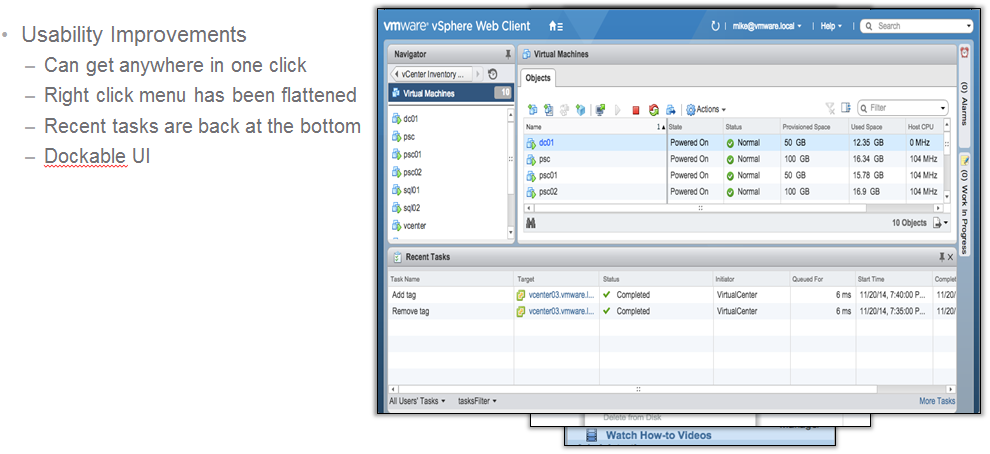

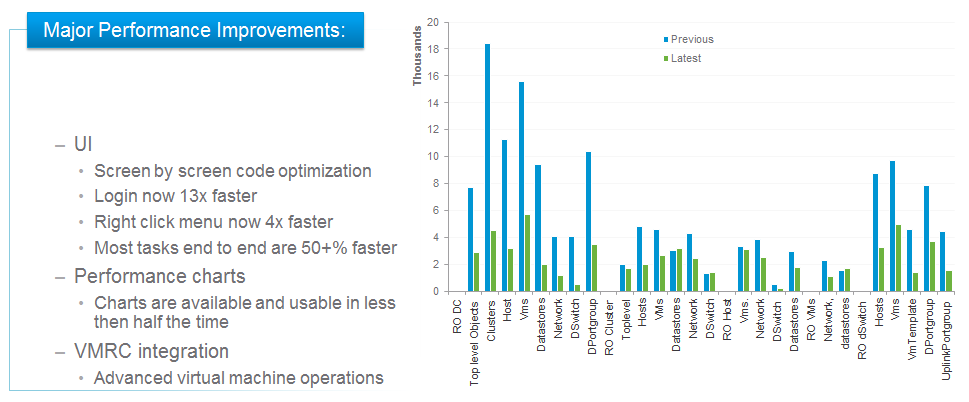

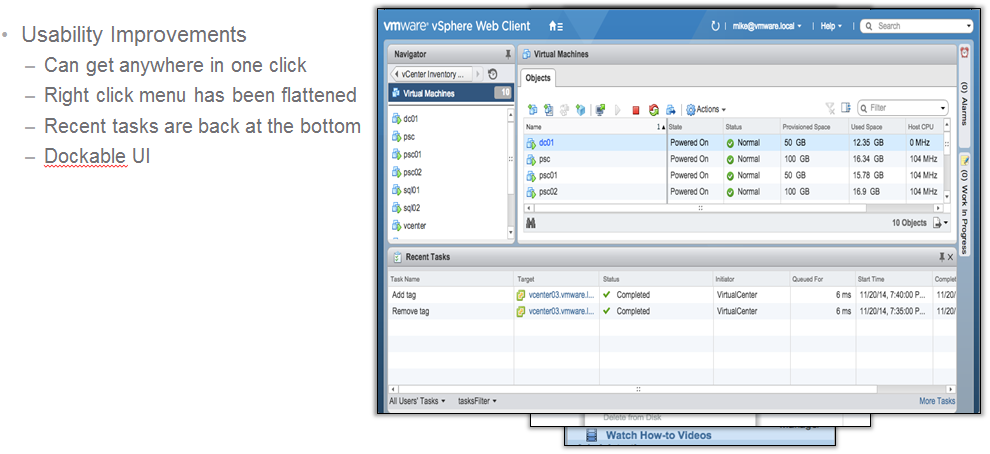

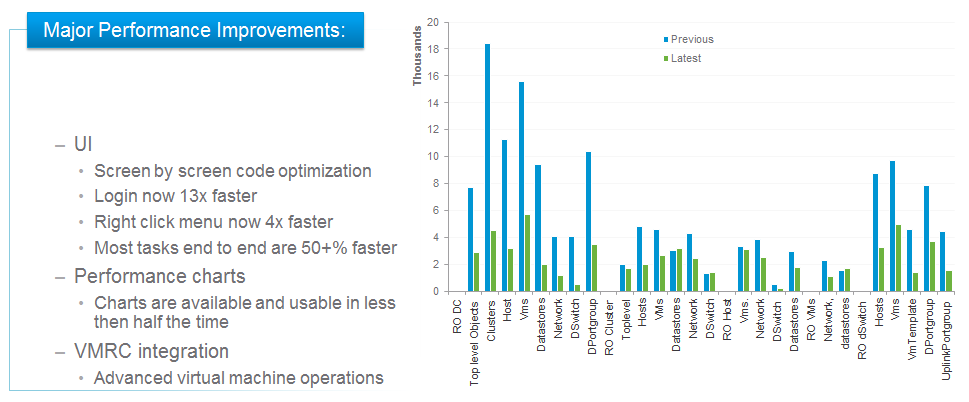

The vSphere Web Client gets a lot better (and faster)

This might help change your mind about using the vSphere Web Client. There have been tons of complaints about its speed and performance hurting usability, along with overall concerns about things just not being as good as they were in the classic vSphere Client. Apparently VMware has heard all your griping and is finally listening, as they have spent significant effort in this release improving the performance and usability of the vSphere Web Client. Performance improvements include faster login, faster right-click menu loading and faster performance charts. Usability improvements include Recent Tasks moved to the bottom (a big gripe before), flattened right-click menus and deep lateral linking. The improvements are quite impressive, as shown in the following slide, and should finally help convince admins to ditch the classic vSphere Client. One thing to note is that the Web Client does not use HTML5 yet; VMware has focused on making it better and will move to HTML5 in a later release of vSphere.

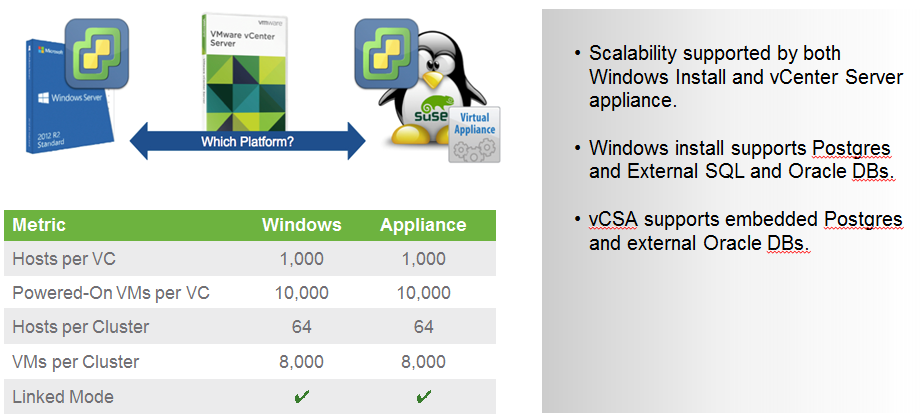

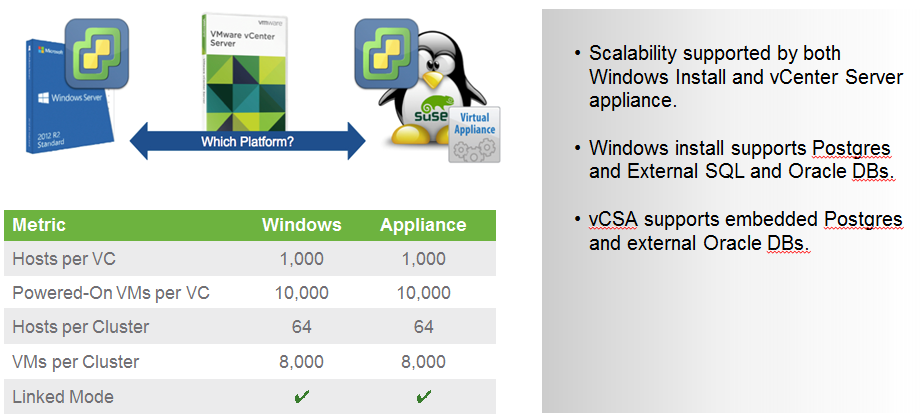

The vCenter Server Appliance gets super-sized

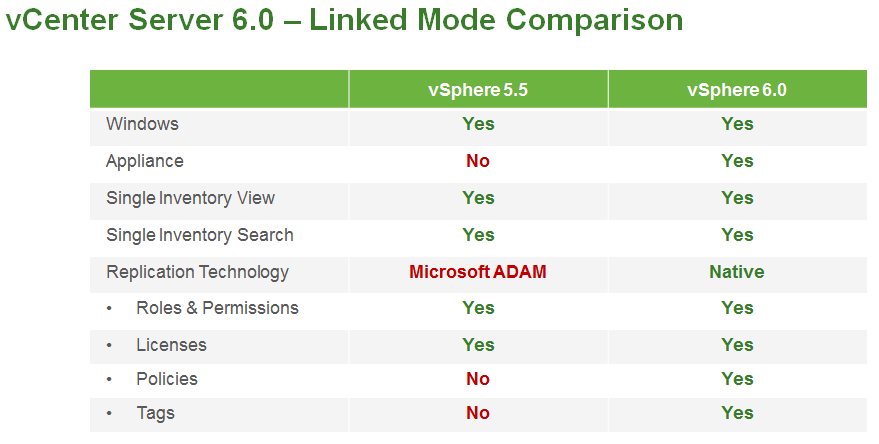

Deploying and maintaining vCenter Server has always been a pain; you have to deal with Windows, the vCenter Server application, certificates, permissions, databases, etc. The vCenter Server Appliance (VCSA) eliminates most of that and makes deploying and upgrading vCenter Server simple and easy. Its one big limitation has been scalability, but in vSphere 6 the VCSA scales to the same limits as vCenter Server on Windows. As a result it now supports 1,000 hosts, 10,000 VMs and linked mode.

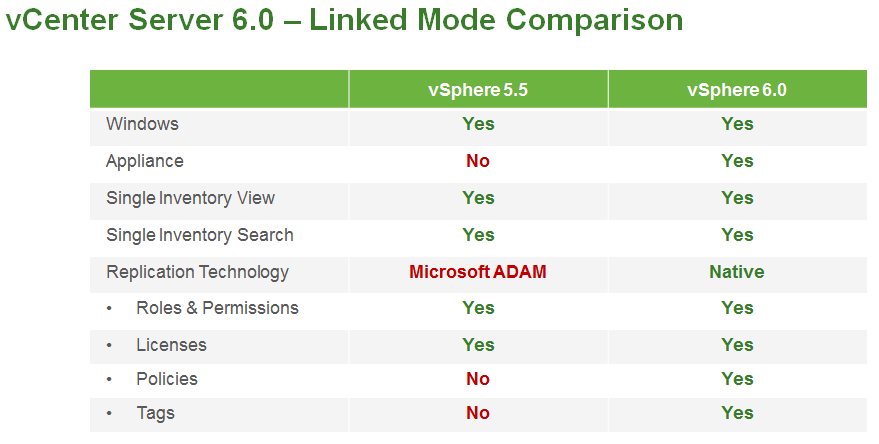

Speaking of vCenter Server Linked Mode, it gets some improvements and better support as well.

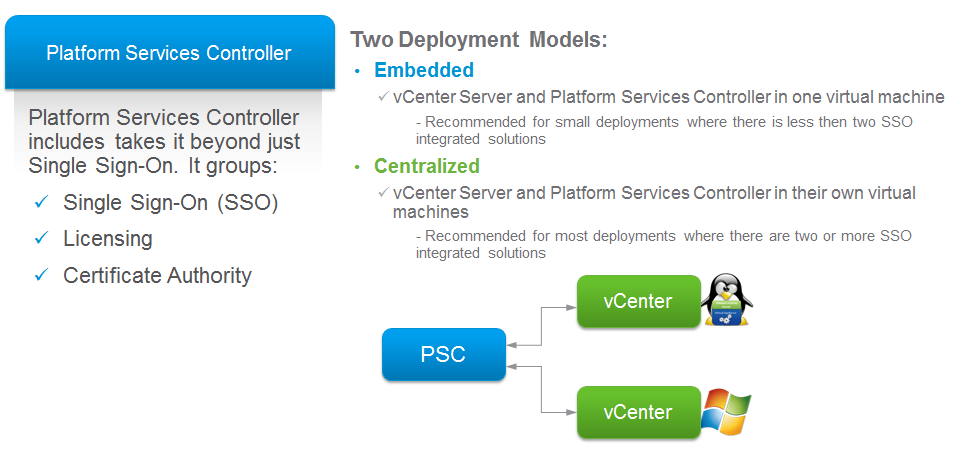

vCenter Server authentication finally gets less complicated

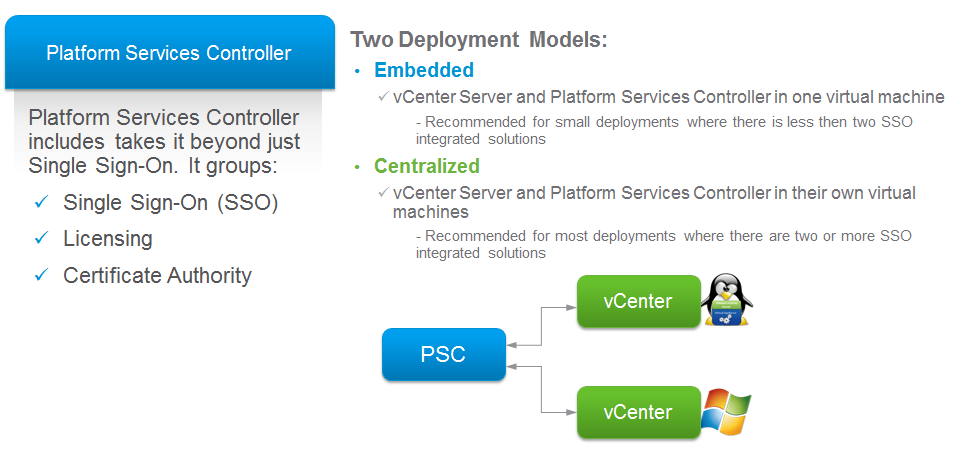

A new Platform Services Controller (PSC) groups together Single Sign-On, Licensing and the Certificate Authority (Root CA). It comes in 2 deployment models: an embedded model, installed alongside vCenter Server and intended for smaller sites with fewer than 2 SSO-integrated solutions, and an external model that can be deployed independently of vCenter Server.
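The sizing guidance above boils down to a single threshold, and writing it out makes the boundary case explicit: a site with exactly 2 SSO-integrated solutions already falls on the external side. `suggest_psc_model` is a hypothetical helper that encodes only this rule of thumb; real deployment decisions involve other factors not covered here.

```python
def suggest_psc_model(sso_integrated_solutions: int) -> str:
    """Hypothetical helper encoding the rule of thumb from this post.

    Embedded PSC: smaller sites with fewer than 2 SSO-integrated solutions.
    External PSC: everything else (deployed independently of vCenter Server).
    """
    if sso_integrated_solutions < 0:
        raise ValueError("count must be non-negative")
    return "embedded" if sso_integrated_solutions < 2 else "external"


for count in (1, 2, 5):
    print(count, suggest_psc_model(count))
# 1 embedded
# 2 external
# 5 external
```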

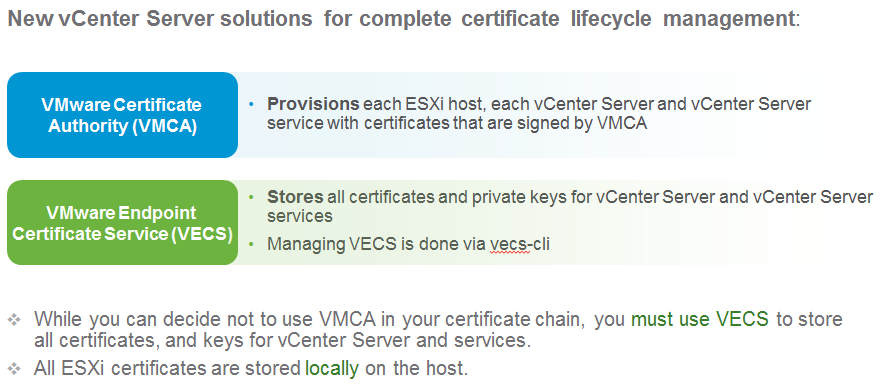

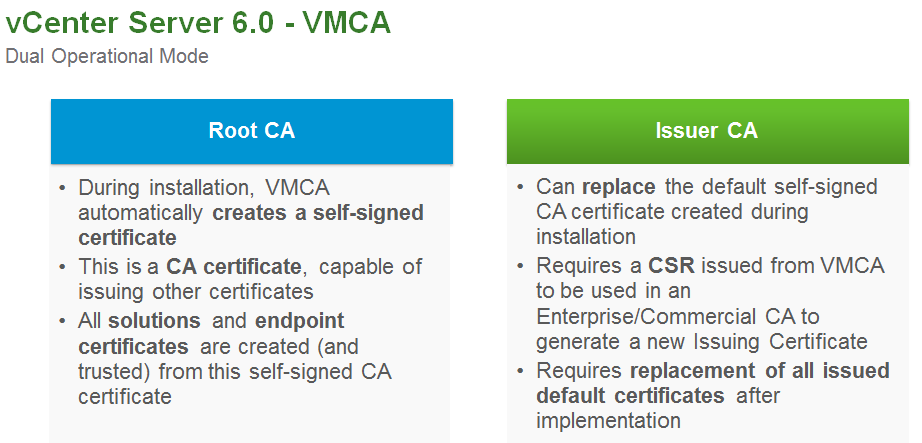

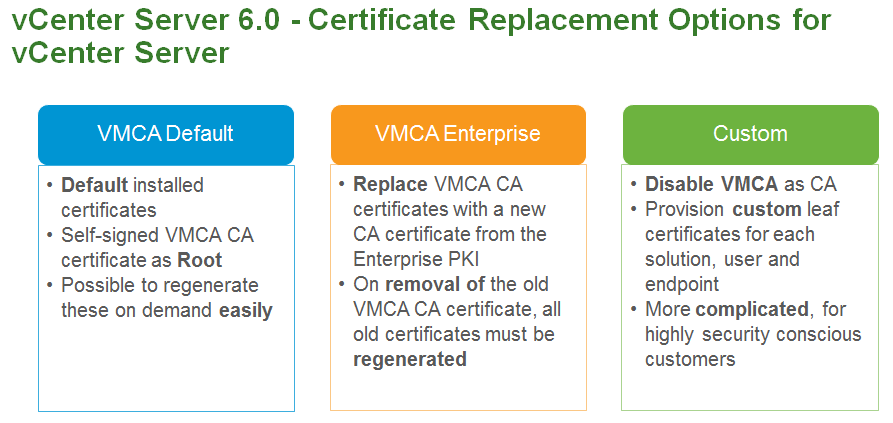

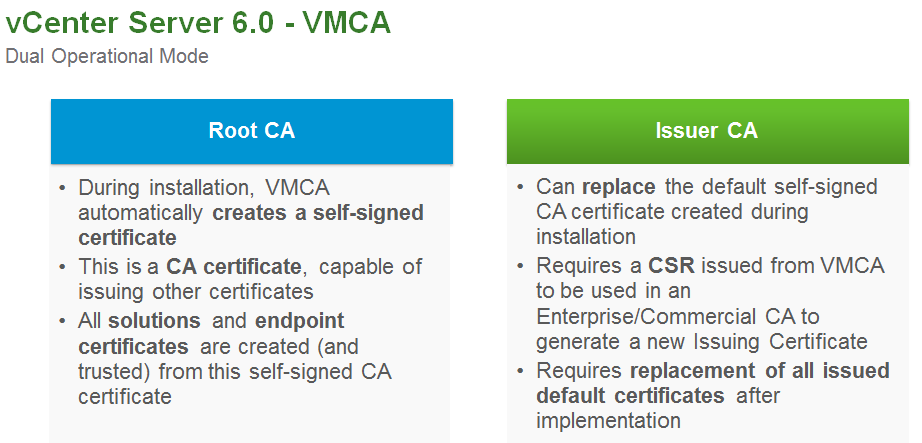

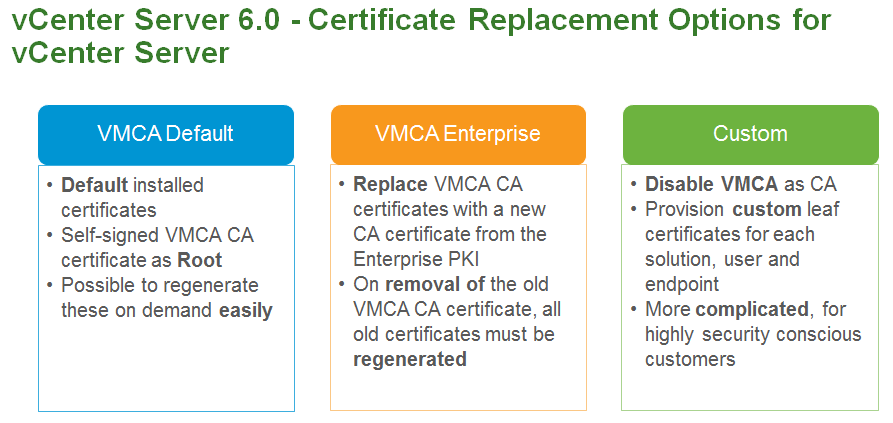

vCenter Server Certificate Lifecycle Management is your new keymaster

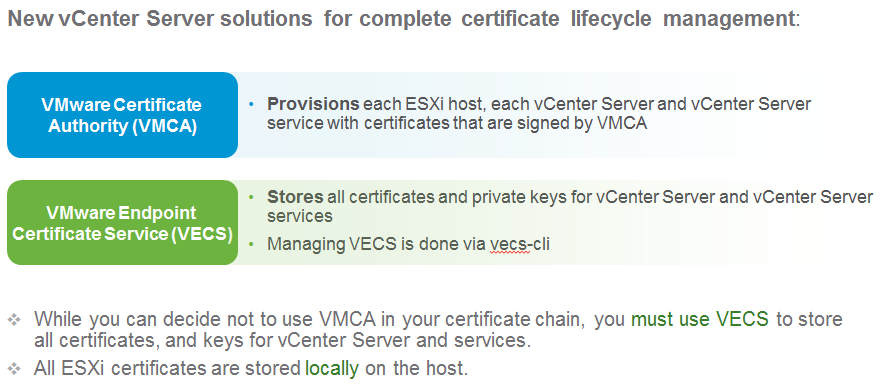

Dealing with security certificates has always been a royal pain in the butt in vSphere. VMware is trying to ease that pain with 2 new solutions for complete certificate lifecycle management. The VMware Certificate Authority (VMCA) signs and provisions certificates to vCenter Server and ESXi hosts. The VMware Endpoint Certificate Service (VECS) stores all certificates and private keys for vCenter Server; ESXi host certificates are stored locally on each host.

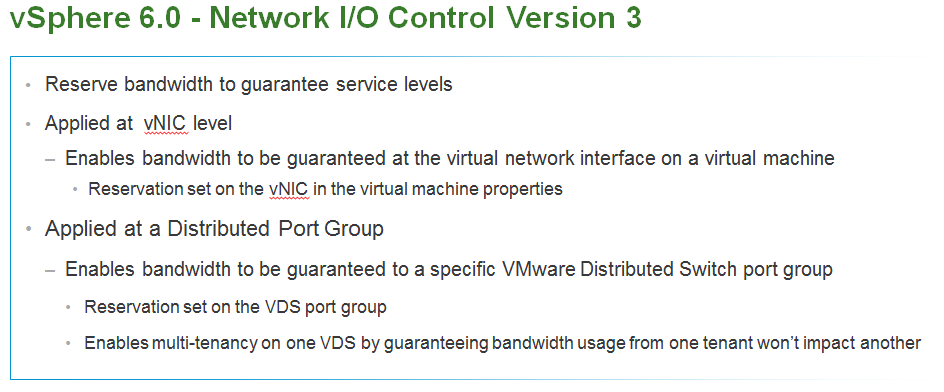

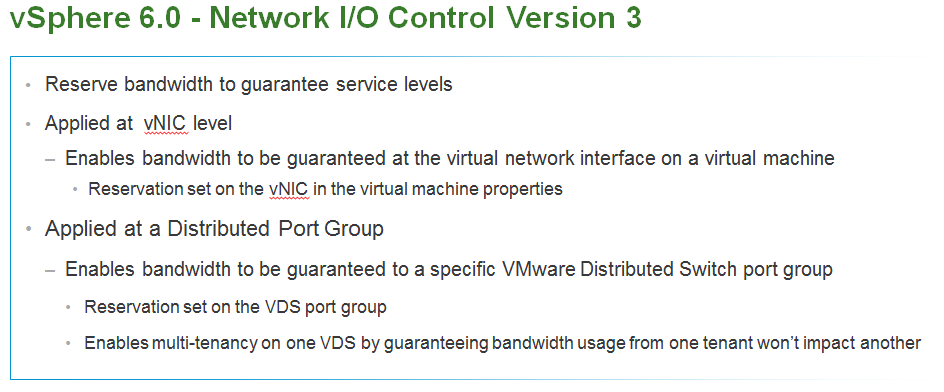

Networking does get a little bit of love also

This release is mostly dominated by storage and vCenter improvements, but Network I/O Control gets some new stuff, including the ability to reserve network bandwidth to guarantee service levels, as outlined below.
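A bandwidth reservation is only a guarantee if the reservations on an uplink actually fit within its capacity. This Python sketch shows that admission check; `reservations_fit` and its `max_reservable_fraction` parameter are hypothetical, and the real cap on how much of an adapter can be reserved is defined in VMware’s Network I/O Control documentation, not here.

```python
def reservations_fit(link_gbps: float,
                     reservations_gbps: dict[str, float],
                     max_reservable_fraction: float) -> bool:
    """Return True if the summed reservations fit in the reservable share of one uplink.

    max_reservable_fraction is a hypothetical parameter; look up the actual
    cap in VMware's Network I/O Control documentation.
    """
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not 0 < max_reservable_fraction <= 1:
        raise ValueError("max_reservable_fraction must be in (0, 1]")
    total = sum(reservations_gbps.values())
    return total <= link_gbps * max_reservable_fraction


# Hypothetical 10 GbE uplink with an example cap of 75%.
plan = {"vMotion": 2.0, "management": 0.5, "virtual machine": 4.0}
print(reservations_fit(10.0, plan, 0.75))  # 6.5 <= 7.5 -> True
plan["virtual machine"] = 6.0
print(reservations_fit(10.0, plan, 0.75))  # 8.5 > 7.5 -> False
```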