Recently at VMworld in Vegas, Pete Flecha from VMware gave a presentation on VVols at the HPE booth that highlights how VVols are simpler, smarter and faster than traditional storage with vSphere. The link to the presentation is below, but I thought I would also summarize why VVols are simpler, smarter and faster.

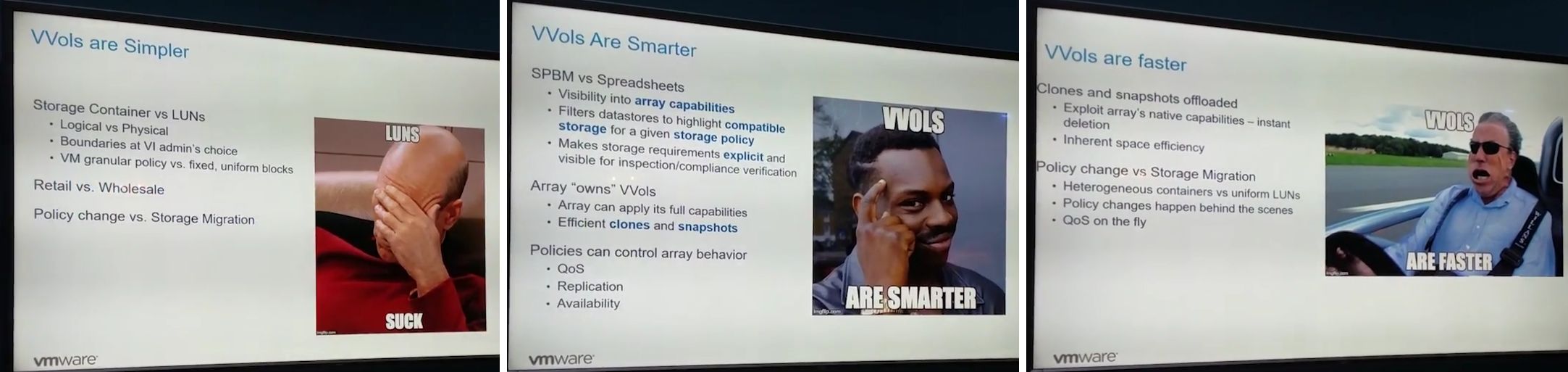

VVols are Simpler

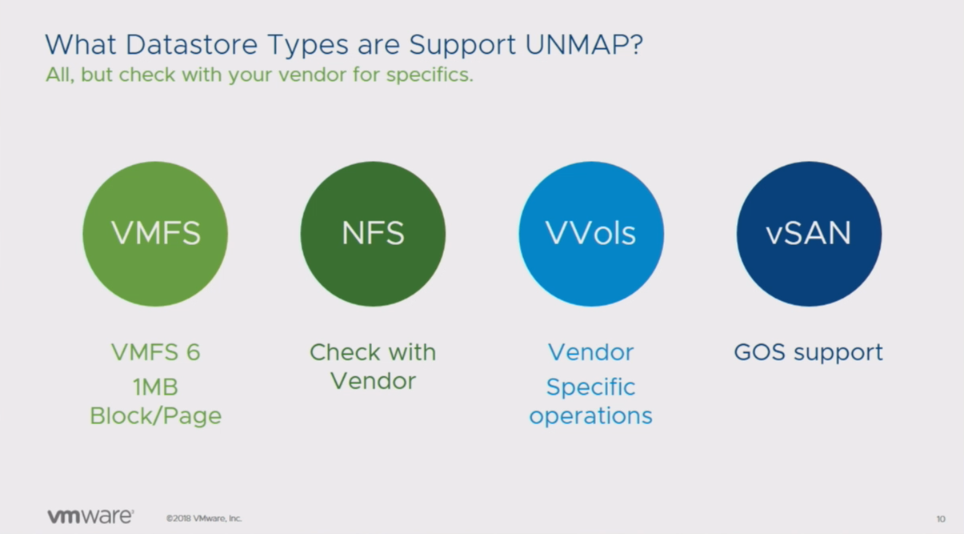

VVols are simpler because of Storage Policy-Based Management (SPBM), which automates the provisioning and reclamation of storage for VMs. VVols completely eliminate LUN management on the storage array because vSphere can write VMs natively to the array: VVols are provisioned as needed when VMs are created and automatically reclaimed when VMs are deleted or moved.

VVols are Smarter

VVols are smarter, again because of storage policy-based management, and also because of the dynamic, real-time nature of storage operations between vSphere and the storage array. With VVols, a storage array stays as efficient and thin as possible: space is always reclaimed immediately for VMs and snapshots, and even data deleted within the guest OS can be reclaimed on the storage array. In addition, the array manages all snapshots and clones, and array capabilities can be assigned at the VM level with SPBM.

VVols are Faster

VVols are faster, but not in the way you might think. Data is still written from vSphere to a storage array using the same storage queues and paths, so there is no performance difference between VVols and VMFS for normal read/write operations. Where VVols are much faster than VMFS is with snapshots: because all vSphere snapshots are array snapshots, they are much more efficient and take the burden off the host. In addition, because there is no need to merge data that changed while a snapshot was active, deleting a snapshot is always an instant process, which can have a very positive impact on backups.
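To make the snapshot-deletion difference concrete, here is a toy Python model, not VMware code. It assumes a VMFS-style snapshot redirects new writes into a delta (redo log) that must be merged back into the base disk on delete, while a VVol-style array snapshot leaves the VM writing to its base disk so deletion simply discards the snapshot. The class and function names are hypothetical, and the "array" copies the whole disk only for simplicity; a real array would track this with copy-on-write or redirect-on-write metadata.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RedoLogDisk:
    """Hypothetical VMFS-style disk: writes after a snapshot land in a delta."""
    base: dict[int, bytes] = field(default_factory=dict)
    delta: Optional[dict[int, bytes]] = None

    def take_snapshot(self) -> None:
        self.delta = {}  # new writes now go to the redo log

    def write(self, block: int, data: bytes) -> None:
        target = self.base if self.delta is None else self.delta
        target[block] = data

    def delete_snapshot(self) -> int:
        """Merge every changed block back into the base; return blocks copied."""
        if self.delta is None:
            return 0
        merged = 0
        for block, data in self.delta.items():
            self.base[block] = data
            merged += 1
        self.delta = None
        return merged


@dataclass
class ArraySnapshotDisk:
    """Hypothetical VVol-style disk: the array holds the point-in-time copy."""
    base: dict[int, bytes] = field(default_factory=dict)
    snapshot: Optional[dict[int, bytes]] = None

    def take_snapshot(self) -> None:
        self.snapshot = dict(self.base)  # simplification: full copy, not CoW metadata

    def write(self, block: int, data: bytes) -> None:
        self.base[block] = data  # the VM keeps writing to its base disk

    def delete_snapshot(self) -> int:
        """Discard the snapshot; nothing needs to be merged."""
        self.snapshot = None
        return 0


def simulate(disk, changed_blocks: int) -> int:
    """Write 100 blocks, snapshot, change some blocks, then delete the snapshot."""
    for b in range(100):
        disk.write(b, b"orig")
    disk.take_snapshot()
    for b in range(changed_blocks):
        disk.write(b, b"new")
    return disk.delete_snapshot()


print(simulate(RedoLogDisk(), 40))        # 40 blocks merged on delete
print(simulate(ArraySnapshotDisk(), 40))  # 0 blocks merged on delete
```

In this model the merge work for the redo-log disk grows with the amount of data changed while the snapshot existed, whereas the array-style delete costs the same no matter how much changed. That mirrors the point above about snapshot deletion and backups.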

Go watch the whole video to learn more about why VVols are so great; it’s only about 15 minutes long.