CPU and memory are two resources that are not fully understood in vSphere, and they are often wasted as well. In this post we’ll take a look at both to help you better understand them, along with some tips for managing them properly.

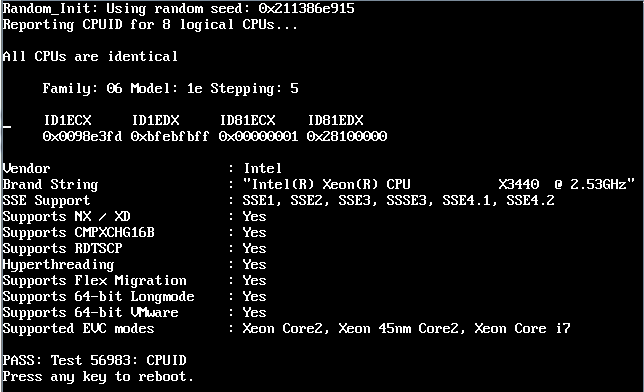

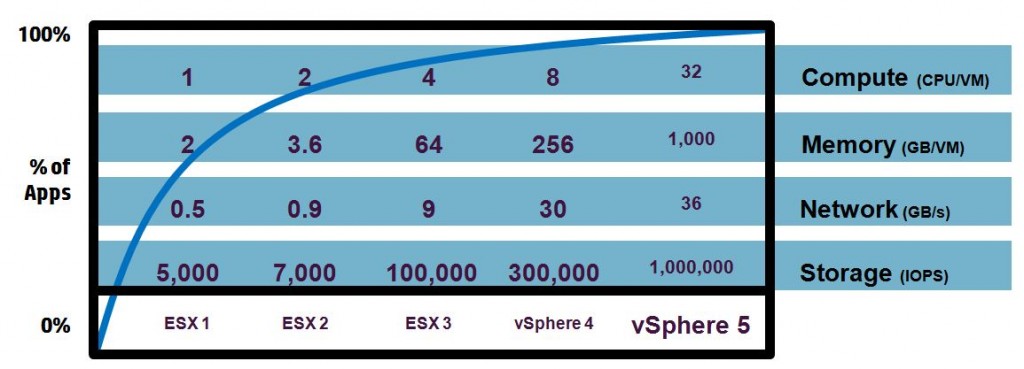

Let’s first take a look at the CPU resource. Virtual machines can be assigned up to 64 virtual CPUs in vSphere 5.1. The number you can assign to a VM, of course, depends on the total number a host has available. That total is determined by the number of physical CPUs (sockets), the number of cores each physical CPU has, and whether the CPU supports hyper-threading. For example, my ML110 G6 server has a single quad-core physical CPU with hyper-threading, so the total number of CPUs seen by vSphere is 8 (1 x 4 x 2). Unlike memory, where you can assign more virtual RAM to a VM than the host physically has, you can’t over-provision CPU in this way: a single VM can’t be given more vCPUs than the host has logical CPUs.
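The arithmetic above is simple enough to put in a few lines of Python. This is only a sketch of the sockets x cores x threads calculation from the example; the function and parameter names are made up for illustration.

```python
def logical_cpus(sockets: int, cores_per_socket: int, hyperthreading: bool) -> int:
    """Logical CPUs a host exposes: sockets x cores x threads per core."""
    # Hyper-threading presents two logical CPUs per physical core.
    threads_per_core = 2 if hyperthreading else 1
    return sockets * cores_per_socket * threads_per_core


# The single-socket, quad-core, hyper-threaded server from the example above.
print(logical_cpus(sockets=1, cores_per_socket=4, hyperthreading=True))  # 8
```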

Most VMs will be fine with one vCPU. In most cases, start with one and add more if needed. The applications and workloads running inside the VM will dictate whether you need additional vCPUs. The exception is an application (e.g. Exchange, a transactional database, etc.) that you know will have a heavy workload and need more than one vCPU. One word of advice, though, on changing from a single CPU to multiple CPUs with Microsoft Windows: previous versions of Windows had separate kernel HALs that were used depending on whether the server had a single CPU or multiple CPUs (vSMP). These kernels were optimized for each configuration to improve performance. So, if you changed the hardware after Windows was already installed, you had to change the kernel type inside Windows, which was a pain in the butt. Microsoft did away with that requirement with the Windows Server 2008 release, and now there is only one kernel regardless of the number of CPUs assigned to a server. You can read more about this change here. So, if you are running an older Windows OS, like Windows 2000 Server or Server 2003, you still need to change the kernel type if you go from a single CPU to multiple CPUs or vice versa.

So, why not just give VMs lots of CPUs and let them use what they need? CPU usage is not like memory usage, where a guest will often use all the memory assigned to it for things like pre-fetching. The real problem with assigning too many vCPUs to a VM is scheduling. Unlike memory, which is directly allocated to VMs and is not shared (except for TPS), CPU resources are shared: requests must wait in line to be scheduled by the hypervisor, which finds a free physical CPU/core to handle each one. Handling VMs with a single vCPU is pretty easy: just find a single open CPU/core and hand it over to the VM. With multiple vCPUs it becomes more difficult, as the hypervisor has to find several available CPUs/cores to handle requests. This is called co-scheduling, and over the years VMware has changed how it handles co-scheduling to make it a bit more flexible and relaxed. You can read more about how vSphere handles co-scheduling in this VMware white paper.
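To get a feel for why more vCPUs are harder to schedule, here is a deliberately simplified toy model in Python. It assumes strict co-scheduling, where a VM can only run in a time slice if as many cores as it has vCPUs are free at once. This is not how the actual ESXi scheduler works (as noted, VMware has relaxed co-scheduling over the years), and the sample numbers are invented.

```python
def schedulable_ticks(free_cores_per_tick, vcpus):
    """Count the time slices in which at least `vcpus` cores are free at once.

    Toy model of *strict* co-scheduling only; the real scheduler is more relaxed.
    """
    return sum(1 for free in free_cores_per_tick if free >= vcpus)


# Hypothetical number of free cores observed in eight consecutive time slices.
free_cores = [1, 3, 2, 4, 1, 2, 0, 3]

for vcpus in (1, 2, 4):
    runnable = schedulable_ticks(free_cores, vcpus)
    print(f"{vcpus} vCPU(s): runnable in {runnable} of {len(free_cores)} slices")
```

In this made-up sample, the single-vCPU VM can run in almost every slice, while the 4-vCPU VM only gets one chance, which is the waiting-in-line effect described above.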

When it comes to memory, assigning too much is not a good thing, and there are several reasons for that. The first is that the OS and applications tend to use all available memory for things like caching. All this extra memory usage takes physical host memory away from other VMs. It also makes the hypervisor’s job of conserving memory, via features like TPS and ballooning, more difficult. The second is that when you assign memory to a VM and power it on, you also consume additional disk space. The hypervisor creates a virtual swap (vswp) file in the home directory of the VM, equal in size to the amount of memory assigned to the VM (minus any memory reservation). This file exists to support vSphere’s ability to over-commit memory and assign VMs more than a host is physically capable of supporting. Once a host’s physical memory is exhausted, it starts using the vswp files to make up for the shortage, which slows down performance and puts more stress on the storage array.

So, if you assign 8GB of memory to a VM, once it is powered on, an 8GB vswp file will be created. If you have 100 VMs with 8GB of memory each, that’s 800GB of disk space that you lose from your vSphere datastores. This can chew up a lot of disk space, so limiting the amount of memory that you assign to VMs will also limit the size of the vswp files that get created.
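The vswp math is easy to sketch in Python. This follows the rule described above (assigned memory minus any memory reservation, per powered-on VM); the function names and the list-of-tuples input are just illustrative.

```python
def vswp_size_gb(configured_gb, reservation_gb=0.0):
    """Size of a powered-on VM's vswp file: assigned memory minus its reservation."""
    return max(float(configured_gb) - float(reservation_gb), 0.0)


def total_vswp_gb(vms):
    """Total datastore space used by vswp files for (configured_gb, reservation_gb) pairs."""
    return sum(vswp_size_gb(configured, reserved) for configured, reserved in vms)


# 100 VMs with 8GB each and no reservations, as in the example above.
fleet = [(8, 0)] * 100
print(total_vswp_gb(fleet))  # 800.0
```

Plugging in a reservation shows the other lever: a VM with 8GB assigned and 8GB reserved gets a zero-size vswp file in this calculation.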

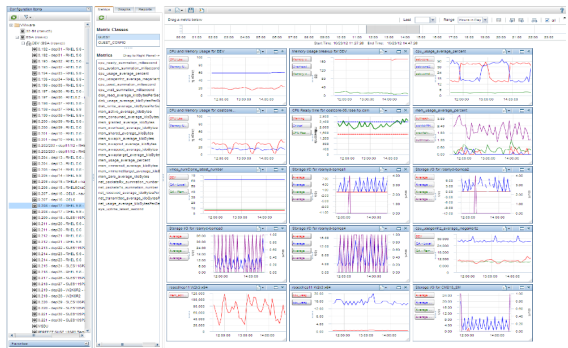

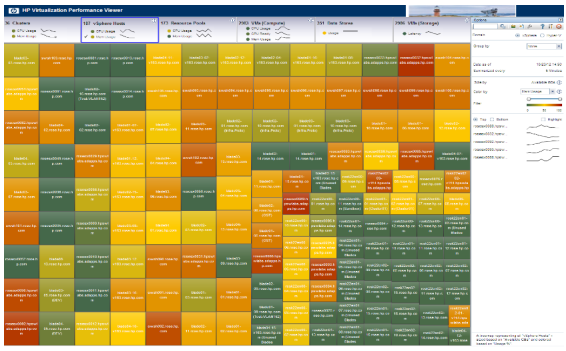

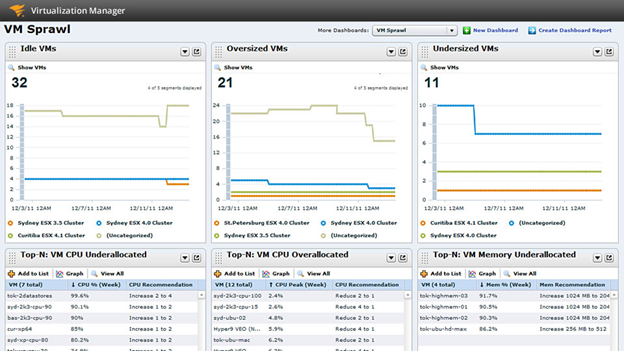

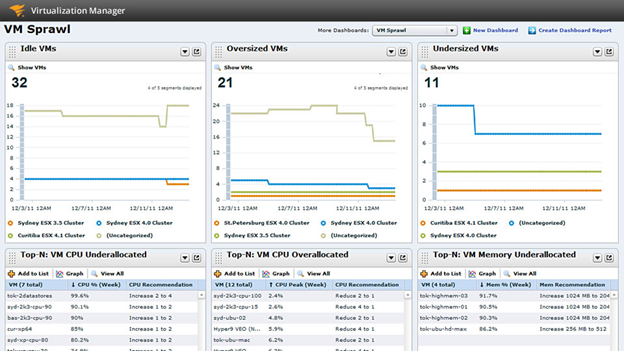

Therefore, the secret to a healthy vSphere environment is to “right-size” VMs. This means assigning them only the resources they need to support their workloads, and not wasting resources. Virtual environments share resources, so you can’t carry over the mindset from physical environments, where having too much memory or too many CPUs is no big deal. How do you know what the right size is? In most cases you won’t know up front, but you can get a fairly good idea of a VM’s resource requirements by combining the typical amount that the guest OS needs with the resource requirements of the applications you are running on it. Start with that estimate. Then the key is to monitor performance to determine which resources a VM is using and which it is not. vCenter Server isn’t really helpful for this, as it doesn’t really do reporting, so third-party tools can make this much easier. I’ve always been impressed by the dashboards that SolarWinds has in their VMware monitoring tool, Virtualization Manager. These dashboards can show you, at a glance, which VMs are under-sized and which are over-sized. Their VM Sprawl dashboard can make it really easy to right-size all the VMs in your environment so you can reallocate resources from VMs that don’t need them to VMs that do.

Another benefit that Virtualization Manager provides is that you can spot idle and zombie VMs that also suck away resources from your environment and need to be dealt with.
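If you want a rough feel for the right-sizing check itself, it can be sketched in Python by comparing what a VM actually uses against what it was given. To be clear, this is not how Virtualization Manager works internally; the VM names, the sample numbers, and the 50%/90% thresholds are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class VmUsage:
    name: str
    allocated_gb: float  # memory assigned to the VM
    peak_used_gb: float  # peak memory actually used over the monitoring period


def classify(vm, low=0.5, high=0.9):
    """Label a VM by how much of its allocation it uses (thresholds are hypothetical)."""
    ratio = vm.peak_used_gb / vm.allocated_gb
    if ratio < low:
        return "over-sized"
    if ratio > high:
        return "under-sized"
    return "right-sized"


# Made-up monitoring data for three hypothetical VMs.
vms = [
    VmUsage("web01", allocated_gb=8, peak_used_gb=2),
    VmUsage("db01", allocated_gb=16, peak_used_gb=15.5),
    VmUsage("app01", allocated_gb=4, peak_used_gb=3),
]

for vm in vms:
    print(f"{vm.name}: {classify(vm)}")
```

The same comparison applies to vCPUs; the point is simply that the decision comes from measured usage rather than from the initial estimate.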

So, effectively managing your CPU and memory resources is really a two-step process. First, don’t go overboard with resources when creating new VMs; try to be a bit conservative to start out. Then, monitor your environment continually with a product like SolarWinds Virtualization Manager so you can see the actual VM resource needs. The beauty of virtualization is that it makes it really easy to add or remove resources from a virtual machine. If you want to get the maximum benefit and the most cost savings from virtualization, right-sizing is the key.

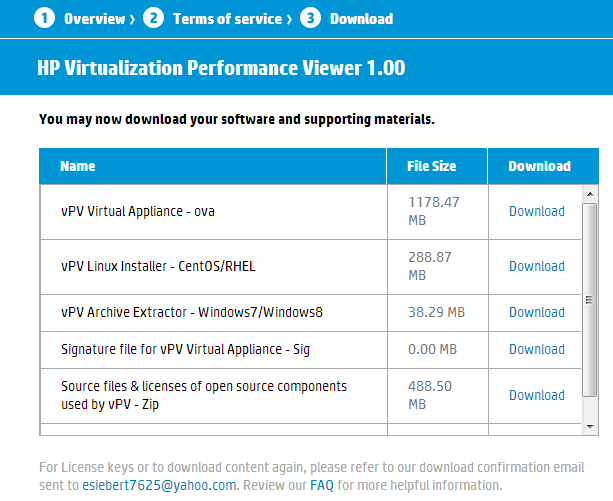

Downloading the OVA file to install the virtual appliance is the easiest way to go. Once you download it, you simply deploy it using the Deploy OVF Template option in the vSphere Client and it will install as a new VM. Once it is deployed and powered on, you can log in to the VM’s OS using the username root and password vperf*viewer if you need to manually configure an IP address. Otherwise, you can connect to the VM and start using vPV at the URL http://<servername>:8081/PV or https://<servername>:8444/PV, which will bring up the user interface so you can get started. I haven’t tried it out yet as it’s still downloading, but here are some screenshots from the vPV webpage:
